Aug 19, 2025

AI scaling laws reveal a core challenge: bigger models deliver better results, but they consume much more energy. This trade-off between performance and energy use is critical for businesses in the U.S., where electricity costs and sustainability pressures are high.

Here’s the key takeaway: scaling up models improves output, but with diminishing returns, so the added energy demand can outweigh the gains. Companies need smarter tools to balance performance, cost, and energy consumption.

Summary of Solutions:

Key Insight: Transparent energy and performance metrics are crucial for smart AI investments. Tools like NanoGPT and GPT-4.1 nano help businesses stay competitive without overspending or overusing resources.

NanoGPT offers a pay-as-you-go system combined with local data storage, giving users more control over their information while placing a strong emphasis on privacy. This setup supports its energy-efficient design, reliable performance, and budget-friendly operation.

NanoGPT stands out by using resources only when they’re needed, cutting down on the energy waste often associated with systems that run non-stop. Unlike traditional models that keep resources active continuously, NanoGPT’s on-demand approach helps reduce unnecessary energy usage.
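To make the on-demand argument concrete, here is a rough back-of-the-envelope sketch. It is not a measurement of NanoGPT or any real deployment: the function name `daily_energy_wh` and the wattage figures in the usage note are hypothetical, chosen only to show how idle time adds to the energy bill of a system that runs non-stop.

```python
def daily_energy_wh(active_hours: float, active_watts: float,
                    idle_watts: float, always_on: bool) -> float:
    """Estimate one day's energy use in watt-hours.

    All inputs are illustrative assumptions, not vendor figures.
    An always-on system draws idle power for the hours it is not serving
    requests; an on-demand system is assumed to draw nothing while idle.
    """
    if not 0 <= active_hours <= 24:
        raise ValueError("active_hours must be between 0 and 24")
    idle_hours = (24 - active_hours) if always_on else 0
    return active_hours * active_watts + idle_hours * idle_watts


# Hypothetical workload: 2 busy hours a day at 300 W, 100 W when idle.
always_on = daily_energy_wh(2, 300, 100, always_on=True)
on_demand = daily_energy_wh(2, 300, 100, always_on=False)
print(f"always-on: {always_on} Wh, on-demand: {on_demand} Wh")
```

With these made-up numbers, most of the always-on total comes from the 22 idle hours, which is the waste an on-demand approach aims to avoid. Real savings depend on how the underlying infrastructure is shared and powered.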

Despite its focus on energy efficiency, NanoGPT doesn’t skimp on performance. It provides access to cutting-edge models like ChatGPT, DeepSeek, Gemini, Flux Pro, DALL-E, and Stable Diffusion, all through a single, streamlined interface. This unified setup lets users switch easily between tasks like text generation and image creation while still getting high-quality results.

With pricing starting at just $0.10, NanoGPT follows a transparent, usage-based billing model. Users only pay for the compute power they actually use, avoiding the fixed costs often associated with subscription plans. This makes it an accessible option for both individuals and businesses looking to manage their AI expenses effectively.

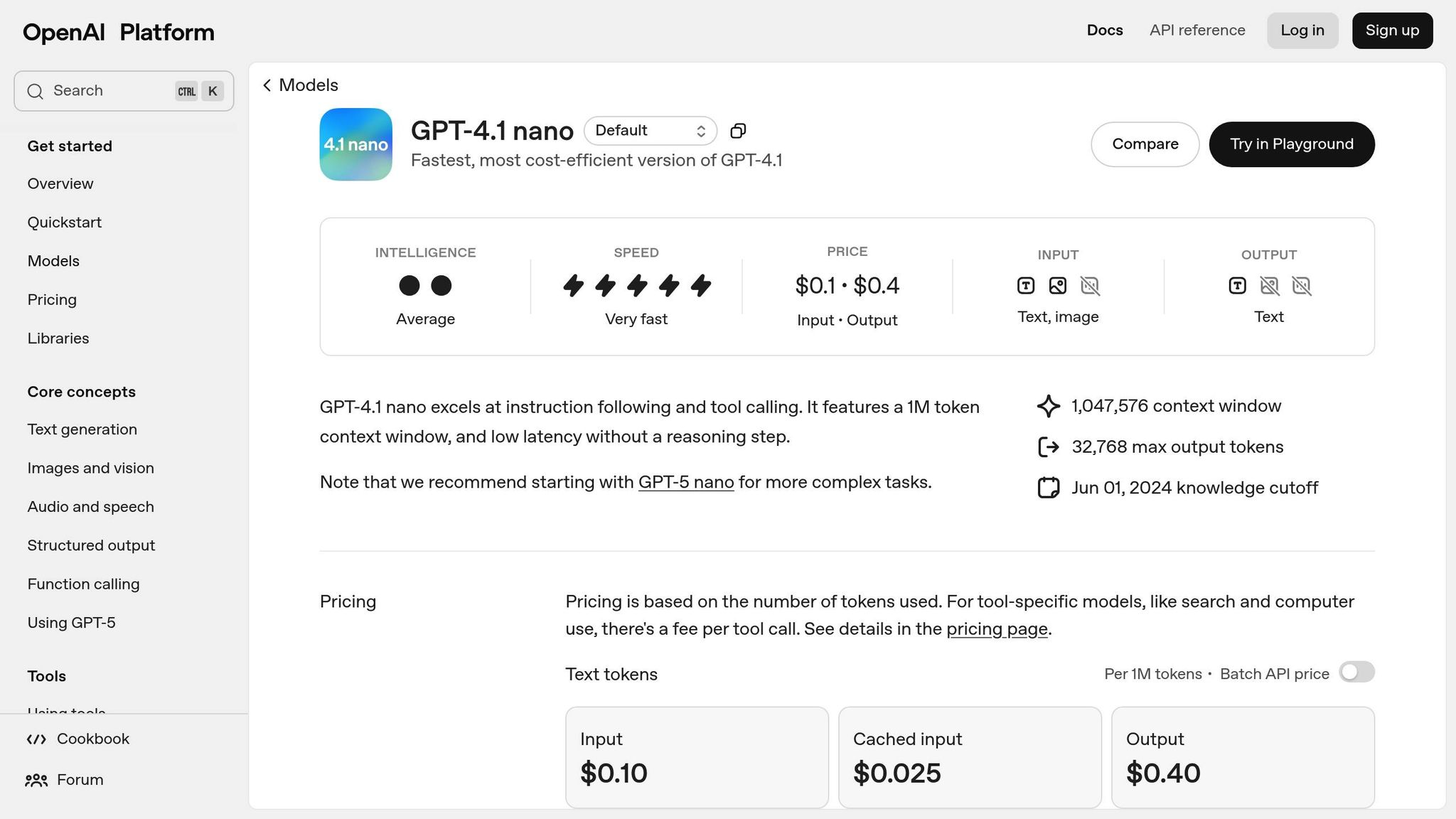

GPT-4.1 nano represents a step forward in balancing powerful AI capabilities with energy-conscious design. Built to align with modern AI scaling principles, this model delivers impressive performance while keeping energy use in check. Its compact and efficient framework ensures advanced functionality without compromising on sustainability.

The architecture of GPT-4.1 nano has been fine-tuned to eliminate unnecessary processing. This means lower energy use during inference while maintaining reliable and consistent task execution.

Even with its smaller size, GPT-4.1 nano proves capable of tackling demanding tasks. It handles intricate reasoning, extended conversations, and code generation in widely used programming languages with notable efficiency.

Thanks to its optimized design, GPT-4.1 nano reduces operational costs, particularly in settings with high usage demands. This makes it an attractive choice for businesses looking to adopt cutting-edge AI without the need for heavy infrastructure investments. The cost savings also align well with its environmentally friendly approach.

By reducing power consumption and lessening the strain on data center cooling systems, GPT-4.1 nano helps lower the overall carbon footprint. This focus on sustainability ensures the model keeps pace with the growing emphasis on energy-conscious technologies.

Compared to offerings like NanoGPT and GPT-4.1 nano, which have well-documented energy and performance metrics, Claude-3.7 Sonnet remains a bit of a mystery. There’s very little public information available about its technical design, energy efficiency strategies, or how its performance scales. Because of this lack of transparency, any evaluation of its energy-performance trade-offs should be approached with caution. Until more details are released, its place within current scaling laws remains unclear and warrants further investigation.

Finding the right balance between operational efficiency and performance is a central focus under AI scaling laws. For NanoGPT, its standout features include a straightforward pay-as-you-go pricing structure and a strong emphasis on user privacy. These elements make it accessible while addressing growing concerns over data security.

With NanoGPT's subscription-free, usage-based billing, users can start with minimal investment and scale costs as their needs grow. This pricing approach not only lowers barriers to entry but also offers clarity, allowing users to manage expenses effectively. Additionally, NanoGPT prioritizes privacy by storing data locally, a measure that resonates with today's heightened demand for data protection.

However, these advantages also highlight broader challenges in the AI industry, particularly around transparency. Many platforms fail to provide detailed energy consumption and performance data, despite promoting efficiency or advanced capabilities. This lack of openness can leave organizations struggling to evaluate costs, benefits, or environmental impacts accurately. In contrast, NanoGPT's clear value proposition becomes even more appealing when compared to models with less transparent performance metrics.

AI scaling laws reveal a tricky balancing act: while larger models deliver stronger performance, they also demand significantly higher resources, leading to tough decisions for organizations in the United States.

NanoGPT's usage-based pricing model offers a practical solution by allowing businesses to experiment with different AI models without locking into costly subscriptions. This pay-as-you-go approach makes it easier to test and identify models that strike the right balance between energy use and performance for specific needs. Such flexibility aligns well with the growing focus on cost efficiency and meeting environmental and regulatory expectations.

By prioritizing a privacy-first design, NanoGPT ensures data is stored locally, addressing the increasingly strict data protection regulations in the U.S. This approach not only simplifies compliance but also reduces hidden risks tied to data security.

For U.S. businesses, demanding clear energy and performance metrics is crucial. Platforms that provide transparent usage data and adaptable pricing empower organizations to make smarter AI investments. This not only helps manage budgets but also supports efforts to meet ecological goals.
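As an illustration of how published metrics could feed a decision, the sketch below picks the lowest-energy model that still meets a quality floor and a per-request budget. Everything here is hypothetical: the `ModelMetrics` fields, the `pick_model` helper, and the candidate numbers are placeholders for whatever figures a vendor actually publishes.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ModelMetrics:
    """Per-model figures a buyer might collect (all values are assumptions)."""
    name: str
    quality: float          # task score in [0, 1], higher is better
    wh_per_request: float   # energy per request, in watt-hours
    usd_per_request: float  # cost per request, in US dollars


def pick_model(candidates: Iterable[ModelMetrics], min_quality: float,
               max_usd_per_request: float) -> Optional[ModelMetrics]:
    """Return the lowest-energy model that meets the quality and budget limits.

    Ties on energy are broken by lower cost. Returns None if nothing qualifies.
    """
    eligible = [
        m for m in candidates
        if m.quality >= min_quality and m.usd_per_request <= max_usd_per_request
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda m: (m.wh_per_request, m.usd_per_request))


# Made-up candidates, for illustration only.
candidates = [
    ModelMetrics("large", quality=0.92, wh_per_request=3.0, usd_per_request=0.020),
    ModelMetrics("medium", quality=0.88, wh_per_request=1.2, usd_per_request=0.006),
    ModelMetrics("small", quality=0.75, wh_per_request=0.4, usd_per_request=0.001),
]
choice = pick_model(candidates, min_quality=0.85, max_usd_per_request=0.01)
print(choice.name if choice else "no model meets the constraints")
```

The point of the sketch is the dependency, not the numbers: without transparent energy and performance data, the `wh_per_request` and `quality` columns cannot be filled in, and the comparison cannot be made at all.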

Ultimately, there’s no universal solution in AI scaling. Success lies in tailoring the right model to match business objectives while keeping a close eye on total costs and resource demands.

NanoGPT’s pay-as-you-go model is designed to be energy-conscious, ensuring users are only charged for the exact AI model usage they require. This approach avoids the unnecessary energy consumption that comes with running large-scale systems continuously, especially during low-demand periods.

By providing on-demand access to AI models, NanoGPT significantly cuts down on idle resource usage, helping to lower the overall energy impact of AI operations. This model not only supports energy-saving practices but also offers users a more flexible and budget-friendly option.

GPT-4.1 nano is designed to be both easy on your wallet and mindful of energy use. With pricing starting at just $0.10 per 1 million input tokens and $0.40 per 1 million output tokens, it offers an attractive option for cost-conscious users. Plus, a 75% discount on cached inputs (about $0.025 per 1 million cached input tokens) means repetitive tasks become even more economical.
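Those per-token rates are easy to turn into a quick budget estimate. The sketch below uses only the prices quoted above; the `estimate_cost` helper and the sample token counts are illustrative, and actual bills depend on the provider's current price list and on how much input really hits the cache.

```python
# Prices quoted in the text, in USD per 1 million tokens.
INPUT_PRICE_PER_M = 0.10
OUTPUT_PRICE_PER_M = 0.40
CACHED_INPUT_DISCOUNT = 0.75  # cached input is billed at 25% of the input price


def estimate_cost(input_tokens: int, output_tokens: int,
                  cached_input_tokens: int = 0) -> float:
    """Estimate the USD cost of a workload.

    cached_input_tokens is the portion of input_tokens served from cache.
    """
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")
    fresh_input = input_tokens - cached_input_tokens
    cached_price = INPUT_PRICE_PER_M * (1 - CACHED_INPUT_DISCOUNT)
    total = (
        fresh_input * INPUT_PRICE_PER_M
        + cached_input_tokens * cached_price
        + output_tokens * OUTPUT_PRICE_PER_M
    )
    return total / 1_000_000


# Hypothetical month: 50M input tokens (half of them cached), 10M output tokens.
print(f"${estimate_cost(50_000_000, 10_000_000, cached_input_tokens=25_000_000):.2f}")
```

Because output tokens cost four times as much as input tokens at these rates, trimming response length usually moves the total more than trimming prompts does.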

When it comes to energy consumption, GPT-4.1 nano shines. It uses only about 0.454 Wh to handle a lengthy prompt, making it one of the most energy-conscious models out there. This blend of affordability and minimal energy use makes it a smart pick for anyone focused on balancing sustainability with budget-friendly solutions.
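To put the 0.454 Wh figure in context, it helps to scale it up to a realistic request volume. The sketch below assumes the figure applies per lengthy prompt, which is how the text reads; the `energy_kwh` helper and the request count are illustrative, not reported data.

```python
WH_PER_LONG_PROMPT = 0.454  # figure quoted in the text, assumed to be per prompt


def energy_kwh(num_prompts: int, wh_per_prompt: float = WH_PER_LONG_PROMPT) -> float:
    """Convert a number of prompts into total energy in kilowatt-hours."""
    if num_prompts < 0:
        raise ValueError("num_prompts must be non-negative")
    return num_prompts * wh_per_prompt / 1000  # 1 kWh = 1000 Wh


# Hypothetical volume: one million lengthy prompts.
print(f"{energy_kwh(1_000_000):.1f} kWh")
```

At that assumed volume the total comes to roughly 454 kWh, a number that can be set against a sustainability target or compared with the per-prompt figures of other models, where those are published.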

Understanding energy and performance metrics is crucial for businesses aiming to make smart decisions about their AI models. These metrics help evaluate how efficiently energy is being used and how well a model performs, enabling companies to select solutions that align with their operational priorities and sustainability objectives.

When these metrics are transparent, they create trust, ensure accountability, and help businesses stay in line with environmental regulations. This openness also drives the adoption of AI technologies that balance strong performance with responsible energy use, paving the way for long-term progress and responsible innovation.