Jan 18, 2026

Managing costs in multi-tenant SaaS platforms can be tricky, but it's essential for profitability and scalability.

Key takeaway: Clear visibility, smart resource allocation, and automation are essential for managing costs in multi-tenant environments while maintaining performance and profitability.

Allocating resources effectively ensures tenants get exactly what they need, when they need it, while minimizing wasted capacity. Achieving this balance requires a careful strategy to distribute and scale infrastructure efficiently.

Dynamic resource allocation adjusts capacity in real time through horizontal scaling (adding more instances) and vertical scaling (increasing the capacity of existing resources). Horizontal scaling is especially useful when accommodating a growing number of tenants.

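In Kubernetes, two autoscalers manage this process: the Horizontal Pod Autoscaler (HPA), which changes the number of pod replicas, and the Vertical Pod Autoscaler (VPA), which adjusts the CPU and memory requests of existing pods.

Start with HPA for scaling replicas. Introduce VPA only when absolutely necessary, and avoid running both against the same resource metric (such as CPU) for the same workload, because the two controllers can conflict.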

Event-driven scaling provides a more targeted approach compared to traditional CPU or memory-based triggers. By scaling based on specific activities, such as queue length or stream lag, you can better align resources with actual demand. To avoid rapid scaling fluctuations caused by temporary spikes, implement cooldown periods between scaling actions.
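As a rough illustration of queue-driven scaling with a cooldown, here is a minimal Python sketch. The class name, the `target_per_worker` figure, and the 300-second cooldown are assumptions chosen for the example, not values from any particular autoscaler.

```python
import math
from dataclasses import dataclass


@dataclass
class QueueScaler:
    """Sizes a worker pool from queue length, with a cooldown between actions."""
    target_per_worker: int = 100      # assumed: messages one worker can absorb
    min_workers: int = 1
    max_workers: int = 20
    cooldown_seconds: float = 300.0   # assumed cooldown between scaling actions
    workers: int = 1
    last_scaled_at: float = float("-inf")

    def desired_workers(self, queue_length: int) -> int:
        # Aim for roughly target_per_worker messages per worker, within bounds.
        wanted = math.ceil(queue_length / self.target_per_worker)
        return max(self.min_workers, min(self.max_workers, wanted))

    def evaluate(self, queue_length: int, now: float) -> int:
        """Return the worker count to run at time `now` (in seconds)."""
        wanted = self.desired_workers(queue_length)
        in_cooldown = now - self.last_scaled_at < self.cooldown_seconds
        if wanted != self.workers and not in_cooldown:
            self.workers = wanted
            self.last_scaled_at = now
        return self.workers


scaler = QueueScaler()
print(scaler.evaluate(queue_length=950, now=0))    # backlog: scales out
print(scaler.evaluate(queue_length=50, now=60))    # backlog gone, still cooling down
print(scaler.evaluate(queue_length=50, now=400))   # cooldown elapsed: scales in
```

This sketch applies the same cooldown in both directions. Production autoscalers often scale out quickly and scale in more cautiously, which would mean separate cooldowns per direction.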

The Azure Well-Architected Framework captures this concept well:

The goal of cost optimizing scaling is to scale up and out at the last responsible moment and to scale down and in as soon as it's practical.

Next, let’s explore ways to fine-tune resource allocation and reduce unnecessary provisioning.

Dynamic allocation helps with scaling, but preventing over-provisioning is key to controlling costs. Over-provisioning occurs when resources exceed actual tenant needs, leading to wasted spend. The solution? Right-size resources based on usage patterns rather than planning for worst-case scenarios. Queue-based load leveling can help smooth out demand spikes, reducing the need for excessive resources.
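To make the difference between peak-based and usage-based sizing concrete, here is a small Python sketch. The usage samples are made up, and the 95th percentile with 20% headroom is an assumed policy rather than a recommendation from the sources quoted here.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list (pct in the range 0-100)."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def right_size(cpu_samples: list[float], pct: float = 95, headroom: float = 0.2) -> float:
    """Suggest an allocation: the chosen percentile plus a safety margin."""
    return round(percentile(cpu_samples, pct) * (1 + headroom), 2)


# Hypothetical vCPU usage samples for one workload, with one brief spike to 8.0.
usage = [1.0, 1.2, 1.1, 1.3, 1.0, 1.4, 1.2, 1.1, 1.3, 1.2,
         1.0, 1.1, 1.2, 1.3, 1.4, 1.1, 1.2, 1.0, 1.3, 8.0]

print("peak-based size:", max(usage))
print("p95-based size: ", right_size(usage))
```

Sizing for the single spike would reserve several times the capacity the workload normally uses. The percentile approach leaves the spike to be absorbed by autoscaling or queue-based load leveling.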

Another important strategy is designing for statelessness. Stateless application tiers allow you to quickly add, remove, or reallocate workers between tenants without worrying about data loss. External caching solutions like Redis can support this approach by enabling low-latency data retrieval while maintaining a stateless compute tier. This also eliminates challenges like session affinity.

After allocating resources effectively, keeping a close eye on costs becomes crucial for managing expenses. To make this work, businesses need accurate, tenant-level cost tracking to pinpoint which customers are profitable and which might be draining resources. As the AWS Well-Architected Framework explains:

A SaaS business needs to have a clear picture of how tenants are influencing costs to make strategic decisions about how to build, sell, and operate their SaaS application.

The starting point for cost visibility lies in resource tagging. By adding metadata like tenant-id for specific resources or shard-id for shared infrastructure, you can accurately filter and assign costs. For containerized workloads on ECS or EKS, split cost allocation data makes it possible to break down shared compute and memory expenses for individual business units or teams.
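A tag-based rollup can be sketched in a few lines of Python. The line-item structure below is hypothetical; real billing exports have many more columns, and their field names differ by provider.

```python
from collections import defaultdict

# Hypothetical billing line items; field names are illustrative only.
line_items = [
    {"service": "compute", "cost": 120.0, "tags": {"tenant-id": "acme"}},
    {"service": "database", "cost": 80.0, "tags": {"tenant-id": "globex"}},
    {"service": "compute", "cost": 60.0, "tags": {"shard-id": "shard-7"}},
    {"service": "storage", "cost": 15.0, "tags": {}},
]


def costs_by_tag(items, tag_key):
    """Sum cost per value of `tag_key`; items without the tag go to 'untagged'."""
    totals = defaultdict(float)
    for item in items:
        totals[item["tags"].get(tag_key, "untagged")] += item["cost"]
    return dict(totals)


print(costs_by_tag(line_items, "tenant-id"))
```

The size of the `untagged` bucket is a useful signal in its own right: it shows how much spend still has to be allocated by other means.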

However, when tagging alone isn't enough - like with shared microservices - application instrumentation can step in. Applications can log telemetry data such as API request counts, IOPS, or data usage to calculate each tenant's share of consumption. Standard application monitoring tools often lack the precision needed for billing, so instead, consumption data should be logged and processed asynchronously to maintain both accuracy and performance.
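One way to keep metering off the request path is to enqueue usage events and aggregate them in the background. The Python sketch below uses an in-process queue and a worker thread as a stand-in for a real event pipeline. The function names and metric names are assumptions for illustration.

```python
import queue
import threading
from collections import Counter

events = queue.Queue()   # buffer between the request path and aggregation
usage = Counter()        # (tenant_id, metric) -> total quantity


def record_usage(tenant_id: str, metric: str, quantity: int = 1) -> None:
    """Called on the request path: enqueue the event and return immediately."""
    events.put((tenant_id, metric, quantity))


def aggregate_forever() -> None:
    """Background worker: drain events and update per-tenant totals."""
    while True:
        tenant_id, metric, quantity = events.get()
        usage[(tenant_id, metric)] += quantity
        events.task_done()


threading.Thread(target=aggregate_forever, daemon=True).start()

record_usage("acme", "api_requests")
record_usage("acme", "api_requests")
record_usage("globex", "bytes_stored", 2048)

events.join()            # wait until the worker has processed everything queued
print(dict(usage))
```

An in-memory queue loses events if the process crashes, so a billing-grade pipeline would typically write events to durable storage or a message broker instead.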

Tracking costs at the tenant level is vital for accountability and smarter pricing strategies. The method you choose depends on how your infrastructure is set up. Account-based isolation offers the easiest and most accurate solution by assigning a dedicated cloud account to each tenant or business unit, ensuring clear cost separation. For more complex setups, tag-based allocation can provide detailed tracking but requires strict tagging practices and governance.

| Allocation Model | Effort Level | Accuracy | Best Use Case |
|---|---|---|---|
| Account-based | Low | High | Suitable for organizations with separate accounts per tenant |
| Business Unit-based | Moderate | High | Works well for grouping multiple accounts under a single team or entity |
| Tag-based | High | High | Ideal for mixed account structures needing granular tracking |
| Instrumentation-based | Very High | Approximate | Useful for shared infrastructure where tagging isn't feasible |

Analyzing the relationship between infrastructure costs and tenant revenue or usage tiers can reveal imbalances. For instance, in some SaaS models, the "Basic" tier might consume more resources than higher-revenue "Advanced" tiers. Insights like these can help adjust pricing or resource limits before profitability takes a hit.
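A simple margin check per tier shows how such an imbalance surfaces. The revenue and cost figures in this Python sketch are invented for illustration, and the 50% target is an assumed threshold.

```python
# Hypothetical monthly figures per tier; the numbers are for illustration only.
tiers = {
    "Basic":    {"revenue": 20_000.0, "infra_cost": 14_000.0},
    "Advanced": {"revenue": 60_000.0, "infra_cost": 12_000.0},
}


def gross_margin(revenue: float, infra_cost: float) -> float:
    """Share of revenue left after infrastructure cost."""
    return (revenue - infra_cost) / revenue


def tiers_below_target(tiers: dict, target: float) -> list[str]:
    """Names of tiers whose margin falls below `target`."""
    return [name for name, t in tiers.items()
            if gross_margin(t["revenue"], t["infra_cost"]) < target]


for name, t in tiers.items():
    print(f"{name}: {gross_margin(t['revenue'], t['infra_cost']):.0%}")
print("below 50% target:", tiers_below_target(tiers, 0.50))
```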

Focus your tracking efforts on services that have the biggest impact. If Amazon S3, for example, only accounts for 1% of your total bill, investing engineering resources in tenant-level tracking for it may not make sense. Prioritize the services driving the majority of your costs.

When it comes to cost accountability, showback and chargeback serve different purposes. Showback is all about transparency - it reports costs to specific entities, like business units or tenants, but doesn't involve actual payments. Chargeback, on the other hand, goes a step further by recovering costs through internal accounting, such as journal vouchers or financial systems. As an AWS whitepaper explains:

Showback is about presentation, calculation, and reporting of charges incurred by a specific entity... Chargeback is about an actual charging of incurred costs to those entities via an organization's internal accounting processes.

| Model | Primary Goal | Payment Flow |
|---|---|---|
| Showback | Visibility & Awareness | No funds are transferred between departments |
| Chargeback | Accountability & Recovery | Departments are billed for their actual usage |

For shared resources, you can either split costs evenly or meter usage precisely using application telemetry. The choice depends on whether you need approximate or exact measurements. As Microsoft's Azure documentation advises:

By understanding the goal of measuring consumption by a tenant, you can determine whether cost allocations need to be approximate or precise.
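The two options for shared resources, an even split and a metered split, can be compared side by side. This Python sketch uses hypothetical tenant names and request counts, and treats request count as the usage measure, which is an assumption.

```python
def split_evenly(total_cost: float, tenants: list[str]) -> dict[str, float]:
    """Approximate allocation: every tenant carries the same share."""
    share = total_cost / len(tenants)
    return {tenant: share for tenant in tenants}


def split_by_usage(total_cost: float, usage: dict[str, float]) -> dict[str, float]:
    """Metered allocation: each tenant pays in proportion to measured usage."""
    total_usage = sum(usage.values())
    return {tenant: total_cost * used / total_usage for tenant, used in usage.items()}


# Hypothetical request counts for a shared service costing 900 per month.
requests = {"acme": 700_000, "globex": 200_000, "initech": 100_000}

print(split_evenly(900.0, list(requests)))
print(split_by_usage(900.0, requests))
```

With these numbers the even split charges each tenant 300, while the metered split charges the heaviest tenant 630 and the lightest 90. Whether that difference justifies the metering effort depends on what the allocation is used for.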

Automating governance is essential to prevent untagged resources from slipping through. Tools like Azure Policy or AWS Service Control Policies can enforce mandatory tagging standards. Assign roles carefully: for example, "Cost Management Reader" for stakeholders needing visibility and "Cost Management Contributor" for those managing budgets.
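Policy engines enforce tagging at creation time. A periodic audit can complement them by catching resources that predate the policy. The Python sketch below is a stand-in for that kind of audit, not an example of Azure Policy or Service Control Policy syntax. The required tag set and the inventory are hypothetical.

```python
REQUIRED_TAGS = {"tenant-id", "cost-center"}   # assumed tagging standard


def missing_tags(resource_tags: dict[str, str]) -> set[str]:
    """Required tag keys that are absent or empty on a resource."""
    return {key for key in REQUIRED_TAGS if not resource_tags.get(key, "").strip()}


def audit(resources: dict[str, dict[str, str]]) -> dict[str, set[str]]:
    """Map each non-compliant resource name to the tags it is missing."""
    report = {}
    for name, tags in resources.items():
        missing = missing_tags(tags)
        if missing:
            report[name] = missing
    return report


# Hypothetical inventory of resource name -> tags.
inventory = {
    "vm-api-01": {"tenant-id": "acme", "cost-center": "cc-42"},
    "bucket-logs": {"tenant-id": "globex"},
    "db-shared": {},
}
print(audit(inventory))
```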

Cloud-native tools make it easier to analyze costs and detect anomalies. AWS offers options like Cost Explorer, the Cost & Usage Report (CUR), and Billing Conductor, while Azure provides Cost Management + Billing.

| Tool Category | AWS Tools | Azure Tools |
|---|---|---|
| Core Analysis | AWS Cost Explorer, Cost & Usage Report (CUR) | Azure Cost Management + Billing |
| Shared Resource Tracking | AWS Application Cost Profiler | Custom Telemetry (Event Hubs + Stream Analytics) |
| Anomaly Detection | AWS Cost Anomaly Detection | Azure Advisor Cost Recommendations |
| Real-time Metrics | Amazon CloudWatch, Data Firehose | Azure Monitor, Event Hubs |

Set budget alerts at three levels: 90% for ideal spend, 100% for hitting the target, and 110% for exceeding the budget. For forecasts, alerts should trigger at 110% of the target budget to provide early warnings about potential overruns.
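The threshold logic is simple enough to sketch directly. In this Python example the function name and message wording are assumptions. The thresholds are the 90%, 100%, and 110% levels described above, kept as whole percentages to avoid floating-point edge cases at the boundaries.

```python
ACTUAL_THRESHOLDS_PCT = (90, 100, 110)   # percent of budget, for actual spend
FORECAST_THRESHOLD_PCT = 110             # percent of budget, for forecast spend


def budget_alerts(budget: float, actual: float, forecast: float) -> list[str]:
    """Return one message per threshold that spend has reached."""
    alerts = [
        f"actual spend reached {pct}% of budget"
        for pct in ACTUAL_THRESHOLDS_PCT
        if actual * 100 >= budget * pct
    ]
    if forecast * 100 >= budget * FORECAST_THRESHOLD_PCT:
        alerts.append(f"forecast reached {FORECAST_THRESHOLD_PCT}% of budget")
    return alerts


print(budget_alerts(budget=10_000, actual=9_200, forecast=11_500))
```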

Machine learning-based anomaly detection can help spot unusual spending patterns, such as "noisy neighbor" tenants consuming more than their fair share of resources. This allows you to address the issue before it affects profitability.

Some expenses, like Reserved Instance fees or Savings Plans, can't be tagged upfront. These should be tracked retrospectively by filtering amortized costs using relevant tags in billing reports. To manage the storage cost of the usage data itself, aggregate older data points.

Multi-Tenant Database Models: Cost vs Isolation Comparison

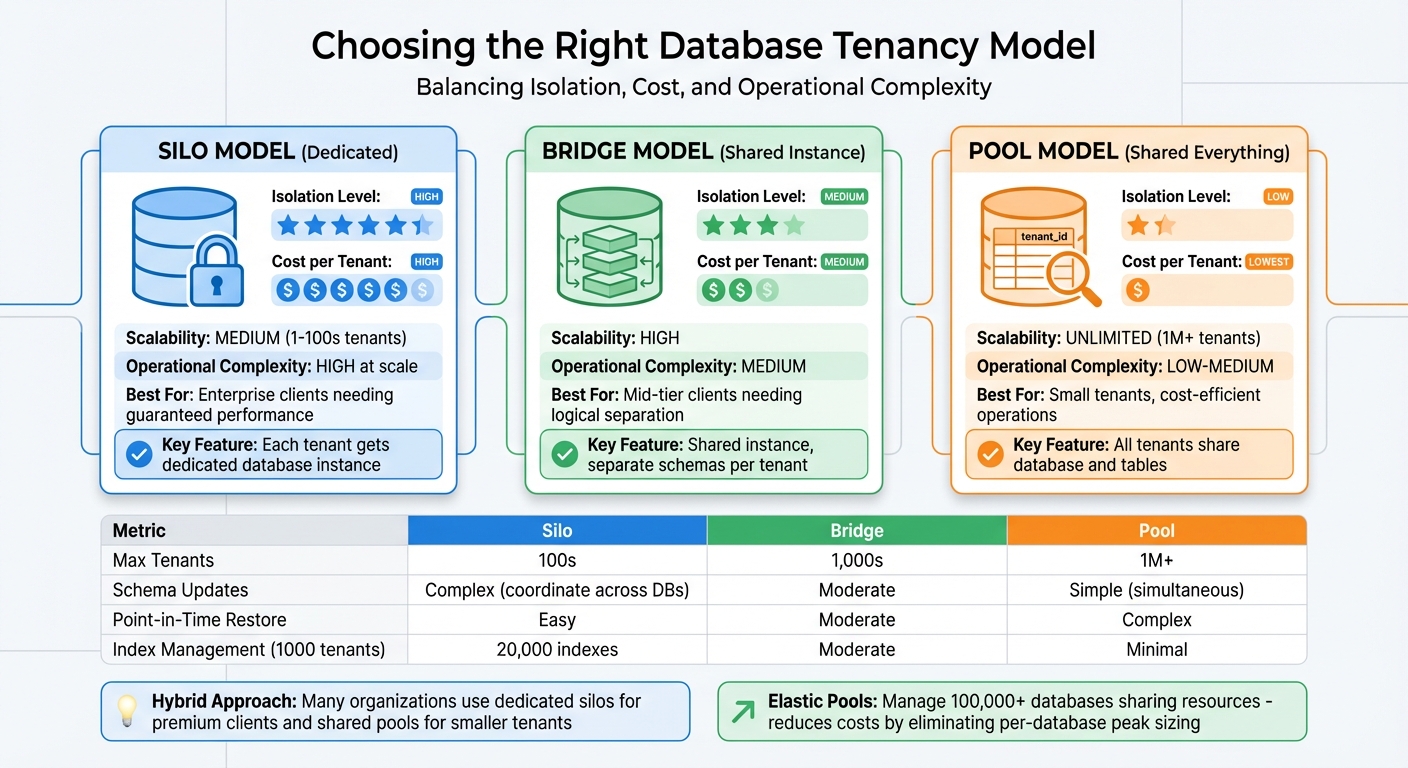

The way a database is structured plays a big role in managing costs and ensuring tenant performance. Striking the right balance between isolation, cost, and complexity requires a clear understanding of the trade-offs between various database models.

Deciding on the right database tenancy model is essential for optimizing both cost and performance. There are three main models - Silo, Bridge, and Pool - each offering a different balance of isolation, cost, and complexity.

In the Pool model, all tenants share the same database and tables, with a tenant_id column used to differentiate their data. This approach is cost-efficient but sacrifices isolation.
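A shared table keyed by `tenant_id` can be sketched with Python's built-in `sqlite3` module. The table, the index, and the `invoices_for` helper are hypothetical names for the example. SQLite has no row-level security, so here the tenant filter lives in application code.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE invoices (id INTEGER PRIMARY KEY, tenant_id TEXT NOT NULL, amount REAL)"
)
# An index on tenant_id keeps per-tenant lookups from scanning every tenant's rows.
conn.execute("CREATE INDEX idx_invoices_tenant ON invoices (tenant_id)")
conn.executemany(
    "INSERT INTO invoices (tenant_id, amount) VALUES (?, ?)",
    [("acme", 100.0), ("acme", 250.0), ("globex", 75.0)],
)


def invoices_for(tenant_id: str) -> list[tuple]:
    """All reads go through a helper that always filters on tenant_id."""
    rows = conn.execute(
        "SELECT id, amount FROM invoices WHERE tenant_id = ? ORDER BY id",
        (tenant_id,),
    )
    return rows.fetchall()


print(invoices_for("acme"))
print(invoices_for("globex"))
```

Relying on every query to include the filter is fragile. Databases that support row-level security can enforce the same predicate at the database layer instead.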

Many organizations use a hybrid approach, where high-traffic or premium clients are placed in dedicated silos, while smaller tenants are consolidated into shared pools. For those using a database-per-tenant model, elastic pools are a game-changer. They allow multiple databases to share resources, reducing costs by eliminating the need to size each database for its peak load. For example, Azure SQL Database elastic pools can manage over 100,000 databases, making this method scalable even for large operations. Similarly, serverless options like Aurora Serverless automatically scale compute resources, cutting costs during idle periods.

Operational complexity varies across these models. In a dedicated setup with 1,000 tenants, each having 20 indexes, managing 20,000 indexes becomes a massive task - one that often requires automated tools. Schema updates in dedicated models can be cumbersome, requiring coordination across many databases, while shared models enable simultaneous updates but carry a higher risk of issues. Additionally, point-in-time restores are straightforward in dedicated databases but far more complex in shared setups, where isolating specific tenant data from a backup can be challenging.

| Tenancy Model | Isolation Level | Cost per Tenant | Scalability | Operational Complexity |
|---|---|---|---|---|
| Standalone (Silo) | High | High | Medium (1–100s) | High at scale |
| Database-per-tenant | High | Low (with pools) | High (100,000+) | Low-Medium |
| Sharded Multi-tenant | Low | Lowest | Unlimited (1M+) | High for individual management |

These considerations set the stage for strategies to address performance challenges.

The "noisy neighbor" issue arises when one tenant's heavy resource usage - whether CPU, memory, or I/O - impacts the performance of others sharing the same infrastructure. To tackle this, start by establishing a baseline for normal resource usage per tenant. Monitoring telemetry can help identify spikes or failures linked to specific tenants' activity patterns. Even small workloads can create bottlenecks if their peaks align.

Throttling and rate limiting are effective ways to prevent a single tenant from monopolizing resources. For example, you can limit query execution times, cap the number of records returned, or schedule heavy workloads during off-peak hours to free up resources for critical tasks.
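Per-tenant rate limiting is commonly built on a token bucket. The text above does not name a specific algorithm, so treat this Python sketch as one possible approach. The class names and the rate and capacity values are assumptions.

```python
import time
from typing import Optional


class TokenBucket:
    """Allows bursts up to `capacity` and a sustained `rate` of requests per second."""

    def __init__(self, rate: float, capacity: float, now: float):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.updated_at = now

    def allow(self, now: float, cost: float = 1.0) -> bool:
        # Refill for the time elapsed since the last call, capped at capacity.
        elapsed = now - self.updated_at
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated_at = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class TenantRateLimiter:
    """One bucket per tenant, so a busy tenant only exhausts its own quota."""

    def __init__(self, rate: float, capacity: float):
        self.rate, self.capacity = rate, capacity
        self.buckets: dict[str, TokenBucket] = {}

    def allow(self, tenant_id: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        if tenant_id not in self.buckets:
            self.buckets[tenant_id] = TokenBucket(self.rate, self.capacity, now)
        return self.buckets[tenant_id].allow(now)


limiter = TenantRateLimiter(rate=1.0, capacity=3)
print([limiter.allow("acme", now=0.0) for _ in range(5)])   # burst of 5 at t=0
print(limiter.allow("globex", now=0.0))                      # other tenant unaffected
print(limiter.allow("acme", now=2.0))                        # refilled after 2 seconds
```

A rejected request would normally be answered with an explicit "too many requests" response so that clients can back off and retry.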

Transparency is key - clients should be informed about throttling mechanisms or usage quotas so they can handle failed requests gracefully.

In database-per-tenant models, elastic pools offer a practical solution by isolating performance through pool-level settings while sharing resources among multiple databases. These pools can host up to 1,000 databases and scale to millions of tenants by using multiple pools. For shared databases, Row-Level Security (RLS) ensures that queries only return data for the relevant tenant, safeguarding both privacy and performance.

Other strategies include API throttling and tenant rebalancing, which optimizes resource allocation by grouping tenants with complementary workloads on the same shard. Offering tiered isolation is another option, allowing high-traffic tenants to upgrade to dedicated resources by moving them to a silo model. Infrastructure-level controls, such as Kubernetes pod limits or Azure Service Fabric governance, can enforce resource boundaries. However, as Microsoft points out:

"Sharing a single resource inherently carries the risk of noisy neighbor problems that you can't completely avoid."

These database-level measures work hand-in-hand with broader automation and AI-driven approaches to manage costs effectively.

Managing costs in multi-tenant environments can be a daunting task, especially when dealing with hundreds or even thousands of tenants. Manual methods simply can't keep up. That's where automation and AI step in, simplifying processes and helping teams move from constantly putting out fires to proactively managing budgets.

Autoscaling ensures resources match tenant demand, scaling up during busy periods and down during lulls. But it doesn't stop there. Automated systems can also handle tasks like applying savings plans, decommissioning unused resources, and fine-tuning configurations - all without manual effort.

Governance tools play a crucial role too. For instance, they can enforce tagging policies, ensuring that every resource includes required tags like "tenant-id" or "cost-center." This makes every dollar spent traceable. Regular automated scans further help by identifying orphaned resources - those no longer in use but still racking up charges. Budget alerts at key thresholds (like 90%, 100%, and 110%) can also catch potential overspending before it spirals out of control.

These automated processes lay the groundwork for even more in-depth cost analysis through AI.

AI tools take cost management to the next level by providing detailed insights into spending patterns. They continuously monitor expenses, comparing current hourly usage against up to two years of historical data to define what "normal" spending looks like. If spending deviates from these baselines, anomaly alerts are triggered.
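Commercial tools use far richer models, but the core idea of comparing current spend with a historical baseline can be shown with a z-score check. This Python sketch is a deliberately simple stand-in. The spend figures and the threshold of three standard deviations are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], current: float, z_threshold: float = 3.0) -> bool:
    """Flag `current` when it sits more than `z_threshold` standard
    deviations above the mean of `history`."""
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return current > baseline
    return (current - baseline) / spread > z_threshold


# Hypothetical hourly spend for one tenant, in dollars.
hourly_spend = [10.2, 9.8, 10.5, 10.1, 9.9, 10.3, 10.0, 9.7, 10.4, 10.1]

print(is_anomalous(hourly_spend, 10.6))   # within normal variation
print(is_anomalous(hourly_spend, 18.0))   # far above the baseline
```

A fixed baseline like this ignores daily and weekly seasonality, which is one reason production systems learn from much longer histories.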

Machine learning also predicts future costs by analyzing historical trends, flagging potential overruns before they happen. A standout example is CloudZero, which oversees more than $14 billion in infrastructure spending. Pete Rubio, SVP of Platform and Engineering at Rapid7, highlighted its impact:

CloudZero has been a game-changer for us in managing the rapidly growing costs of our AI initiatives... It's not just about saving money - it's about enabling innovation while maintaining financial accountability and control.

CloudZero goes even further by calculating precise costs down to the tenant, feature, or even individual AI token level. This granular visibility is vital for SaaS companies looking to protect their margins. Josh Collier, FinOps Lead at Superhuman, explained:

CloudZero has been instrumental in providing the granular visibility we need to optimize our AI infrastructure costs while maintaining our service quality.

NanoGPT offers a great example of cost-efficient operations in multi-tenant platforms. Their pay-as-you-go pricing model eliminates the waste of fixed-price plans, especially for workloads that fluctuate. With a minimum cost of $0.10 and no subscription fees, users only pay for what they use - whether it's ChatGPT, DeepSeek, Gemini, Flux Pro, DALL-E, or Stable Diffusion.

This model relies on automated scaling to adjust resources in real time. During high-demand periods, resources ramp up to maintain performance, while during quieter times, they scale down to save costs. Additionally, by storing data locally on users' devices instead of a centralized system, NanoGPT reduces operational expenses and enhances privacy - two benefits that directly contribute to better cost management.

NanoGPT's approach shows how automation and AI-powered tools can align tenant costs with actual usage, making it easier for both providers and users to maintain financial control.

When your tenant base grows, the way you scale your infrastructure can significantly influence both performance and costs. The two primary scaling strategies - horizontal and vertical - offer distinct trade-offs that require thoughtful planning.

Horizontal scaling, often called "scaling out", involves adding more resource instances, like web servers or database shards. This method offers a higher capacity ceiling and greater flexibility as your tenant base expands. It’s often more cost-efficient because you can use multiple lower-cost instances instead of investing in expensive, high-performance hardware.

Vertical scaling, or "scaling up", boosts the power of existing resources by upgrading to higher service tiers - adding more CPU or RAM to a single instance. While simpler to implement, this approach has a clear limitation: you’re constrained by the maximum capacity of a single resource. Additionally, higher-tier services tend to be more expensive per unit of computing power.

Microsoft provides a clear recommendation for scaling efficiently:

"The goal of cost optimizing scaling is to scale up and out at the last responsible moment and to scale down and in as soon as it's practical."

In simpler terms, this means scaling out only when absolutely necessary and scaling back down as soon as demand decreases.

For multi-tenant systems, horizontal scaling is generally more cost-effective and reliable. Azure, for instance, supports up to 800 resources of the same type within a single resource group. For larger deployments, planning to scale across multiple resource groups becomes essential. Using a bin packing strategy - where tenants are allocated to resources in a way that maximizes utilization - can help you get the most out of your infrastructure before scaling out.
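Bin packing has many variants. First-fit decreasing is a common heuristic and is used here as an assumed choice, since the text does not prescribe one. The tenant demands and the capacity of 10 units in this Python sketch are hypothetical.

```python
def pack_tenants(demands: dict[str, float], capacity: float) -> list[dict[str, float]]:
    """First-fit decreasing: place the largest tenants first, each into the
    first resource with room, opening a new resource only when none fits."""
    bins: list[dict[str, float]] = []
    for tenant, demand in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        if demand > capacity:
            raise ValueError(f"{tenant} needs {demand}, more than one resource holds")
        for assigned in bins:
            if sum(assigned.values()) + demand <= capacity:
                assigned[tenant] = demand
                break
        else:
            # No existing resource had room, so provision another one.
            bins.append({tenant: demand})
    return bins


# Hypothetical per-tenant demand in capacity units; each resource holds 10.
demands = {"a": 6, "b": 5, "c": 4, "d": 3, "e": 2}
for i, assigned in enumerate(pack_tenants(demands, capacity=10), start=1):
    print(f"resource {i}: {assigned} (used {sum(assigned.values())}/10)")
```

Packing resources completely full leaves no room for tenant growth or coinciding peaks, so in practice the capacity passed in would usually be set below the hard limit.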

Stateless components, such as application nodes without session dependencies, are much easier to scale horizontally than stateful ones. By offloading transient state to external services like Azure Managed Redis, you can ensure your application nodes remain stateless and ready to scale. Stateful components, however, often require session affinity, complicating load balancing and limiting scaling options.

Once your infrastructure is scalable, the next step is aligning subscription plans to tenant usage for better cost management.

As tenant numbers grow, your subscription plans need to reflect actual usage to keep costs in check. Tiered pricing models, such as Basic, Pro, and Premium, or hybrid pricing approaches, align infrastructure costs with tenant needs. This ensures that revenue grows predictably with your expanding user base. Higher-tier plans can support more expensive, resilient infrastructure by offering enhanced service guarantees, like 99.99% uptime instead of 99.9%.

The pricing structure itself is equally important. Consumption-based (pay-as-you-go) pricing ties revenue directly to infrastructure usage, but it can lead to unpredictable income. Flat-rate pricing, on the other hand, simplifies budgeting for customers but risks heavy users consuming more resources than expected. Many providers address this by combining a base fee with usage overages, creating a hybrid model that stabilizes revenue while capturing value from growth.
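The hybrid model reduces to a small formula: the base fee plus any usage beyond the included allowance, billed per unit. The plan figures in this Python sketch are invented for illustration.

```python
def hybrid_invoice(base_fee: float, included_units: int,
                   overage_rate: float, units_used: int) -> float:
    """Base fee covers `included_units`; usage beyond that is billed per unit."""
    overage_units = max(0, units_used - included_units)
    return round(base_fee + overage_units * overage_rate, 2)


# Hypothetical plan: $99 base, 10,000 API calls included, $0.005 per extra call.
print(hybrid_invoice(99.0, 10_000, 0.005, 8_000))    # under the allowance
print(hybrid_invoice(99.0, 10_000, 0.005, 25_000))   # 15,000 calls of overage
```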

Monitoring per-tenant cost of goods sold (COGS) is critical to identifying tenants who might be eroding your margins. For larger tenants, capacity reservations - where they pay upfront for a baseline level of usage in exchange for discounts - can provide predictable revenue streams, making it easier to fund infrastructure investments.

Managing costs in a multi-tenant environment requires ongoing visibility, smart automation, and timely actions. Start by establishing a consistent system for tagging assets - such as by tenant ID, workload, or department - and organize resources hierarchically to ensure accurate tracking of consumption.

Consider using showback or chargeback models to allocate resource costs to specific teams effectively. Set budget alerts at key thresholds like 90%, 100%, and 110%, paired with forecast alerts to stay ahead of potential overspending. Automation tools can further streamline this process by detecting anomalies, enforcing tagging policies, and addressing usage spikes. Regularly decommission unused resources and adjust instance sizes to match real demand, ensuring resources are used efficiently.

To take it a step further, align your pricing model with your cost structure, and focus your tracking and optimization efforts on the components that drive the bill. If a service like Amazon S3 makes up just 1% of total spend, tenant-level tracking for it is unlikely to pay off. Continuous optimization - not occasional reviews - is key to sustainable cost management. By combining clear visibility, automated controls, and accountability, you can scale your operations efficiently while protecting your margins.

To keep track of and manage costs for each tenant effectively, start by tagging all cloud resources - like EC2 instances, S3 buckets, or Azure VMs - with a unique identifier for each tenant. This tagging system lets you link usage metrics, such as compute time, storage, or network traffic, directly to individual tenants. Then, use your cloud provider's cost management tools to allocate expenses based on these tags and create detailed reports for each tenant. For shared resources, distribute costs fairly, using a method like splitting expenses proportionally based on usage.

Set up dashboards and alerts within your billing tools to keep an eye on tenant-specific spending in real-time. Activate cost allocation tags, establish tenant-specific budgets, and configure alerts for unexpected usage spikes so you can respond quickly. Additionally, export usage data to a centralized analytics platform to examine trends, compare tenant activities, and fine-tune your pricing strategies. By combining automated tagging, accurate cost allocation, and proactive monitoring, you’ll maintain clear and precise cost management for all tenants.

To avoid over-provisioning, start by tagging all resources by workload, tenant, and environment. This step links usage to specific business units or cost centers, giving you clear cost visibility. Pair this with a cost-allocation model to create detailed reports that show teams exactly how their resource usage translates into financial impact.

Next, focus on right-sizing and auto-scaling. Monitor key metrics like CPU, memory, and I/O usage, and set automated policies to scale resources up or down as demand changes. Regularly review your resource allocation - whether monthly or quarterly - and adjust instance sizes to better match actual usage patterns. Using consumption-based pricing models and applying cost-saving strategies can also help minimize waste.

Lastly, enforce quota limits and approval workflows for new resource requests. By requiring justification for provisioning, you can ensure that decisions align with both technical requirements and budgetary goals. When combined, these practices make it easier to control costs without compromising performance for tenants.

Automation and AI have become key players in keeping costs under control on multi-tenant platforms. Tools like budget alerts, usage-based tagging, and real-time reporting make it easier to spot overspending and address it right away. For example, you can scale down unused resources or implement cost-saving measures without delay. These capabilities not only help manage expenses but also ensure that operations run smoothly.

AI takes this a step further by analyzing usage trends, predicting future demand, and flagging unusual activity. It can suggest practical adjustments, like resizing resources to better fit needs or moving workloads to more affordable solutions. Together, automation and AI give tenants a clear, real-time view of their spending while enabling providers to apply cost-saving policies automatically. The result? A system that balances performance and budget control, all while staying scalable and efficient.