Aug 4, 2025

Large Language Models have a fundamental limitation: the context window. When conversations get long, models become slow, lose track, or error out entirely.

Context Memory solves this problem. It keeps conversations and coding sessions super snappy and allows them to continue indefinitely while maintaining full awareness of the entire conversation history.

Current memory solutions like ChatGPT's memory store general facts but miss something critical: the ability to recall specific events at the right level of detail.

Without this, you get:

Context Memory creates a hierarchical structure of your conversation:

Here's an example from a coding session:

    Token estimation function refactoring
    |-- Initial user request
    |-- Refactoring to support integer inputs
    |-- Error: "exceeds the character limit"
    |   +-- Fixed by changing test params from strings to integers
    +-- Variable name refactoring

When you ask "What errors did we encounter?", Context Memory expands the relevant section while keeping the other parts collapsed. The model you're using (such as ChatGPT or Claude) gets exactly the detail it needs without information overload.

For Developers:

For Agentic Use Cases:

For Roleplay & Storytelling:

For Conversations:

Turn on Context Memory as early as possible in your conversation. Context Memory indexes your conversation progressively with each request, building up a comprehensive understanding. This means:

The earlier you enable it, the more complete your memory will be.

Add :memory to any model name:

    curl -X POST https://nano-gpt.com/api/v1/chat/completions \
      -H "Authorization: Bearer $NANOGPT_API_KEY" \
      -H "Content-Type: application/json" \
      -d '{
        "model": "gpt-4o-mini:memory",
        "messages": [
          { "role": "user", "content": "Hi" },
          { "role": "assistant", "content": "Hi there! How are you?" },
          { "role": "user", "content": "Please help me set up...." }
        ]
      }'

Or use the memory header:

    curl -X POST https://nano-gpt.com/api/v1/chat/completions \
      -H "Authorization: Bearer $NANOGPT_API_KEY" \
      -H "Content-Type: application/json" \
      -H "memory: true" \
      -d '{
        "model": "gpt-4o",
        "messages": [
          { "role": "user", "content": "Hi" },
          { "role": "assistant", "content": "Hi there! How are you?" },
          { "role": "user", "content": "Please help me set up...." }
        ]
      }'

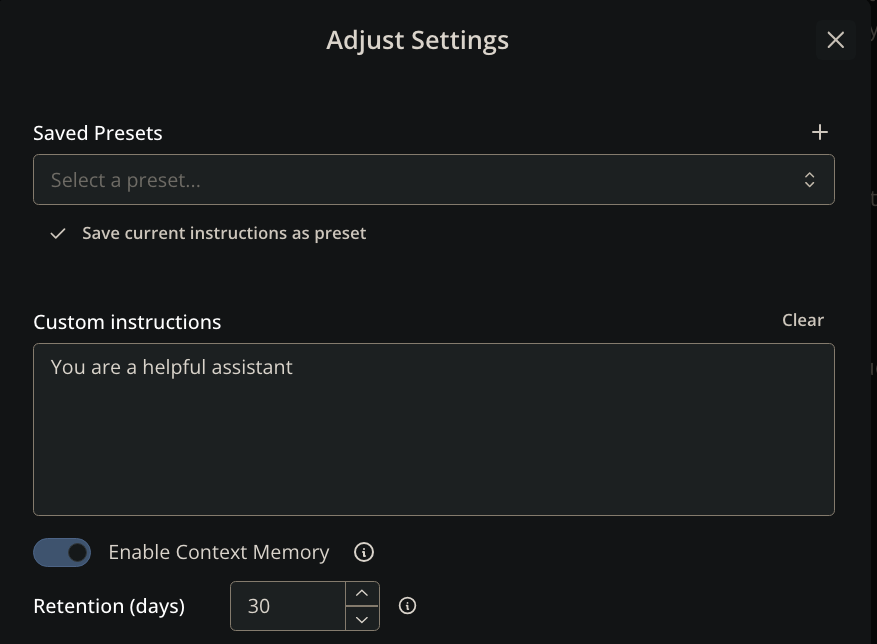

By default, Context Memory retains your compressed chat state for 30 days. Retention is rolling and based on the conversation's last update: each new message resets the timer, and the thread expires N days after its last activity, where N is the configured retention period. You can configure retention from 1 to 365 days:

Model suffix :memory-<days>:

    curl -X POST https://nano-gpt.com/api/v1/chat/completions \
      -H "Authorization: Bearer $NANOGPT_API_KEY" \
      -H "Content-Type: application/json" \
      -d '{
        "model": "gpt-4o-mini:memory-90",
        "messages": [
          { "role": "user", "content": "Persist this conversation for 90 days" }
        ]
      }'
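When scripting requests, the 1 to 365 day range can be checked locally before the suffix is built. The following is a minimal sketch in POSIX shell; the function name `memory_model` is hypothetical and not part of the API, and the service may apply its own validation that differs from this.

```shell
# Hypothetical helper (not part of the API): build a model name with a
# Context Memory suffix.
# Usage: memory_model <base-model> [retention-days]
memory_model() {
  base=$1
  days=${2:-}
  if [ -z "$days" ]; then
    # No retention given: plain suffix, so the documented 30-day default applies.
    printf '%s:memory\n' "$base"
    return 0
  fi
  case $days in
    *[!0-9]*)
      # Reject anything that is not a whole number.
      echo "retention must be a whole number of days" >&2
      return 1
      ;;
  esac
  if [ "$days" -lt 1 ] || [ "$days" -gt 365 ]; then
    # The documented range is 1 to 365 days.
    echo "retention must be between 1 and 365 days" >&2
    return 1
  fi
  printf '%s:memory-%s\n' "$base" "$days"
}
```

For example, `memory_model gpt-4o-mini 90` prints `gpt-4o-mini:memory-90`, which can then be placed in the "model" field of the request body.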

Headers memory: true and memory_expiration_days: <1..365>:

    curl -X POST https://nano-gpt.com/api/v1/chat/completions \
      -H "Authorization: Bearer $NANOGPT_API_KEY" \
      -H "Content-Type: application/json" \
      -H "memory: true" \
      -H "memory_expiration_days: 45" \
      -d '{
        "model": "gpt-4o-mini",
        "messages": [
          { "role": "user", "content": "Retain for 45 days via header" }
        ]
      }'

Note: If both suffix and header are provided, the header value for memory_expiration_days takes precedence for retention. Combined features still follow suffix parsing (e.g., :online).
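The precedence rule and the rolling expiry can be mirrored on the client side, for example to predict when a thread will lapse. This is only a sketch of the documented behavior, not the service's implementation: the function names `effective_retention_days` and `expiry_epoch` are hypothetical, the suffix parsing is an assumption about how the model string is laid out, and the sketch assumes memory is already enabled for the request.

```shell
# Illustrative only: mirrors the documented precedence rule locally.
# Usage: effective_retention_days <model-name> [memory_expiration_days value]
effective_retention_days() {
  model=$1
  header_days=${2:-}
  if [ -n "$header_days" ]; then
    # A header value takes precedence over any suffix.
    echo "$header_days"
    return 0
  fi
  case $model in
    *:memory-*)
      # Keep what follows ":memory-", then drop any later ":feature" suffix.
      days=${model##*:memory-}
      echo "${days%%:*}"
      ;;
    *)
      # No explicit retention: documented default of 30 days.
      echo 30
      ;;
  esac
}

# Rolling expiry: N days after the last update, in Unix epoch seconds.
# Usage: expiry_epoch <last-update-epoch> <retention-days>
expiry_epoch() {
  last_update=$1
  days=$2
  echo $(( last_update + days * 86400 ))
}
```

With the suffix `:memory-90` and a `memory_expiration_days` value of 45, `effective_retention_days gpt-4o-mini:memory-90 45` prints 45. Because retention is rolling, the expiry should be recomputed from the time of the latest message rather than from the start of the conversation.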

Combine with web search:

    # Memory + Web Search
    curl -X POST https://nano-gpt.com/api/v1/chat/completions \
      -H "Authorization: Bearer $NANOGPT_API_KEY" \
      -H "Content-Type: application/json" \
      -d '{
        "model": "gpt-4o:online:memory",
        "messages": [
          { "role": "user", "content": "Hi" },
          { "role": "assistant", "content": "Hi there! How are you?" },
          { "role": "user", "content": "Please search for and help me set up...." }
        ]
      }'

Context Memory uses your conversation messages as the identifier. This means:

The system compresses long histories without losing information. Everything is preserved at the appropriate level of detail.

What happens behind the scenes:

This means you can have conversations with millions of tokens of history, but the AI model only sees the intelligently compressed version that fits within its context window.

Privacy with Polychat: When using Context Memory, your conversation data is processed by Polychat's API, which uses Google/Gemini in the background with maximum privacy settings.

You can review Polychat's full privacy policy at https://polychat.co/legal/privacy.

Important privacy details:

Retention is configurable with :memory-<days> or the memory_expiration_days header.

Context Memory is available now:

Add :memory to any model name, or send the memory: true header. Combine with :online for web search.

The Usage page shows your Memory usage, token counts, and costs. For step-by-step examples, use the code snippets above.

Whether you're coding, researching, or having long conversations, Context Memory ensures your AI remembers everything that matters.