Jan 5, 2026

Managing tasks in Edge AI systems is about balancing limited resources (like CPU and battery) with strict deadlines. Why? Because tasks like face recognition or voice commands need to happen fast and on time - especially in critical areas like autonomous vehicles or augmented reality. Missing a deadline isn’t just inconvenient - it can lead to system failures or safety risks.

The bottom line? Scheduling in Edge AI is about meeting deadlines while working within tight resource constraints. Whether it’s autonomous driving or IoT sensors, the right scheduling approach can make or break the system’s performance.

In recent years, deadline-aware scheduling for edge AI has seen significant progress, with researchers introducing algorithms and frameworks that go beyond theory to deliver measurable results in practical settings.

One notable development came in December 2019 with the DeEdge testbed, built to evaluate the Dedas algorithm using real-world Google cluster traces. Running latency-sensitive applications such as face matching and IoT sensor monitoring, the testbed showed that Dedas could reduce the deadline miss ratio by up to 60% while maintaining stable online operation. The result suggested that a greedy scheduling approach combined with task-replacement logic handles the unpredictable nature of edge environments better than traditional methods, and it became a reference point for later work on deadline-aware scheduling.
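The published internals of Dedas are not reproduced here, so the following is only a minimal sketch of the general idea described above: a greedy online scheduler that admits an arriving task when the queue stays feasible in earliest-deadline-first (EDF) order, and otherwise tries to replace an already admitted task. The single-server model, the `Task` fields, and the "evict the longest task that makes room" rule are illustrative assumptions, not the actual Dedas algorithm.

```python
from dataclasses import dataclass

# Hypothetical sketch of greedy admission with task replacement;
# not the published Dedas algorithm.


@dataclass
class Task:
    name: str
    duration: float  # estimated processing time on this edge server
    deadline: float  # absolute deadline


def edf_feasible(tasks, now=0.0):
    """True if running the tasks back to back in EDF order meets every deadline."""
    t = now
    for task in sorted(tasks, key=lambda x: x.deadline):
        t += task.duration
        if t > task.deadline:
            return False
    return True


def admit(queue, new, now=0.0):
    """Greedy admission with one-for-one replacement (hypothetical policy).

    Returns (updated_queue, dropped_task). dropped_task is None when nothing
    is dropped, the newcomer when it is rejected, or an evicted task.
    """
    if edf_feasible(queue + [new], now):
        return queue + [new], None
    # Try evicting longer tasks first: swapping a long task for a shorter
    # newcomer frees the most capacity.
    for victim in sorted(queue, key=lambda x: x.duration, reverse=True):
        if victim.duration <= new.duration:
            break  # remaining candidates are no longer than the newcomer
        candidate = [t for t in queue if t is not victim] + [new]
        if edf_feasible(candidate, now):
            return candidate, victim
    return queue, new  # reject the newcomer


queue = []
for task in [Task("face", 4, 5), Task("sensor", 1, 3), Task("video", 1, 2)]:
    queue, dropped = admit(queue, task)
    print(f"{task.name}: dropped {dropped.name}" if dropped else f"{task.name}: admitted")
```

Here the urgent `video` task cannot fit alongside the long `face` task, so the sketch evicts `face` to keep the other two on time. Each feasibility check sorts the queue, so it costs O(n log n) per arrival.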

Fast-forward to October 2023, and another breakthrough emerged with the MARINA mechanism, developed by Joahannes B. D. da Costa and his team. Designed for vehicular edge computing (VEC) - a domain where vehicles frequently move in and out of network coverage - MARINA leverages an LSTM architecture to predict resource availability in Vehicular Clouds based on mobility patterns. Simulations showed that MARINA significantly increased the number of scheduled tasks while reducing both system latency and monetary costs. As da Costa explained:

The tasks' deadline constraints can be better observed and considered from a resource availability estimation in the VCs, making it possible to infer the processing time and the service probability to increase the scheduled and completed tasks rate.

Deep Reinforcement Learning (DRL) has also proven effective in managing dependent tasks. A DDPG-based algorithm demonstrated its ability to reduce the deadline violation ratio by 60.34%–70.3% compared to baseline methods, particularly in handling DAG-modeled dependent tasks, a common challenge in autonomous driving systems.

Another key innovation is the Edge Generation Scheduling (EGS) framework, which minimizes DAG width through iterative edge generation while respecting deadline constraints. By integrating Graph Representation Neural Networks, EGS has outperformed traditional Mixed-Integer Linear Programming (MILP) methods, scheduling the same task sets with fewer processors. Meanwhile, new job scheduling algorithms have cut computational overhead from factorial complexity to linearithmic O(n log n) for static tasks and O(n) for online arrivals.

The field is increasingly embracing AI-driven prediction models to anticipate resource fluctuations before they occur. Techniques like Recurrent Neural Networks (RNNs) and LSTMs are becoming essential for forecasting vehicle mobility and resource availability in dynamic environments. This forward-looking approach enables smarter task placement decisions, reducing disruptions and improving deadline adherence.
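Training an RNN or LSTM is beyond a short example, so the sketch below swaps in plain exponential smoothing (an exponentially weighted moving average) as a stand-in forecaster. It is not an LSTM; it only illustrates the forward-looking idea of placing a task on the node whose forecast free capacity covers it, rather than on the node that looks best on average. Node names, `alpha`, and the "most forecast headroom" rule are hypothetical.

```python
# Hypothetical sketch: exponential smoothing as a stand-in for an LSTM forecaster.


def ewma_forecast(history, alpha=0.5):
    """One-step-ahead forecast via exponential smoothing (stand-in for an LSTM)."""
    if not history:
        raise ValueError("history must be non-empty")
    estimate = history[0]
    for observation in history[1:]:
        # Recent observations get weight alpha; older ones decay geometrically.
        estimate = alpha * observation + (1 - alpha) * estimate
    return estimate


def pick_node(free_cpu_history, demand, alpha=0.5):
    """Return the node with the most forecast free CPU that still covers `demand`.

    free_cpu_history maps a node name to past observations of its free CPU share.
    Returns None when no node is forecast to have enough headroom.
    """
    forecasts = {node: ewma_forecast(h, alpha) for node, h in free_cpu_history.items()}
    eligible = {node: f for node, f in forecasts.items() if f >= demand}
    if not eligible:
        return None
    return max(eligible, key=eligible.get)


history = {
    "rsu-1": [0.6, 0.4, 0.2],   # roadside unit whose free capacity is shrinking
    "car-7": [0.3, 0.35, 0.4],  # vehicle whose free capacity is growing
}
print(pick_node(history, demand=0.3))  # car-7
```

A plain average of each history (0.4 versus 0.35) would favor `rsu-1`; the trend-weighted forecast (0.35 versus 0.3625) picks `car-7` instead.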

Another trend gaining momentum is on-demand inference scheduling with multi-exit Deep Neural Networks (DNNs). These systems allow edge devices to adjust the quality of service based on the remaining time, striking a balance between inference accuracy and meeting deadlines. Yechao She, a researcher in this area, emphasizes:

To provide edge inference service with quality assurance, a task scheduler is necessary to coordinate the computational resources of the edge server with the demands of the tasks.
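As an illustration only, the sketch below shows one simple way such a scheduler could trade accuracy for time: serve queued requests in EDF order on a single server and give each one the deepest exit that still finishes before its deadline. The exit latencies and accuracies are invented numbers, and the greedy rule is an assumption, not the scheduler from the cited research.

```python
from dataclasses import dataclass

# Hypothetical sketch of deadline-driven exit selection for a multi-exit DNN.


@dataclass
class InferenceTask:
    name: str
    deadline: float  # absolute deadline in ms
    exits: list      # (cumulative latency ms, accuracy) per exit, shallowest first


def assign_exits(tasks, now=0.0):
    """Greedy EDF pass: each task gets the deepest exit that meets its deadline.

    Returns {task name: exit index, or None if even the first exit is too late}.
    Assumes deeper exits always take longer.
    """
    plan, t = {}, now
    for task in sorted(tasks, key=lambda x: x.deadline):
        chosen = None
        for i, (latency, _accuracy) in enumerate(task.exits):
            if t + latency > task.deadline:
                break  # deeper exits take even longer
            chosen = i
        plan[task.name] = chosen
        if chosen is not None:
            t += task.exits[chosen][0]  # the server is busy until this exit returns
    return plan


EXITS = [(10, 0.70), (25, 0.82), (40, 0.90)]  # hypothetical three-exit DNN
tasks = [InferenceTask("a", 50, EXITS), InferenceTask("b", 60, EXITS),
         InferenceTask("c", 45, EXITS)]
print(assign_exits(tasks))  # {'c': 2, 'a': 0, 'b': 0}
```

Because the pass is greedy, `c` takes the most accurate exit and the later requests fall back to the shallowest one; a fuller scheduler might downgrade `c` to spread accuracy more evenly.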

Decentralized offloading is also becoming popular, shifting from centralized dispatchers to agent-based models on mobile devices. This approach reduces decision-making latency, enabling quicker responses to changing conditions. Additionally, researchers are incorporating monetary cost models into their scheduling systems. This ensures that these systems remain financially efficient, a critical factor in pay-as-you-go cloud environments.

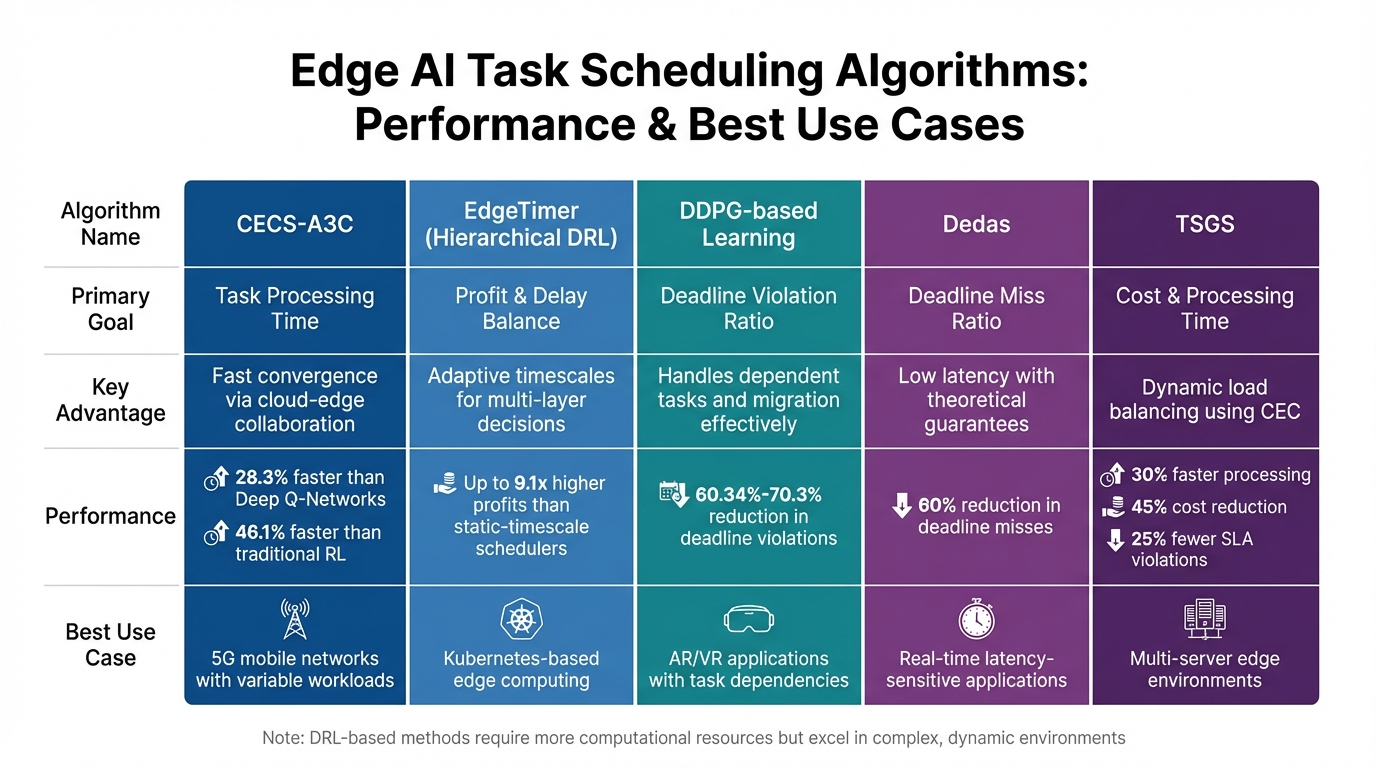

Comparison of Edge AI Task Scheduling Algorithms: Performance Metrics and Use Cases

Deep Reinforcement Learning (DRL) algorithms approach task scheduling by framing task arrivals and resource availability as a Markov Decision Process (MDP). This allows them to learn optimal scheduling strategies over time.

For instance, CECS-A3C, a DRL algorithm, has demonstrated impressive performance in 5G networks, cutting processing time by 28.3% compared to Deep Q-Networks and 46.1% compared to traditional reinforcement learning methods.

Another noteworthy example is EdgeTimer, a hierarchical DRL framework introduced in June 2024 by researchers Yijun Hao and Shusen Yang. Designed for Kubernetes-based mobile edge computing, EdgeTimer breaks down scheduling decisions into smaller, manageable layers. When tested with production data, it delivered up to 9.1x higher profits than static-timescale schedulers, all while maintaining the same delay performance.

The DDPG-based algorithm described earlier fits here as well: its 60.34% to 70.3% reduction in the deadline violation ratio across different scenarios shows DRL’s potential for complex scheduling challenges, including dependent-task scheduling.
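To make the MDP framing concrete, the sketch below writes out a tiny offloading MDP and solves it exactly with value iteration. The state is the local queue backlog, the actions are "run locally" or "offload", and the reward penalizes delay, offloading cost, and deadline misses. Every number is an illustrative assumption. DRL schedulers such as those above learn an approximate policy from experience because real state spaces are far too large, and the transition model too uncertain, for this exact approach.

```python
# Toy offloading MDP; all constants are illustrative assumptions.
MAX_BACKLOG = 3      # jobs the local edge device can queue
DEADLINE_SLOTS = 2   # a job finishing later than this many slots misses its deadline
P_ARRIVAL = 0.5      # chance a background job arrives each slot
MISS_PENALTY = -10.0
OFFLOAD_COST = -2.5  # transfer delay plus monetary cost, in slot-equivalents
GAMMA = 0.9
LOCAL, OFFLOAD = 0, 1


def step_outcomes(backlog, action):
    """Return (reward, [(probability, next_backlog), ...]) for one time slot."""
    if action == LOCAL:
        finish = backlog + 1  # the new job waits behind the current backlog
        reward = MISS_PENALTY if finish > DEADLINE_SLOTS else -float(finish)
        after_action = min(finish, MAX_BACKLOG)
    else:
        reward = OFFLOAD_COST
        after_action = backlog
    drained = max(after_action - 1, 0)  # the device completes one job per slot
    return reward, [
        (P_ARRIVAL, min(drained + 1, MAX_BACKLOG)),
        (1 - P_ARRIVAL, drained),
    ]


def q_value(values, backlog, action):
    """Expected discounted return of taking `action` in state `backlog`."""
    reward, outcomes = step_outcomes(backlog, action)
    return reward + GAMMA * sum(p * values[s] for p, s in outcomes)


def value_iteration(iterations=1000):
    """Repeatedly apply the Bellman optimality update, then read off the greedy policy."""
    values = [0.0] * (MAX_BACKLOG + 1)
    for _ in range(iterations):
        values = [max(q_value(values, b, a) for a in (LOCAL, OFFLOAD))
                  for b in range(MAX_BACKLOG + 1)]
    policy = [max((LOCAL, OFFLOAD), key=lambda a: q_value(values, b, a))
              for b in range(MAX_BACKLOG + 1)]
    return values, policy


values, policy = value_iteration()
print(policy)  # [0, 1, 1, 1]: run locally only when the queue is empty
```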

DRL methods adapt and optimize well in dynamic environments, which makes them particularly suited to edge AI applications where complexity and variability are high; the tradeoff is that they demand significant computational resources. Next, we’ll look at serverless scheduling, which takes a different approach to managing these challenges.

Serverless architectures operate on the principle of treating tasks as ephemeral functions. This shifts the focus of scheduling from static resource allocation to dynamic, runtime-based adjustments. Traditional scheduling methods like Rate-Monotonic or Earliest Deadline First work well when task sets are predictable, offering strong guarantees for execution timing. However, these methods often fall short in handling the dynamic and unpredictable workloads typical of edge environments.
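Those guarantees rest on utilization tests that assume every task's worst-case execution time (WCET) and period are known in advance. The sketch below applies two classic results for independent periodic tasks with deadlines equal to periods on one preemptive core with negligible overhead: the Liu and Layland bound for Rate-Monotonic, which is sufficient but not necessary, and the utilization test for EDF, which is exact under these assumptions. The task sets are made up for illustration.

```python
# Classic uniprocessor schedulability tests; task sets are hypothetical.


def utilization(tasks):
    """Total utilization of (wcet, period) pairs."""
    return sum(wcet / period for wcet, period in tasks)


def rm_schedulable(tasks):
    """Liu-Layland test for Rate-Monotonic: passing guarantees schedulability,
    failing is inconclusive (an exact response-time analysis may still pass)."""
    n = len(tasks)
    if n == 0:
        return True
    return utilization(tasks) <= n * (2 ** (1 / n) - 1)


def edf_schedulable(tasks):
    """Exact test for preemptive EDF with implicit deadlines on one core."""
    return utilization(tasks) <= 1.0


light = [(1, 4), (1, 5), (1, 10)]   # U = 0.55
heavy = [(2, 5), (3, 10), (2, 10)]  # U = 0.90
for name, ts in [("light", light), ("heavy", heavy)]:
    print(name, rm_schedulable(ts), edf_schedulable(ts))
```

`heavy` fails the Liu and Layland bound yet passes the EDF test; because its periods are harmonic, an exact analysis would accept it under Rate-Monotonic too, which is why a failed bound is only inconclusive. Both tests need fixed, known WCETs and periods, an assumption that rarely holds for dynamic and unpredictable edge workloads.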

Serverless scheduling, on the other hand, thrives in such conditions by leveraging feedback control and predictive modeling. A good example is the Dynamic Time-Sensitive Scheduling algorithm with Greedy Strategy (TSGS). This approach achieves significant improvements, reducing average processing time by 30%, cutting costs by 45%, and lowering SLA violations by 25%. TSGS relies on Comprehensive Execution Capability (CEC) to dynamically balance loads.
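The source does not define how Comprehensive Execution Capability is computed, so the sketch below substitutes a simple, hypothetical proxy: each task, taken in earliest-deadline order, goes to the server with the earliest predicted finish time given that server's speed and current backlog, and is flagged as a likely SLA violation if no server can finish it in time. Server names, speeds, and task sizes are invented; this is not the TSGS algorithm itself.

```python
# Hypothetical greedy dispatcher; a stand-in for TSGS's CEC-based load balancing.


def greedy_dispatch(tasks, servers):
    """Assign tasks in deadline order to the server that would finish them first.

    tasks:   list of (name, work, deadline); work is in seconds at unit speed
    servers: dict of server name -> relative speed (2.0 = twice as fast)
    Returns (assignment {task: server}, list of tasks that would miss their deadline).
    """
    free_at = {s: 0.0 for s in servers}  # time each server next becomes idle
    assignment, violations = {}, []
    for name, work, deadline in sorted(tasks, key=lambda t: t[2]):
        finish = {s: free_at[s] + work / speed for s, speed in servers.items()}
        best = min(finish, key=finish.get)  # earliest predicted completion
        if finish[best] > deadline:
            violations.append(name)  # a real system might offload or reject here
            continue
        assignment[name] = best
        free_at[best] = finish[best]
    return assignment, violations


servers = {"edge-a": 1.0, "edge-b": 2.0}
tasks = [("t1", 4, 3), ("t2", 2, 4), ("t3", 6, 5), ("t4", 4, 5)]
print(greedy_dispatch(tasks, servers))
# ({'t1': 'edge-b', 't2': 'edge-a', 't3': 'edge-b'}, ['t4'])
```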

Energy efficiency is another critical aspect of serverless scheduling. The Flexible Invocation-Based Deep Reinforcement Learning (FiDRL) framework addresses this by incorporating Dynamic Voltage and Frequency Scaling (DVFS). FiDRL reduces energy overhead by 55.1%, though it comes with a tradeoff: adaptivity can weaken the strict timing guarantees required by traditional real-time systems. As researcher Abdelmadjid Benmachiche puts it:

While adaptivity enhances responsiveness to change, it inherently weakens the strong timing guarantees that traditional real-time systems rely on for safety certification.
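The tradeoff DVFS exposes can be seen with the textbook CMOS model, in which dynamic energy per clock cycle scales with the square of the supply voltage and runtime scales inversely with frequency. The sketch below is a simplification that ignores static (leakage) power and is not FiDRL: it picks the lowest-energy operating point that still meets a task's deadline. The operating-point table and capacitance value are hypothetical.

```python
# Hypothetical DVFS operating points: (frequency in GHz, supply voltage in V).
OPERATING_POINTS = [(0.6, 0.80), (1.0, 0.90), (1.4, 1.00), (1.8, 1.10)]
C_EFF = 1.0e-9  # assumed effective switched capacitance (F)


def pick_operating_point(cycles, time_budget_s):
    """Return (freq_ghz, runtime_s, energy_j) for the cheapest point meeting the deadline.

    Dynamic-energy model only: energy = C * V^2 * cycles, runtime = cycles / f.
    Returns None when no frequency meets the deadline.
    """
    best = None
    for f_ghz, volts in OPERATING_POINTS:
        runtime = cycles / (f_ghz * 1e9)
        if runtime > time_budget_s:
            continue  # too slow to meet the deadline
        energy = C_EFF * volts ** 2 * cycles
        if best is None or energy < best[2]:
            best = (f_ghz, runtime, energy)
    return best


print(pick_operating_point(1e9, 1.0)[0])  # 1.0
```

With a 1-second budget the 0.6 GHz point is too slow, so the sketch settles on 1.0 GHz, the slowest feasible point. Because leakage power is ignored, slower is always cheaper here; on real hardware, finishing fast and then idling can sometimes use less energy.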

Different scheduling algorithms cater to varying needs, making it essential to choose the right approach based on the specific requirements of your edge AI deployment. The table below summarizes key methods and their strengths:

| Algorithm/Method | Primary Goal | Key Advantage | Best Use Case |
|---|---|---|---|
| CECS-A3C | Task Processing Time | Fast convergence via cloud-edge collaboration | 5G mobile networks with variable workloads |
| EdgeTimer (Hierarchical DRL) | Profit & Delay Balance | Adaptive timescales for multi-layer decisions | Kubernetes-based edge computing |
| DDPG-based Learning | Deadline Violation Ratio | Handles dependent tasks and migration effectively | AR/VR applications with task dependencies |
| Dedas (Deadline-Aware Scheduling) | Deadline Miss Ratio | Low latency with theoretical guarantees | Real-time latency-sensitive applications |
| TSGS | Cost & Processing Time | Dynamic load balancing using CEC | Multi-server edge environments |

The choice of scheduling method depends on your deployment’s specific constraints. DRL-based approaches excel in handling complex task dependencies, making them ideal for applications like autonomous driving or augmented reality. For environments where cost is a key concern, methods like TSGS or EdgeTimer shine by optimizing financial efficiency. Meanwhile, CECS-A3C is a strong candidate for unpredictable 5G network scenarios.

However, DRL-based methods require more computational resources than traditional schedulers, because the learning agents themselves consume significant memory and processing power. This makes them less suitable for the most resource-constrained edge devices, and traditional methods may still be the better choice for simpler, more predictable workloads. As one IEEE report puts it, the broader problem remains open:

Edge computing has become the key technology of reducing service delay and traffic load in 5G mobile networks. However, how to intelligently schedule tasks in the edge computing environment is still a critical challenge.

Recent evaluations provide valuable insights into how deadline-aware scheduling algorithms perform when deployed in real-world edge AI systems.

In edge AI systems, periodic tasks often take the form of Directed Acyclic Graphs (DAGs), which are particularly common in industries like automotive and avionics. These tasks consist of interconnected operations that must be completed within strict time limits, making response-time bounds a critical factor.

Recent research has focused on minimizing the width of DAGs through iterative edge generation. This approach reduces the number of processors required and ensures that dependent tasks meet their deadlines. For applications like autonomous driving, where timing is crucial, maintaining predictable response times is essential to avoid delays that could jeopardize safety.
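A standard way to bound a DAG task's response time on m identical cores is Graham's list-scheduling bound, R <= len + (vol - len) / m, where len is the critical-path length and vol the total work; it holds for any work-conserving scheduler. The sketch below, which is not the EGS method, uses that bound to find the fewest cores that guarantee a deadline. The perception pipeline and its WCETs are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical DAG example; the bound is Graham's classic list-scheduling result.


def response_time_bound(wcet, preds, m):
    """Graham-style bound for one DAG task on m identical cores:
    R <= len + (vol - len) / m under any work-conserving scheduler.

    wcet:  dict of node -> worst-case execution time
    preds: dict of node -> list of predecessor nodes
    """
    graph = {node: preds.get(node, []) for node in wcet}  # include isolated nodes
    longest = {}  # longest path ending at each node, including the node itself
    for node in TopologicalSorter(graph).static_order():
        longest[node] = wcet[node] + max((longest[p] for p in graph[node]), default=0)
    length = max(longest.values())  # critical path: extra cores cannot beat this
    volume = sum(wcet.values())     # total work spread across the cores
    return length + (volume - length) / m


def min_cores(wcet, preds, deadline, max_cores=64):
    """Smallest core count whose bound meets the deadline, or None."""
    for m in range(1, max_cores + 1):
        if response_time_bound(wcet, preds, m) <= deadline:
            return m
    return None


wcet = {"camera": 2, "lidar": 3, "detect": 4, "track": 3, "fuse": 1}
preds = {"detect": ["camera"], "track": ["camera"], "fuse": ["detect", "track", "lidar"]}
print(response_time_bound(wcet, preds, 2))  # 10.0
print(min_cores(wcet, preds, deadline=9))   # 3
```

No core count can push the bound below the 7-unit critical path (camera, detect, fuse), so a 6-unit deadline is unreachable and `min_cores` returns None for it.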

Another key aspect is managing the balance between accuracy and meeting deadlines. Early-exit Deep Neural Networks allow schedulers to adjust the quality of results dynamically based on available time. In scenarios where resources are limited, this ensures that a timely - though potentially less accurate - result is delivered, avoiding the risk of missing critical deadlines altogether.

These considerations around timing and accuracy naturally tie into the economic challenges of managing multi-tier edge environments.

Multi-tier edge computing introduces the challenge of balancing performance with cost. One effective approach is the M-TEC framework, tested in September 2024 by Xiang Li and colleagues from Purdue on a commercial CBRS 4G network. This framework demonstrated that using smartphones and tablets as active nodes reduced latency by 8%. It emphasizes cost-effective edge-only scheduling, relying on cloud offloading only when local deadlines cannot be met.
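The edge-first policy described above can be sketched as a placement rule: among device and edge tiers whose predicted latency meets the deadline, pick the cheapest, and consider the cloud only when none qualifies. Tier names, latencies, and costs are hypothetical, and the rule is an illustration, not the M-TEC framework's actual scheduler.

```python
from dataclasses import dataclass

# Hypothetical edge-first placement rule; not the M-TEC scheduler.


@dataclass
class Tier:
    name: str
    latency_ms: float  # predicted end-to-end latency under current load
    cost: float        # hypothetical per-task cost
    is_cloud: bool = False


def place(tiers, deadline_ms):
    """Cheapest edge-side tier that meets the deadline; cloud only as a fallback."""
    for use_cloud in (False, True):
        feasible = [t for t in tiers
                    if t.is_cloud == use_cloud and t.latency_ms <= deadline_ms]
        if feasible:
            return min(feasible, key=lambda t: t.cost).name
    return None  # no tier meets the deadline


tiers = [Tier("phone", 120, 0.0), Tier("tablet", 80, 0.0),
         Tier("edge-server", 45, 0.2), Tier("cloud", 60, 1.0, is_cloud=True)]
print(place(tiers, deadline_ms=100))  # tablet
print(place(tiers, deadline_ms=50))   # edge-server
```

With a loose deadline the free tablet wins over the faster but paid edge server; the cloud is chosen only when every device and edge tier is predicted to be too slow.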

Further enhancing cost efficiency, the RL-MOTS framework leverages Deep Q-Networks to optimize energy usage and operational expenses. This method reduces energy consumption by 28% and improves cost efficiency by 20% compared to traditional approaches. Considering that data centers account for roughly 1% of global electricity consumption, these improvements are particularly impactful in reducing operational costs and environmental impact.

The scheduling methods we’ve explored bring real-world advantages to a variety of edge AI scenarios, each with unique challenges and trade-offs.

Mobile edge computing thrives on deadline-aware scheduling. For instance, DDPG-based algorithms can cut the deadline violation ratio by as much as 70.3%. In vehicular edge computing, LSTM-based predictions and hybrid regional controllers keep task execution smooth, even under fluctuating network coverage. This is especially vital for applications like augmented reality and real-time video processing, where even minor delays can disrupt the user experience.

The Dedas online algorithm demonstrates its value by cutting the deadline miss ratio by up to 60% while also reducing average task completion time. Meanwhile, preemption-aware scheduling systems excel in environments requiring fast decision-making, achieving a 99% completion rate for high-priority tasks.
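Preemption means a newly released urgent job can interrupt a less urgent one mid-execution. The unit-time simulation below shows the mechanism on a single core under fixed priorities; the job set, priorities, and deadlines are hypothetical, and it does not reproduce the 99% figure above.

```python
import heapq

# Hypothetical job set; illustrates preemptive fixed-priority scheduling on one core.


def simulate_preemptive(jobs):
    """Unit-time simulation of preemptive fixed-priority scheduling on one core.

    jobs: list of (name, release, duration, priority, deadline); a lower priority
    number means more urgent. Returns {name: finish time} in completion order.
    """
    pending = sorted(jobs, key=lambda j: j[1])  # by release time
    ready = []  # heap of (priority, release, name, remaining time)
    finish, t, i = {}, 0, 0
    while i < len(pending) or ready:
        while i < len(pending) and pending[i][1] <= t:  # admit newly released jobs
            name, release, duration, priority, _deadline = pending[i]
            heapq.heappush(ready, (priority, release, name, duration))
            i += 1
        if not ready:
            t = pending[i][1]  # core idles until the next release
            continue
        priority, release, name, remaining = heapq.heappop(ready)
        t += 1  # run the most urgent job for one unit, then re-decide (preemption point)
        if remaining > 1:
            heapq.heappush(ready, (priority, release, name, remaining - 1))
        else:
            finish[name] = t
    return finish


def missed(jobs, finish):
    """Names of jobs that completed after their deadline."""
    return [name for name, _rel, _dur, _prio, deadline in jobs if finish[name] > deadline]


jobs = [("log-upload", 0, 5, 2, 20), ("telemetry", 1, 3, 1, 12),
        ("threat-detect", 2, 2, 0, 5)]
finish = simulate_preemptive(jobs)
print(finish)                # {'threat-detect': 4, 'telemetry': 6, 'log-upload': 10}
print(missed(jobs, finish))  # []
```

Without preemption, a first-come-first-served core would run `log-upload` to completion first, and `threat-detect` would finish at time 10, well past its deadline of 5.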

Scheduling also plays a major role in improving energy efficiency for devices with limited power. Energy-harvesting systems, which rely on intermittent power sources, must balance low energy consumption with strict deadlines. The RL-MOTS framework, for example, reduces energy usage by up to 27% while still meeting deadline requirements through adaptive reward functions. Similarly, the Zygarde framework uses "imprecise computing" to schedule 9% to 34% more tasks and delivers up to 21% higher inference accuracy on batteryless devices.

"The sporadic nature of harvested energy, resource constraints of the embedded platform, and the computational demand of deep neural networks (DNNs) pose a unique and challenging real-time scheduling problem." - Bashima Islam and Shahriar Nirjon

Another key factor is the energy overhead caused by scheduling itself. The FiDRL framework highlights the importance of optimizing this overhead, reducing agent invocation energy by 55.1%. For ultra-constrained microcontrollers, it’s critical that the energy savings from the scheduler outweigh the energy it consumes.

Scheduling advancements also enhance security and efficiency in blockchain-enabled edge AI systems. Meeting deadlines is essential for time-sensitive tasks like consensus participation or threat detection, where delays can lead to stale data or system vulnerabilities. DRL-based methods help meet these deadlines, which is crucial for maintaining the integrity of blockchain operations.

Hybrid architectures further improve scalability by spreading scheduling decisions across regional controllers instead of relying on centralized systems. This reduces the burden of message exchanges and prevents overloading the network core. In blockchain systems, where node churn (e.g., vehicles moving out of range) can disrupt validation, LSTM-based resource prediction identifies stable nodes for task execution.

Efficiency is equally important for real-time blockchain tasks. Scheduling algorithms with linearithmic O(n log n) or linear O(n) time complexity ensure the scheduling process itself doesn’t become a bottleneck. Additionally, priority-based preemption mechanisms ensure critical security tasks are completed, even under heavy workloads, achieving a 99% completion rate for high-priority operations.

Dynamic task scheduling with deadlines is becoming a cornerstone for ensuring the reliability of edge AI systems. Recent research has shown that deep reinforcement learning (DRL)-based scheduling strategies can cut system deadline violation ratios by 60.34% to 70.3%, and online algorithms like Dedas have achieved up to a 60% reduction in deadline misses. Gains of this size go a long way toward separating dependable systems from those more likely to fail.

Looking ahead, the next step for researchers is to integrate network and computing resource optimizations. Future systems will need to coordinate these resources effectively, especially when managing complex task dependencies represented as Directed Acyclic Graphs (DAGs) and incorporating preemption for high-priority workloads. To keep up with rapidly changing real-time tasks, the schedulers themselves will need complexities as low as O(n) to O(n log n). For larger deployments, distributed approximation algorithms offer a scalable alternative, with less than a 10% performance drop compared to centralized methods.

Applications like autonomous vehicles, augmented reality, and energy-harvesting devices highlight the importance of meeting deadlines, since a single miss can compromise the entire system’s reliability. For developers and researchers, the takeaway is clear: DRL for task offloading, preemption-aware scheduling, and early-exit deep neural networks that balance quality and latency are key building blocks for state-of-the-art edge AI systems.

While scheduling in resource-limited environments remains an NP-hard problem, practical solutions are now delivering near-optimal real-time performance. As edge computing infrastructure evolves, these advancements in scheduling will open the door to applications and services that are only beginning to take shape.

Dedas is an online deadline-aware scheduling algorithm that combines a greedy scheduling approach with task-replacement logic; evaluated on the DeEdge testbed with Google cluster traces, it reduced the deadline miss ratio by up to 60%. MARINA targets vehicular edge computing: it uses an LSTM to predict resource availability in Vehicular Clouds from mobility patterns, which lets the scheduler estimate processing time and service probability. In simulations, MARINA increased the number of scheduled tasks while lowering both system latency and monetary cost.

AI models are essential for predicting resource availability - like compute power, memory, and bandwidth - in edge AI systems. Take Deep Reinforcement Learning (DRL) agents, for example. These models evaluate the current workload on edge nodes and forecast resource availability for upcoming time slots. This capability enables them to adjust task scheduling on the fly, ensuring deadlines are met, even in unpredictable environments.

On the other hand, Long Short-Term Memory (LSTM) networks excel at spotting temporal trends in resource usage, such as recurring spikes in CPU or bandwidth demand. These models are often leveraged for short-term forecasts, improving the precision of scheduling decisions. When DRL policies are paired with LSTM-based predictions, edge AI systems can fine-tune task placement and uphold performance, even for workloads where low latency is critical.

Meeting deadlines is non-negotiable for systems like autonomous vehicles and IoT devices because they handle tasks where timing is everything, often with direct consequences for safety and overall functionality. Autonomous vehicles, for instance, need to process sensor data in milliseconds to make split-second decisions. Similarly, IoT devices frequently work under tight latency constraints to deliver accurate and timely responses.

Studies show that deadline-aware scheduling algorithms substantially reduce missed deadlines, helping these systems consistently meet service-level agreements (SLAs) and deliver the reliability and performance these applications demand in edge-computing environments.