Feb 26, 2025

Fake news spreads quickly and can mislead millions, especially on social media platforms where false political content spreads three times faster than true news. AI is becoming a critical tool in detecting fake news, offering methods like Natural Language Processing (NLP), visual detection, and social pattern analysis. However, to build trust, AI systems must be explainable, showing users why specific content is flagged.

Key advancements in explainable AI (XAI) for fake news detection include:

Explainable tools like the xFake system and dEFEND demonstrate how transparency improves detection accuracy, user trust, and understanding. Challenges remain, such as biased data and high computational demands, but solutions like feature optimization and scalable models are paving the way for more effective systems.

With the rise of misinformation, explainable AI is essential for combating fake news while ensuring fairness and transparency.

Explainable AI (XAI) focuses on making AI decisions more transparent and easier to understand. Unlike black-box models that simply provide outputs, XAI reveals the reasoning behind its decisions. This is especially useful in detecting fake news, as it explains why content is flagged as suspicious.

"xAI proposes the development of machine learning techniques producing highly effective, explainable models which humans can understand, manage and trust" - Barredo Arrieta, A., et al.

These principles form the foundation for practical explanation methods, which are outlined below.

XAI employs various techniques to clarify how decisions are made. Here are the key methods used in fake news detection:

| Method | Purpose | Application in Fake News Detection |

|---|---|---|

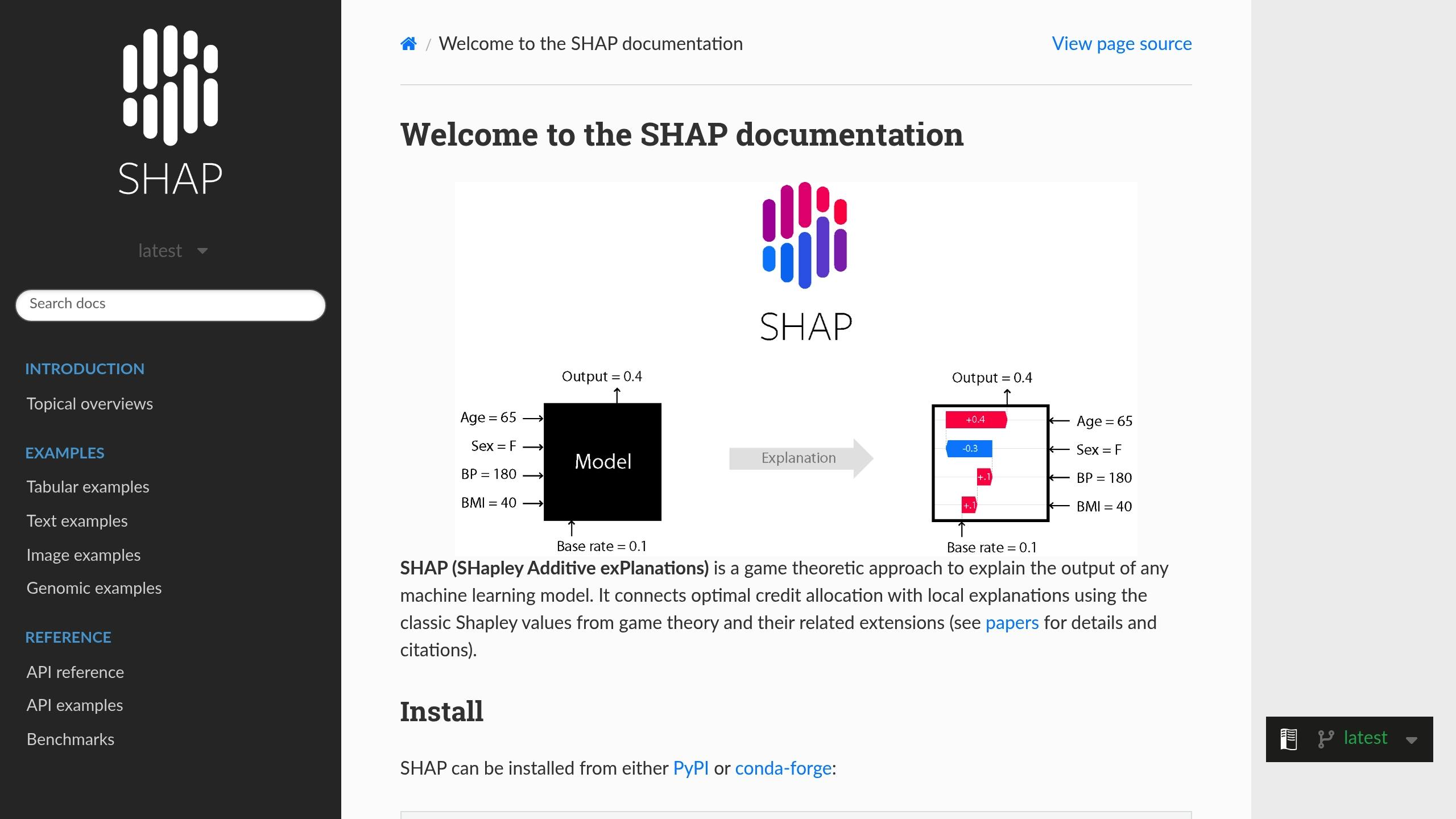

| SHAP (SHapley Additive Explanations) | Identifies feature importance | Pinpoints which parts of news content indicate misinformation |

| LIME (Local Interpretable Model-Agnostic Explanations) | Explains individual predictions | Highlights specific words or phrases that triggered detection |

| Attention Mechanisms | Focuses on relevant content areas | Visualizes suspicious patterns in text and metadata |

The xFake system applies these methods across three frameworks to analyze news from different angles. For instance, its PERT framework uses linguistic features and XGBoost classification, with perturbation-based analysis to measure feature importance.
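The perturbation idea can be sketched in a few lines. This is a minimal illustration, not xFake's implementation: the `linguistic_features` extractor, the hand-set `WEIGHTS`, and the baseline values are hypothetical stand-ins for a trained classifier such as XGBoost. Each feature is reset to a neutral baseline in turn, and the resulting change in the "fake" score is reported as that feature's importance.

```python
import math

# Hypothetical hand-set weights standing in for a trained classifier.
WEIGHTS = {"exclamations": 0.8, "all_caps_ratio": 2.0, "avg_word_len": -0.3}
BIAS = 0.5


def linguistic_features(text):
    """Extract a few simple linguistic features from raw text."""
    words = text.split()
    caps = sum(1 for w in words if len(w) > 1 and w.isupper())
    return {
        "exclamations": float(text.count("!")),
        "all_caps_ratio": caps / len(words) if words else 0.0,
        "avg_word_len": sum(len(w) for w in words) / len(words) if words else 0.0,
    }


def score(features):
    """Return a pseudo-probability that the text is fake (logistic model)."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def perturbation_importance(features, baseline):
    """Importance of a feature = score change when it is reset to its baseline."""
    full = score(features)
    importance = {}
    for name in features:
        perturbed = dict(features)
        perturbed[name] = baseline[name]
        importance[name] = full - score(perturbed)
    return importance


headline = "SHOCKING!!! Doctors HATE this secret cure"
feats = linguistic_features(headline)
# Assumed "neutral" values; in practice these might be dataset averages.
baseline = {"exclamations": 0.0, "all_caps_ratio": 0.0, "avg_word_len": 5.0}
ranked = sorted(perturbation_importance(feats, baseline).items(),
                key=lambda item: abs(item[1]), reverse=True)
for name, delta in ranked:
    print(f"{name}: {delta:+.3f}")
```

With these assumed weights, the exclamation count ranks first, which is the kind of signal an explanation interface could surface to a reader.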

XAI brings several improvements to fake news detection:

"Explainable AI offers a solution, ensuring that AI decisions are not just accepted but understood. Without transparency, AI risks reinforcing biases and eroding public confidence. The future of AI must prioritize explainability, turning black boxes into glass boxes. After all, if humans are expected to justify their judgments, why should AI be any different?" - Sarvotham Ramakrishna

Fake news detection systems rely on a mix of content analysis and social context tracking to identify misleading information effectively.

Here’s a breakdown of key framework components:

| Component | Purpose | Implementation Method |
|---|---|---|
| Content Analysis | Analyzes text and media for deceptive cues | Extracts important features for detection |
| Social Context | Monitors how news spreads | Uses network-based approaches |
| Named Entity Processing | Mitigates bias in detection | Categorizes and replaces entities via Named Entity Recognition (NER) |
| Feature Optimization | Boosts model accuracy | Removes irrelevant elements like URLs and emoticons |

For instance, a RoBERTa-based model showed a 39.32% improvement in F1-score after applying feature optimization and entity replacement.
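Feature optimization and entity replacement can be sketched as a small preprocessing step. The example below is an assumption-laden illustration, not the pipeline behind the reported result: the regular expressions cover only common cases, and `ENTITY_TYPES` is a hypothetical lookup table standing in for the output of a real NER model.

```python
import re

URL_RE = re.compile(r"https?://\S+|www\.\S+")
HASHTAG_RE = re.compile(r"#\w+")
# A small set of ASCII emoticons; a real pipeline would also handle emoji.
EMOTICON_RE = re.compile(r"(?<!\w)[:;=][-']?[()DPp](?!\w)")

# Hypothetical lookup standing in for an NER model's output.
ENTITY_TYPES = {
    "Jane Doe": "PERSON",
    "Acme Corp": "ORG",
    "Springfield": "LOC",
}


def strip_noise(text):
    """Remove URLs, hashtags, and emoticons, then collapse whitespace."""
    for pattern in (URL_RE, HASHTAG_RE, EMOTICON_RE):
        text = pattern.sub(" ", text)
    return re.sub(r"\s+", " ", text).strip()


def replace_entities(text, entity_types):
    """Swap each known entity mention for its category placeholder."""
    # Longest names first so a longer mention is not split by a shorter one.
    for name in sorted(entity_types, key=len, reverse=True):
        text = re.sub(re.escape(name), f"[{entity_types[name]}]", text)
    return text


raw = "Jane Doe of Acme Corp lied in Springfield :) #scandal https://example.com/x"
clean = replace_entities(strip_noise(raw), ENTITY_TYPES)
print(clean)  # [PERSON] of [ORG] lied in [LOC]
```

Replacing names with category placeholders keeps the sentence structure while preventing a model from learning that a particular person or organization is itself a signal of fake news.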

As these systems develop, they encounter challenges that demand specific solutions.

One major issue is data quality. Content-based methods often struggle with biased training data. Removing non-informative elements, such as hashtags or URLs, helps models focus on meaningful content.

Another challenge is the computational demand of transformer-based models. While they deliver strong results, traditional classifiers with well-curated feature sets can offer similar performance with much lower resource requirements.

Scalability is also a pressing concern. Detection systems need to work across different platforms and languages. Solutions include model-agnostic explanation methods, synthetic data for testing, and localized interpretability techniques.

Advanced AI tools like NanoGPT enhance news verification by leveraging explainable AI for better results.

NanoGPT offers several advantages:

"Synthetic data enables a collaborative, broad setting, without putting the privacy of customers at risk. It's a powerful, novel tool to support the development and governance of fair and robust AI." - Jochen Papenbrock, Manager at NVIDIA, and Alexandra Ebert, Chief Trust Officer of MOSTLY AI

These advancements make NanoGPT a practical choice for improving fake news detection systems.

Recent advancements in explainable AI are reshaping how fake news is detected. For instance, dual-level visual analysis with multimodal transformers addresses the limitations of image-only evaluations. Meanwhile, Siamese networks excel at identifying subtle differences between genuine and fabricated text.
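The defining trait of a Siamese network is that both inputs pass through the same encoder with shared weights before their embeddings are compared. The sketch below shows only that structure, under heavy assumptions: the encoder is an untrained random projection over hashed character trigrams (all names and dimensions are hypothetical), so its similarity scores carry no real meaning until the shared weights are trained on labeled pairs.

```python
import math
import random

DIM_IN, DIM_OUT = 64, 8
rng = random.Random(0)
# One weight matrix shared by both branches: this sharing is what makes it Siamese.
SHARED_W = [[rng.uniform(-1, 1) for _ in range(DIM_IN)] for _ in range(DIM_OUT)]


def bag_of_trigrams(text):
    """Hash character trigrams into a fixed-size count vector."""
    vec = [0.0] * DIM_IN
    padded = f"  {text.lower()}  "
    for i in range(len(padded) - 2):
        # A position-weighted sum of code points keeps the hash deterministic.
        trigram = padded[i:i + 3]
        bucket = sum(ord(c) * (k + 1) for k, c in enumerate(trigram)) % DIM_IN
        vec[bucket] += 1.0
    return vec


def encode(text):
    """Shared branch: linear projection followed by tanh."""
    x = bag_of_trigrams(text)
    return [math.tanh(sum(w * v for w, v in zip(row, x))) for row in SHARED_W]


def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def siamese_similarity(text_a, text_b):
    """Run both texts through the same encoder and compare the embeddings."""
    return cosine(encode(text_a), encode(text_b))


genuine = "The city council approved the budget on Tuesday."
suspect = "The city council secretly approved a hidden budget!"
print(round(siamese_similarity(genuine, suspect), 3))
```

In a trained system, a contrastive or triplet loss would adjust `SHARED_W` so that genuine and fabricated versions of a story end up far apart in the embedding space.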

These developments build on earlier methods like content and social context analysis, now enhanced with multimodal approaches and advanced text representation techniques.

| Feature | Impact | Implementation Benefit |
|---|---|---|
| LLM Description Models | Better detection of false news | Deeper context understanding |
| Multimodal Analysis | Greater accuracy | Comprehensive content checks |
| Siamese Networks | Improved text analysis | Detection of nuanced patterns |

However, these advancements also demand rigorous ethical oversight.

The ethical challenges surrounding AI-driven news verification are significant. Research shows that 66% of Europeans encounter false information weekly, underscoring the urgency for dependable detection systems.

"One consumer may view content as biased that another may think is true. Similarly, one model may flag content as unreliable, and another will not. A developer may consider one model the best, but another developer may disagree. We think a clear understanding of these issues must be attained before a model may be considered trustworthy."

Key ethical considerations include:

To address existing gaps, future work must focus on both ethical and technical challenges.

"At this point in history, with the rampant spread of misinformation and the polarization of society, stakes could not be higher for developing accurate tools to detect fake news. Clearly, we must proceed with caution, inclusiveness, thoughtfulness, and transparency." - Chanaka Edirisinghe, Ph.D.

Key areas for further development include:

Recent experiments have shown notable performance improvements across multiple datasets.

Explainable AI is proving to be a powerful ally in tackling fake news. Research highlights that over half of adult Americans depend on social media for news, making these platforms a major source of misinformation.

Here are some key advancements in explainable AI for detecting fake news:

| Advancement | Impact | Current Status |
|---|---|---|
| Surrogate Methods | Enables quick deployment without altering model architecture | Successfully applied in BERT-based systems |
| Multi-method Analysis | Improves pattern detection | Effective in spotting subtle deception |
| Named Entity Recognition | Reduces bias in data processing | Actively used during preprocessing |

Tools like dEFEND showcase the potential of explainable AI by using co-attention mechanisms to analyze the connection between news content and user interactions. This is especially important, given that humans correctly identify deception only 54% of the time.
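To give a feel for co-attention, the sketch below builds an affinity matrix between news sentences and user comments, then derives attention weights for each side from it. It is a deliberately simplified variant (dot-product affinity with max-pooling), not dEFEND's learned formulation, and the three-dimensional embeddings are hypothetical values chosen for illustration.

```python
import math


def softmax(values):
    """Numerically stable softmax."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def co_attention(sentences, comments):
    """Return (sentence_weights, comment_weights) from a shared affinity matrix."""
    # affinity[i][j]: how strongly sentence i relates to comment j (dot product)
    affinity = [[sum(s * c for s, c in zip(sent, com)) for com in comments]
                for sent in sentences]
    # Each side is scored by its best match on the other side.
    sentence_scores = [max(row) for row in affinity]
    comment_scores = [max(col) for col in zip(*affinity)]
    return softmax(sentence_scores), softmax(comment_scores)


# Hypothetical 3-dimensional embeddings; a real system would learn these.
sentences = [
    [0.9, 0.1, 0.0],  # "The vaccine contains microchips."
    [0.1, 0.8, 0.1],  # "The agency released a statement on Monday."
]
comments = [
    [1.0, 0.0, 0.0],  # "Microchips? Source please."
    [0.8, 0.2, 0.0],  # "This claim was debunked last year."
    [0.0, 0.1, 0.9],  # "First!"
]

sent_w, com_w = co_attention(sentences, comments)
print("sentence weights:", [round(w, 3) for w in sent_w])
print("comment weights:", [round(w, 3) for w in com_w])
```

The highest-weighted sentence and comments are the ones that reference each other most strongly, which is what lets such a system show users the specific claim and the specific reactions that drove a prediction.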

These developments pave the way for more advanced AI tools to verify news effectively.

To build on the progress made, the next phase involves improving transparency and optimizing model performance.

Here’s how to move forward: