Oct 13, 2025

LIME and SHAP are tools designed to make AI decisions easier to understand. They provide insights into how AI models arrive at their conclusions, addressing concerns about transparency, fairness, and regulatory compliance. Here's what you need to know.

Both tools are widely used in areas like healthcare, finance, and image generation to clarify AI outputs, build trust, and ensure compliance. Choosing between them depends on your needs: speed and simplicity (LIME) or depth and reliability (SHAP).

LIME and SHAP are two methods for making AI decisions clearer. They translate complex model behavior into explanations people can follow, bridging the gap between sophisticated models and their users.

Both tools are model-agnostic: they work with many types of AI models. As more people ask how their data is used, tools like LIME and SHAP are key to explaining AI decisions that involve personal data.

LIME explains AI predictions by examining each decision individually. It measures how small changes to the input affect the output, revealing the model's behavior in the "local" neighborhood around that prediction.

Here's how it works: LIME builds a simple, interpretable model around the single prediction you want to understand. It perturbs parts of the input and observes how the model's output changes. For example, by deleting some words from an email, LIME checks how the spam score shifts.

Because LIME treats the model as a black box, looking only at what goes in and what comes out, it works with a wide range of models, from deep neural networks to decision trees. However, LIME must be run again for each prediction you want to explain.
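
The perturb-and-fit procedure above can be sketched in dependency-free Python. This is a minimal illustration in the spirit of LIME, not the `lime` package's API: `spam_score` is a hypothetical black-box model, and the proximity kernel, `kernel_width`, sample count, and small ridge penalty are illustrative assumptions. The explainer only ever calls `predict`, which is what makes the approach model-agnostic.

```python
import math
import random

# Hypothetical black-box spam model (an illustrative assumption). The explainer
# below only calls it on word lists; it never looks at these weights.
SPAM_WEIGHTS = {"free": 2.0, "winner": 1.5, "meeting": -1.0}


def spam_score(words):
    z = -1.0 + sum(SPAM_WEIGHTS.get(w, 0.0) for w in words)
    return 1.0 / (1.0 + math.exp(-z))


def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lime_explain(words, predict, num_samples=500, kernel_width=0.75, ridge=1e-3, seed=0):
    """LIME-style explanation: perturb the input, weight samples by proximity,
    and fit a weighted linear surrogate whose coefficients are the explanation."""
    rng = random.Random(seed)
    d = len(words)
    rows, targets, weights = [], [], []
    for i in range(num_samples):
        # Sample 0 is the original email; the others randomly drop words.
        mask = [1] * d if i == 0 else [rng.randint(0, 1) for _ in range(d)]
        kept = [w for w, keep in zip(words, mask) if keep]
        distance = 1.0 - sum(mask) / d  # fraction of words removed
        rows.append([1.0] + [float(v) for v in mask])  # leading 1.0 is the intercept
        targets.append(predict(kept))
        weights.append(math.exp(-(distance ** 2) / kernel_width ** 2))
    # Weighted ridge regression: (X^T W X + ridge * I) beta = X^T W y
    p = d + 1
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) + (ridge if i == j else 0.0)
             for j in range(p)] for i in range(p)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets)) for i in range(p)]
    beta = solve(xtwx, xtwy)
    return dict(zip(words, beta[1:]))  # drop the intercept


email = ["claim", "your", "free", "prize", "winner"]
explanation = lime_explain(email, spam_score)
for word, weight in sorted(explanation.items(), key=lambda kv: -kv[1]):
    print(f"{word:>8}: {weight:+.3f}")
```

With these settings, "free" receives the largest positive weight and "winner" the next largest, while words the model ignores stay close to zero. Keying the result by word assumes the email contains no repeated words.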

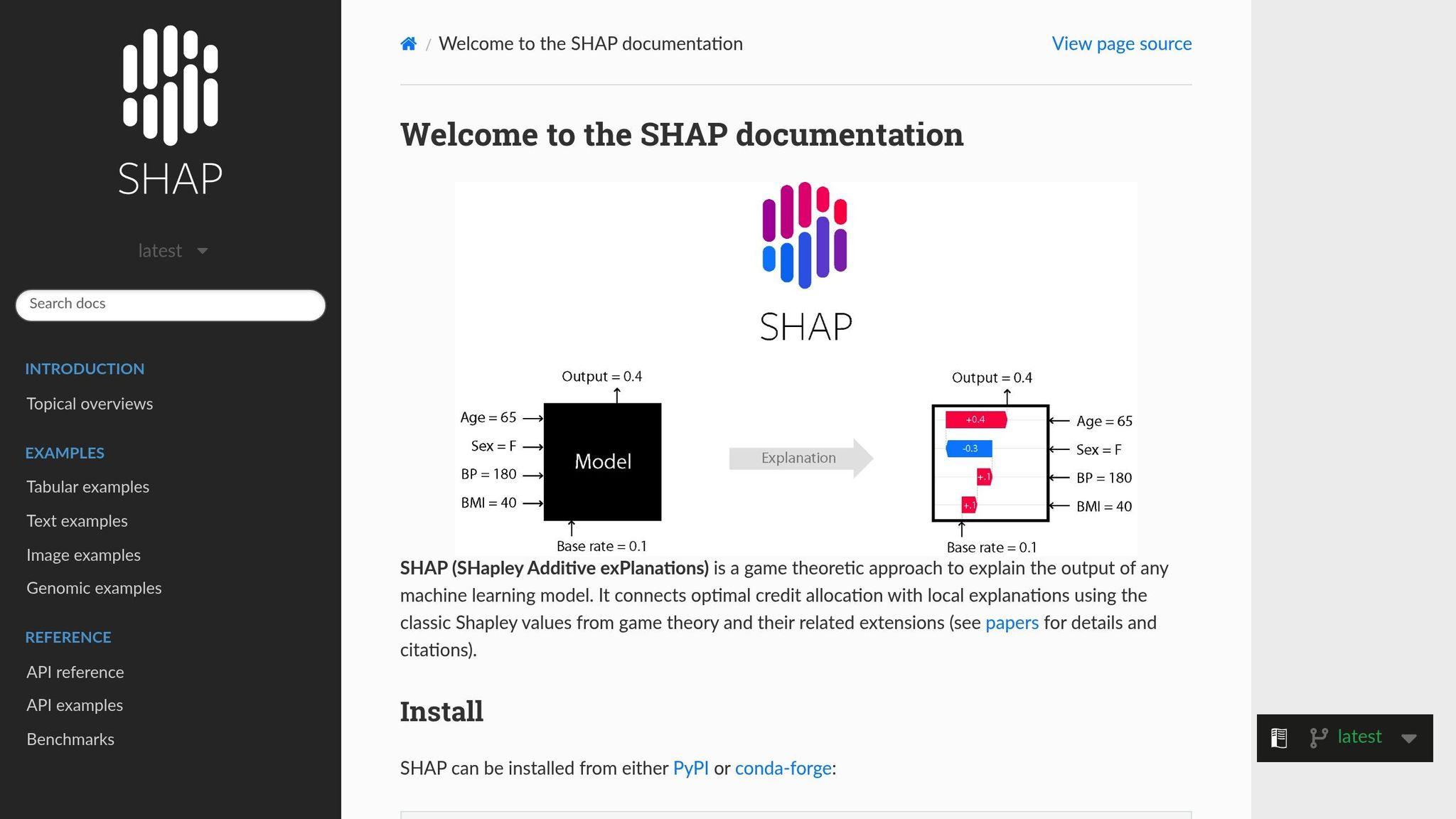

SHAP is rooted in game theory. It draws on Shapley values, originally developed to divide a payout fairly among cooperating players, to explain AI predictions. SHAP treats each input feature as a "player" in a game and calculates how much that feature contributes to the final prediction.
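
To make the "player" analogy concrete, here is a brute-force sketch of the Shapley formula. It relies on a simplifying assumption: a feature that is "absent" from a coalition is set to a single baseline value, whereas the `shap` library typically averages over a background dataset. `house_price` is a hypothetical model with one interaction term.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values by enumerating every coalition of the other features.
    A feature outside the coalition takes its baseline value (simplifying assumption)."""
    n = len(x)

    def value(coalition):
        # Coalition members use the explained input; everyone else uses the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))  # marginal contribution of i
    return phi


def house_price(f):
    """Hypothetical model: linear terms plus an interaction between features 0 and 2."""
    return 50 * f[0] + 20 * f[1] + 10 * f[0] * f[2]


phi = shapley_values(house_price, x=[3, 2, 1], baseline=[0, 0, 0])
print([round(v, 6) for v in phi])  # [165.0, 40.0, 15.0]
```

The interaction term `10 * f[0] * f[2]` adds 30 to the prediction, and the Shapley values split it evenly between features 0 and 2. The three values sum to 220, the gap between the prediction and the baseline output.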

SHAP answers the question: "How much did this feature change the model's output?" Unlike LIME, SHAP provides both local and global explanations. It shows how features influence a single prediction and also identifies the most important features across an entire dataset or model.
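
One common way to turn local explanations into a global view is to average the absolute SHAP value of each feature over a dataset. The sketch below assumes a single baseline input stands in for "absent" features, computes exact values by enumerating every feature ordering, and uses a hypothetical `risk_score` model.

```python
from itertools import permutations


def shapley_values(predict, x, baseline):
    """Exact Shapley values: average each feature's marginal contribution
    over every order in which features can be switched from baseline to x."""
    n = len(x)
    phi = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        z = list(baseline)
        prev = predict(z)
        for i in order:
            z[i] = x[i]  # feature i joins the coalition
            cur = predict(z)
            phi[i] += cur - prev
            prev = cur
    return [p / len(orders) for p in phi]


def global_importance(predict, rows, baseline):
    """Global view: mean absolute local Shapley value of each feature over a dataset."""
    local = [shapley_values(predict, row, baseline) for row in rows]
    return [sum(abs(phi[i]) for phi in local) / len(local) for i in range(len(baseline))]


def risk_score(f):
    """Hypothetical linear model; feature 2 is ignored entirely."""
    return 3 * f[0] - 2 * f[1] + 0 * f[2]


rows = [[1, 0, 5], [0, 1, 2], [1, 1, 0]]
print(shapley_values(risk_score, rows[2], [0, 0, 0]))  # [3.0, -2.0, 0.0]
print(global_importance(risk_score, rows, [0, 0, 0]))  # [2.0, 1.3333333333333333, 0.0]
```

Local values keep their sign (feature 1 pushes this prediction down), while the global summary uses magnitudes so that positive and negative effects on different rows do not cancel out.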

SHAP's rigorous mathematical foundation makes its explanations consistent and reliable. The feature contributions it calculates always add up to the difference between the actual prediction and the model's average (expected) prediction. And if a feature's contribution increases in a revised model, SHAP guarantees that its attributed value will not decrease, a property known as consistency.
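
These two guarantees can be stated precisely. In the standard SHAP formulation, $F$ is the set of all $M$ features, $f_S(x)$ is the model's expected output when only the features in $S$ are known, and $\phi_i$ is the attribution for feature $i$:

```latex
% Shapley value of feature i
\phi_i = \sum_{S \subseteq F \setminus \{i\}} \frac{|S|!\,(M - |S| - 1)!}{M!}\,\bigl[f_{S \cup \{i\}}(x) - f_S(x)\bigr], \qquad M = |F|

% Local accuracy: attributions sum to the gap between the prediction and the average prediction
f(x) = \phi_0 + \sum_{i=1}^{M} \phi_i, \qquad \phi_0 = \mathbb{E}[f(X)]

% Consistency: if feature i's marginal contribution never shrinks in a revised model f',
% its attribution cannot shrink either
f'_{S \cup \{i\}}(x) - f'_S(x) \ge f_{S \cup \{i\}}(x) - f_S(x) \;\; \forall S \subseteq F \setminus \{i\}
\;\Longrightarrow\; \phi_i(f', x) \ge \phi_i(f, x)
```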

LIME and SHAP are widely used in many fields to explain AI decisions.

On image-generation platforms such as NanoGPT, LIME and SHAP help reveal which inputs led to a particular final image. By providing clear, understandable explanations, these tools help users understand decisions that affect them, building trust in AI systems.

LIME and SHAP both help explain why an AI model makes particular decisions, but they work differently. These differences make each one better suited to certain situations.

A major difference is the scope of their explanations. LIME is designed to explain individual predictions, offering only local insights. SHAP, by contrast, explains single predictions and also reveals trends across the entire dataset.

They also differ in speed. LIME is fast because it only samples data near the prediction being explained, which makes it useful when you need quick answers, such as during image generation. SHAP is slower because it analyzes feature contributions more thoroughly, which makes it well suited to uncovering broad trends.
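
A quick count shows where SHAP's cost comes from. Brute-force Shapley values require evaluating the model on every subset of features, a number that doubles with each added feature, while a LIME explanation costs a fixed number of perturbed samples. The 5,000-sample budget below is a hypothetical figure chosen for illustration. In practice, SHAP variants such as TreeSHAP exploit model structure and KernelSHAP samples coalitions, so neither enumerates every subset.

```python
def exact_shap_evaluations(num_features: int) -> int:
    """Coalitions a brute-force Shapley computation must evaluate: every feature subset."""
    return 2 ** num_features


LIME_SAMPLES = 5_000  # hypothetical per-explanation sampling budget, independent of feature count

for m in (10, 20, 30):
    print(f"{m} features: {exact_shap_evaluations(m):,} coalitions vs {LIME_SAMPLES:,} LIME samples")
```

This prints 1,024, 1,048,576, and 1,073,741,824 coalitions for 10, 20, and 30 features, against a constant 5,000 samples.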

Their mathematical foundations differ as well. LIME perturbs the input and fits a simple local model to see how predictions shift. SHAP relies on cooperative game theory and Shapley values, which give its explanations formal mathematical guarantees.

Finally, they differ in stability. Because LIME relies on random sampling, it may produce slightly different explanations on each run. SHAP's results are more consistent, and its exact variants, such as TreeSHAP, return the same values every time.

| Aspect | LIME | SHAP |
|---|---|---|
| Explanation scope | Local (one prediction) | Local and global |
| Mathematical basis | Local surrogate model fitted to perturbed samples | Cooperative game theory (Shapley values) |
| Speed | Fast for a single prediction | Slower, especially for global analysis |
| Stability | May vary between runs | More consistent (deterministic for exact variants) |
| Attribution values | Approximate feature weights | Additive contributions that sum to the prediction's deviation from the average |
| Model compatibility | Any model (black-box) | Any model, plus faster model-specific variants |
| Best for | Quick explanations of individual predictions | In-depth model auditing and debugging |
| Ease of use | Simple to learn and use | Requires more expertise |

On image-generation platforms like NanoGPT, the choice between LIME and SHAP depends on the task. LIME is well suited to quickly explaining a single image, while SHAP is better for analyzing trends across many images or identifying the key factors that shape creative decisions.

LIME and SHAP are two well-known tools for understanding AI models, and each has its own strengths and weaknesses. The key is to understand these trade-offs and match them to your needs.

LIME is fast and easy to use. Because it examines only the neighborhood around a single prediction, it can quickly explain why a decision was made. For example, on image-generation platforms like NanoGPT, LIME can rapidly show why certain elements appear in an image without slowing down the workflow.

LIME works with many types of models, such as neural networks and tree ensembles, without needing access to their internals. This black-box approach lets it fit into a wide range of workflows.

SHAP has a strong mathematical foundation. Drawing on cooperative game theory, it assigns each feature a value that reflects its actual contribution to a decision. These values always add up consistently, which makes SHAP dependable in high-stakes situations.

SHAP goes beyond individual predictions to provide both local and global views. While LIME handles one prediction at a time, SHAP uncovers broad trends in your dataset, identifying which features matter most overall. This combination of detail and reproducibility supports documentation and builds stakeholder trust.

Because LIME relies on random sampling, its explanations can vary from run to run. Each run may be based on a different set of perturbed samples, so LIME may not provide the stability required for regulatory compliance or high-stakes decisions.

Another limitation is that LIME's linear surrogate treats features as if they act independently. This is an oversimplification when features interact, as in image-generation tools where elements like color and texture combine to shape the final image.
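
The toy example below illustrates the problem. The hypothetical `model` outputs 1 only when both features are present, a pure interaction. A straight-line surrogate fitted to all four on/off combinations (ordinary least squares with uniform weights, a simplification of LIME's proximity-weighted fit) cannot reproduce it.

```python
from itertools import product


def model(x1, x2):
    """Hypothetical model where two features only matter together (think color AND texture)."""
    return float(x1 and x2)


# Every on/off combination of the two features, like LIME's binary perturbations.
points = list(product([0, 1], repeat=2))
ys = [model(a, b) for a, b in points]
n = len(points)

# Fit y ~ c + w1*x1 + w2*x2 by ordinary least squares. In this full factorial
# design the centered features are orthogonal, so each slope is cov(x_j, y) / var(x_j).
mean_y = sum(ys) / n
means = [sum(p[j] for p in points) / n for j in range(2)]
w = []
for j in range(2):
    cov = sum((p[j] - means[j]) * (y - mean_y) for p, y in zip(points, ys)) / n
    var = sum((p[j] - means[j]) ** 2 for p in points) / n
    w.append(cov / var)
c = mean_y - sum(wj * mj for wj, mj in zip(w, means))

for (a, b), y in zip(points, ys):
    print(f"x=({a},{b})  model={y:.2f}  linear surrogate={c + w[0] * a + w[1] * b:+.2f}")
```

The surrogate predicts -0.25, +0.25, +0.25, and +0.75 where the model outputs 0, 0, 0, and 1, so every point is off by 0.25. It also credits each feature with +0.5 on its own, even though neither feature has any effect alone.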

SHAP is computationally expensive. Computing exact SHAP values is time-consuming, especially for large, complex models, which makes SHAP difficult to apply to very large datasets or in real time. Faster approximations exist, but they can be less accurate.
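
One widely used approximation, shown here as a sketch, averages marginal contributions over a random sample of feature orderings instead of all of them. This is permutation sampling, not the KernelSHAP algorithm (which fits a weighted linear regression over sampled coalitions). The `model` function, the single baseline input, and the choice of 1,000 permutations are hypothetical.

```python
import random
from itertools import permutations


def add_marginals(predict, x, baseline, order, phi):
    """Switch features from baseline to x in the given order, crediting each
    feature with the change in model output it causes."""
    z = list(baseline)
    prev = predict(z)
    for i in order:
        z[i] = x[i]
        cur = predict(z)
        phi[i] += cur - prev
        prev = cur


def shapley_exact(predict, x, baseline):
    """Exact Shapley values over all n! orderings (the cost explodes as n grows)."""
    orders = list(permutations(range(len(x))))
    phi = [0.0] * len(x)
    for order in orders:
        add_marginals(predict, x, baseline, order, phi)
    return [p / len(orders) for p in phi]


def shapley_sampled(predict, x, baseline, num_permutations=1000, seed=0):
    """Monte Carlo estimate: average over randomly drawn orderings only."""
    rng = random.Random(seed)
    order = list(range(len(x)))
    phi = [0.0] * len(x)
    for _ in range(num_permutations):
        rng.shuffle(order)
        add_marginals(predict, x, baseline, order, phi)
    return [p / num_permutations for p in phi]


def model(z):
    """Hypothetical model with interactions between features (0, 1) and (4, 5)."""
    return 4 * z[0] * z[1] + 2 * z[2] + z[3] - z[4] * z[5]


x, base = [1] * 6, [0] * 6
print("exact:  ", [round(v, 3) for v in shapley_exact(model, x, base)])  # [2.0, 2.0, 2.0, 1.0, -0.5, -0.5]
print("sampled:", [round(v, 3) for v in shapley_sampled(model, x, base)])
```

The sampled estimate lands close to the exact values but not on them, and it changes with the seed. One property survives sampling: within each ordering the marginal contributions telescope, so the estimated values still sum exactly to the model's output minus the baseline output.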

SHAP also requires more expertise to use well. It comes in several variants, including TreeSHAP, DeepSHAP, and KernelSHAP, and choosing and configuring the right one is essential to avoid misleading results.
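
As a rough guide, the `shap` Python package exposes these variants as separate explainer classes: `TreeExplainer` (TreeSHAP) for tree ensembles, `DeepExplainer` (DeepSHAP) for deep neural networks, `LinearExplainer` for linear models, and the model-agnostic, typically slowest `KernelExplainer` (KernelSHAP). The helper below is a hypothetical sketch of that decision. It returns class names as strings so it runs without `shap` installed, and the model-family labels are assumptions.

```python
TREE_FAMILIES = {"decision_tree", "random_forest", "gradient_boosting", "xgboost", "lightgbm"}
DEEP_FAMILIES = {"neural_network", "pytorch", "tensorflow", "keras"}
LINEAR_FAMILIES = {"linear_regression", "logistic_regression"}


def choose_shap_explainer(model_family: str) -> str:
    """Hypothetical helper: map a model family label to a shap explainer class name."""
    family = model_family.lower()
    if family in TREE_FAMILIES:
        return "TreeExplainer"    # TreeSHAP: exact and fast for tree ensembles
    if family in DEEP_FAMILIES:
        return "DeepExplainer"    # DeepSHAP: DeepLIFT-based approximation for deep nets
    if family in LINEAR_FAMILIES:
        return "LinearExplainer"  # closed-form attributions for linear models
    return "KernelExplainer"      # KernelSHAP: works with any model, but slowest


for family in ("XGBoost", "pytorch", "logistic_regression", "svm"):
    print(f"{family}: {choose_shap_explainer(family)}")
```

Falling back to the model-agnostic explainer keeps the helper safe for unfamiliar models, at the cost of speed.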

Both tools also struggle with high-dimensional data, such as images or text. With many features, producing clear and useful explanations becomes difficult. These challenges show why it is crucial to choose a tool based on your model's complexity and your task's requirements.
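
A common remedy is to explain groups of features rather than individual ones. LIME's image explainer, for instance, perturbs superpixels produced by an image-segmentation step instead of single pixels. The sketch below substitutes a plain square grid for segmentation (an assumption made for simplicity) and shows how grouping shrinks the number of features an explainer has to handle.

```python
def grid_segments(height, width, patch):
    """Assign each pixel to a square patch; returns a 2-D grid of segment ids."""
    cols = (width + patch - 1) // patch
    return [[(r // patch) * cols + (c // patch) for c in range(width)] for r in range(height)]


def apply_mask(image, segments, keep, fill=0):
    """Blank out every pixel whose segment is switched off (keep[seg] == 0)."""
    return [[px if keep[seg] else fill for px, seg in zip(img_row, seg_row)]
            for img_row, seg_row in zip(image, segments)]


image = [[r * 8 + c + 1 for c in range(8)] for r in range(8)]  # toy 8x8 grayscale image
segments = grid_segments(8, 8, patch=4)
num_features = len({s for row in segments for s in row})
print(f"{8 * 8} pixel features -> {num_features} patch features")  # 64 pixel features -> 4 patch features

# One perturbation: keep the top-left and bottom-right patches, blank the other two.
perturbed = apply_mask(image, segments, keep=[1, 0, 0, 1])
```

Each patch now acts as a single on/off feature, so an exact Shapley computation over this image would need 2**4 = 16 coalitions instead of 2**64.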

When explaining AI-generated images, choosing the right tool is key to keeping workflows smooth and earning user trust. The choice between LIME and SHAP depends on your model's complexity, what you need to explain, and the resources you have available.

This choice matters even more when you work with many image-generation models. For example, platforms like NanoGPT, which offer models such as Flux Pro, Dall-E, and Stable Diffusion, benefit greatly from clear explanations of how those models work. Each model may call for its own explanation approach.

LIME is ideal for fast, targeted explanations, which makes it a good fit for customer support and apps where users expect quick answers. If a user requests an image of "sunset over mountains" but sees unexpected colors or elements, LIME can show what led to those results.

SHAP, on the other hand, suits deeper analysis of complex models or the study of trends across many outputs. If you need to understand why certain style prompts work well with particular models, or how your AI decides on layout, color, or subject, SHAP's detailed view of feature interactions makes it a strong choice, despite its higher computational cost.

Model complexity plays a big part in this choice. For transformer- or diffusion-based models with many components, SHAP's ability to account for complex feature interactions is essential. These models don't just read individual words in a prompt; they model how words relate to each other, how style and content instructions combine, and how different parts of the network contribute to the final image.

Both tools make AI more transparent and help address privacy concerns.

Understanding how AI makes decisions is central to user trust, especially for systems like NanoGPT that store data on users' devices. By openly explaining how the AI uses inputs and data, these systems can highlight the benefits of keeping data local.

From a business perspective, explainability tools are essential for regulatory compliance and AI fairness. For instance, if an image generated on your platform raises concerns, these tools let you investigate and make the necessary fixes.

LIME excels at on-the-spot transparency. When a user questions a specific result, you can quickly show which parts of their input or which model components shaped it. This responsiveness builds trust in every interaction.

SHAP supports broader transparency efforts. It lets you analyze trends across many outputs, detect potential biases, and show stakeholders that your AI behaves fairly and predictably. This is especially valuable when you run multiple AI models, since SHAP helps keep behavior and standards consistent across your system.

LIME and SHAP have become central to making AI more transparent and trustworthy. As AI models, such as those used for image generation, grow more complex, these tools help close the gap between complex code and user understanding. They turn opaque AI decisions into something both developers and users can understand.

Explainability methods like these are key to broader AI adoption. In the U.S., clear explanations of AI decisions build trust and make it easier for organizations to comply with privacy regulations. As rules on AI transparency evolve, adopting tools like LIME and SHAP can keep your organization ahead of these requirements. Beyond saving time and resources, this approach builds trust with users who want to understand how decisions are made.

The benefits of explainable AI extend well beyond compliance. By making decision-making transparent, these tools let users troubleshoot problems themselves. This is especially helpful on image-generation platforms, where users can refine their requests based on clear feedback about what shapes the AI's output.

Transparency also helps protect user privacy. Take NanoGPT as an example: it combines transparency with on-device data storage to address two major concerns, data security and AI explainability. By keeping data on the user's own device and clearly explaining how the AI uses that information, NanoGPT lets users keep control of their data while understanding how it's used.

NanoGPT's pay-as-you-go model, combined with on-device data storage, strengthens both security and transparency. When tools like LIME and SHAP show how models like Flux Pro, Dall-E, and Stable Diffusion process inputs, users can be confident that their work stays private and secure.

This marks a shift in how AI platforms can operate. Instead of asking users to blindly trust complex systems, explainable AI combined with local data storage lets users see exactly how their information is used. This approach pairs privacy protection with clear, detailed explanations that users can understand and verify.

Combining transparency and privacy is key to wider AI adoption. As users learn more about how their data is used, platforms that prioritize both clear explanations and local data control are more likely to attract users and retain them over time.

LIME and SHAP are essential tools for shedding light on how AI models make decisions. They break down complex processes, helping to pinpoint potential biases and ensure that AI systems function in a fair and ethical manner.

These tools also help organizations navigate regulatory requirements, such as the Fair Credit Reporting Act (FCRA), by offering clarity into model behavior. By promoting accountability and transparency, LIME and SHAP play a key role in fostering trust among users and stakeholders, making them indispensable for responsible AI deployment.

When deciding between LIME and SHAP, it’s all about what you need and how complex your AI model is.

LIME shines when you’re looking for quick, local explanations for individual predictions. It’s straightforward to use and works well with simpler models or when speed is a key concern.

SHAP, on the other hand, offers both local and global explanations, giving you a deeper look into how your model behaves overall. This makes it a better fit for more complex models, though it does come with higher computational demands.

To choose the right tool, think about your model's complexity, how fast you need results, and whether you value detailed insights or quick, targeted explanations.

LIME and SHAP play a key role in shedding light on how AI models make decisions about generated images. LIME (Local Interpretable Model-agnostic Explanations) simplifies complex results by tweaking input data to determine which parts have the most impact on a specific outcome. This makes it easier to understand why a model arrived at a particular result.

SHAP (SHapley Additive exPlanations) complements this by assigning scores to individual features, highlighting how much each one contributes to the final image.

When combined, these tools offer valuable insights into an AI model's decision-making process, making it easier for users to grasp why specific elements appear in the generated images. This transparency helps build trust in the system.