Feb 10, 2026

Quantum computers will break today’s encryption - are you ready? AI platforms are at risk of having encrypted data intercepted now and decrypted later, thanks to the rapid progress in quantum computing. Post-Quantum Cryptography (PQC) offers a solution by using algorithms resistant to both classical and quantum attacks.

Here’s what you need to know:

If your AI platform handles sensitive data, transitioning to PQC is no longer optional - it’s a necessity.

AI platforms are particularly vulnerable to quantum-powered attacks, facing risks that go beyond those of traditional web applications. These systems manage two critical assets: proprietary model weights, which represent years of research and development, and vast datasets containing sensitive personal information (PII) from user interactions. The stakes are high, as the loss or compromise of these assets could lead to significant financial and reputational damage.

This isn't some far-off, hypothetical threat. As of early 2026, 87% of enterprises have yet to address the quantum vulnerabilities in their AI infrastructure. Attackers are already intercepting encrypted AI training data and model components, with plans to decrypt them when quantum computers capable of breaking current encryption standards become operational, likely between 2030 and 2035. This means that data and models intercepted today could be exploited in the future.

The UK's National Cyber Security Centre (NCSC) emphasizes the urgency: "For organisations that need to provide long-term cryptographic protection of very high-value data, the possibility of a CRQC in the future is a relevant threat now". This is especially concerning for AI platforms handling sensitive information - such as medical records, financial data, or legal documents - that often require decades of confidentiality.

AI model weights are not just data - they're intellectual property built through significant investment in compute resources, curated datasets, and engineering expertise. If these weights are intercepted during deployment, competitors could replicate proprietary features without the cost of development.

Post-quantum cryptography (PQC) offers practical tools to protect these assets. For example, ML-KEM (NIST FIPS 203) secures model weights during transmission by wrapping AES-256 symmetric keys with quantum-resistant key encapsulation. Similarly, ML-DSA (NIST FIPS 204) ensures that digital signatures used for model releases remain secure, preventing tampering or substitution. In September 2023, Signal adopted the Post-Quantum Extended Diffie-Hellman (PQXDH) protocol, combining X25519 with CRYSTALS-Kyber to bolster quantum resistance.

PQC also strengthens the AI supply chain. By transitioning to quantum-resistant digital signatures like ML-DSA, organizations can prevent attackers from forging updates or creating fake API authentication tokens. This ensures that only verified model versions are deployed in production environments.

Beyond protecting models, AI platforms must also safeguard the sensitive user data they process. Interactions on systems like NanoGPT often involve private information - ranging from business strategies to personal health questions and creative ideas.

Hybrid TLS implementations provide a strong defense by combining classical ECDHE with quantum-safe ML-KEM-768. This approach protects data both now and in the future. Apple showcased this in February 2024 with its "PQ3" protocol for iMessage, which combines Elliptic Curve cryptography with Kyber (ML-KEM) for secure key exchanges and rekeying. While NanoGPT reduces risk by storing data locally on user devices, any data transmitted for model inference still requires quantum-resistant encryption.

The urgency is undeniable. Security engineer Likhon warns: "If you're planning AI infrastructure for 2026, it must be quantum-safe. If you're securing 2024-2025 data, it's already at risk". For AI platforms handling sensitive data, adopting post-quantum cryptography isn't just about preparing for the future - it’s about protecting the data that’s vulnerable right now.

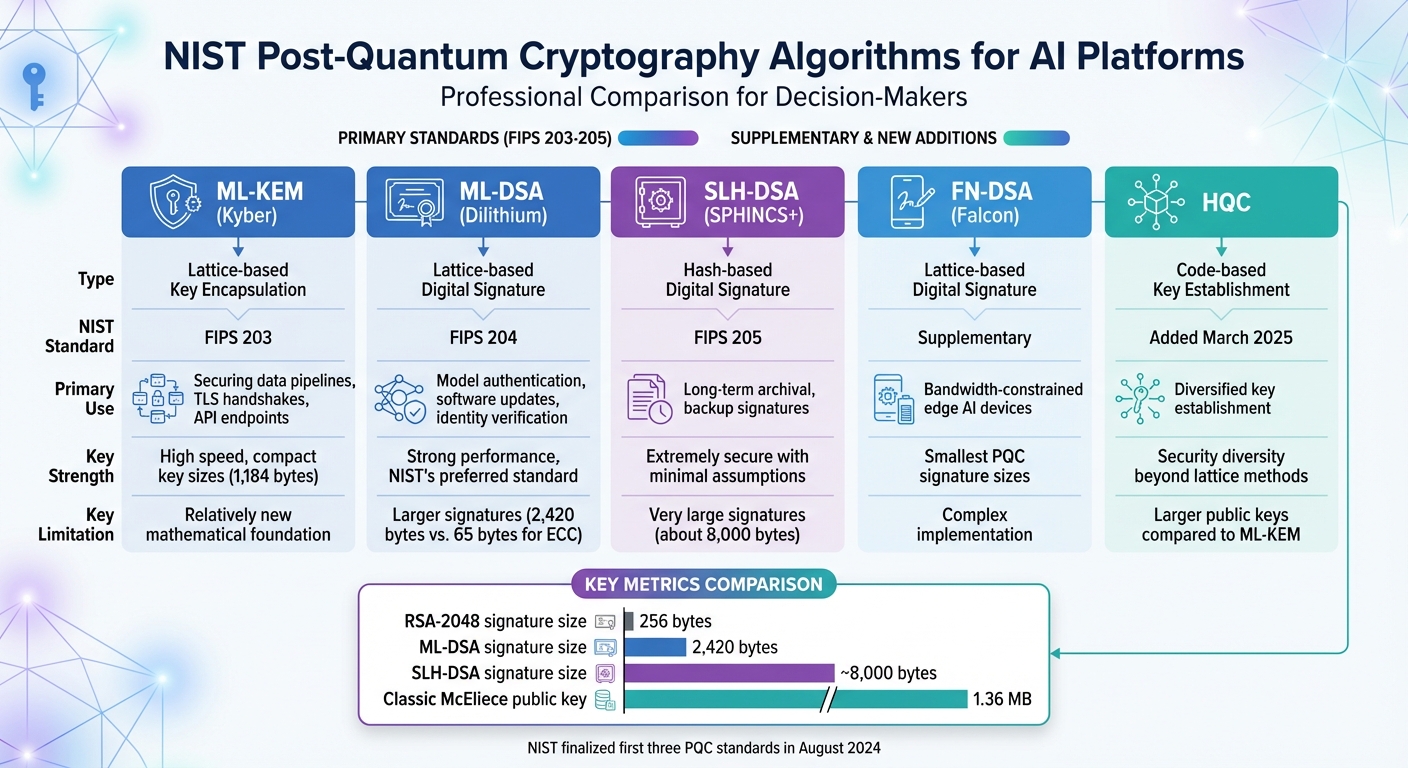

NIST Post-Quantum Cryptography Algorithms Comparison for AI Platforms

As quantum computing edges closer to mainstream reality, the need for quantum-safe encryption has become more urgent. Recognizing this, NIST introduced its first three official Post-Quantum Cryptography (PQC) standards in August 2024: FIPS 203 (ML-KEM), FIPS 204 (ML-DSA), and FIPS 205 (SLH-DSA). These standards are designed to protect against both classical and quantum threats, including those enabled by Shor's algorithm. According to NIST, "the two digital signature standards (ML-DSA and SLH-DSA) and key-encapsulation mechanism standard (ML-KEM) will provide the foundation for most deployments of post-quantum cryptography. They can and should be put into use now". With plans to phase out quantum-vulnerable algorithms by 2030 and completely eliminate them by 2035, organizations - especially those managing sensitive AI data - have limited time to make the transition.

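The three NIST standards each serve a specific role in securing AI platforms: ML-KEM handles key establishment, ML-DSA is the primary general-purpose digital signature scheme, and SLH-DSA is a hash-based signature scheme intended as a backup in case lattice-based signatures prove vulnerable.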

NIST also includes two supplementary algorithms in its portfolio. FN-DSA (formerly Falcon) is a lattice-based signature scheme known for its small signature sizes, though it requires complex floating-point math for implementation. Meanwhile, HQC, a code-based key-establishment algorithm added in March 2025, broadens the cryptographic foundation beyond lattice-based methods.

AI workloads often demand specific cryptographic features, such as speed, compact key sizes, or robust security. Here's a breakdown of how these algorithms cater to different use cases:

| Algorithm | Type | Primary Use in AI | Key Strength | Key Limitation |
|---|---|---|---|---|
| ML-KEM (Kyber) | Lattice-based Key Encapsulation | Securing data pipelines, TLS handshakes, API endpoints | High speed, compact key sizes (1,184 bytes) | Relatively new mathematical foundation |
| ML-DSA (Dilithium) | Lattice-based Digital Signature | Model authentication, software updates, identity verification | Strong performance, NIST's preferred standard | Larger signatures (2,420 bytes vs. 65 bytes for ECC) |
| SLH-DSA (SPHINCS+) | Hash-based Digital Signature | Long-term archival, backup signatures | Extremely secure with minimal assumptions | Very large signatures (about 8,000 bytes) |
| FN-DSA (Falcon) | Lattice-based Digital Signature | Bandwidth-constrained edge AI devices | Smallest PQC signature sizes | Complex implementation |
| HQC | Code-based Key Establishment | Diversified key establishment | Security diversity beyond lattice methods | Larger public keys compared to ML-KEM |

These algorithms are already being adopted in real-world scenarios. For instance, in August 2023, Google and ETH Zürich introduced a FIDO2 security key that combines ECC and Dilithium signatures. This collaboration highlights how post-quantum cryptography is no longer just a theoretical concept - it’s being integrated into production-ready solutions that AI platforms can deploy today.

Transitioning to post-quantum cryptography (PQC) might sound complex, but it begins with identifying your encryption vulnerabilities. For platforms like NanoGPT, which handle sensitive user data and interact with various AI services, this shift requires careful planning. However, it’s a critical step to safeguard against future quantum threats. Here’s how you can systematically upgrade your platform’s encryption.

Start by creating a Cryptographic Bill of Materials (CBOM) - essentially an inventory of where and how your platform uses public-key cryptography. This includes areas like TLS termination for API endpoints, encryption of model weights, service-to-service mutual TLS (mTLS), and the protection of user authentication tokens. A complete and accurate CBOM is your foundation for a successful migration.
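A CBOM can start as a plain list of records. The sketch below is one possible shape, not a standard schema: the `CryptoAsset` fields, the component names, and the data lifetimes are all hypothetical, and the set of quantum-vulnerable algorithms is illustrative rather than exhaustive.

```python
from dataclasses import dataclass

# Public-key algorithm families that Shor's algorithm would break
# (illustrative list, not exhaustive).
QUANTUM_VULNERABLE = {"RSA", "ECDSA", "ECDH", "X25519", "Ed25519", "DH"}


@dataclass(frozen=True)
class CryptoAsset:
    component: str            # where the cryptography is used (hypothetical names)
    purpose: str              # what it protects
    algorithm: str            # algorithm family currently in use
    data_lifetime_years: int  # how long the protected data must stay confidential


def migration_queue(inventory):
    """Return quantum-vulnerable entries, longest-lived data first."""
    at_risk = [a for a in inventory if a.algorithm in QUANTUM_VULNERABLE]
    return sorted(at_risk, key=lambda a: a.data_lifetime_years, reverse=True)


# Example inventory; every entry here is made up for illustration.
inventory = [
    CryptoAsset("api-gateway", "TLS key exchange", "X25519", 10),
    CryptoAsset("model-store", "model weight key wrapping", "RSA", 20),
    CryptoAsset("service-mesh", "mTLS certificates", "ECDSA", 1),
    CryptoAsset("model-store", "bulk encryption", "AES-256", 20),
]

for asset in migration_queue(inventory):
    print(f"{asset.component}: {asset.purpose} ({asset.algorithm})")
```

Sorting by data lifetime reflects the harvest-now, decrypt-later concern: the longer data must stay confidential, the sooner its key exchange or key wrapping should move to PQC. The AES-256 entry is left out of the queue because symmetric encryption is not broken by Shor's algorithm.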

Once you’ve mapped out your cryptographic dependencies, prioritize upgrades based on your platform’s threat model. A key first step is enhancing transport privacy through hybrid post-quantum key agreement. This involves a hybrid Key Encapsulation Mechanism (KEM) such as X25519 combined with ML-KEM-768 (standardized from Kyber768), where the session key is derived from both shared secrets. Data therefore remains secure as long as at least one algorithm in the pair remains unbroken.
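The "secure while either algorithm holds" property comes from how the two shared secrets are combined. The following is a minimal sketch of that combining step only, using HKDF (RFC 5869) built from the standard library. It assumes the classical and post-quantum shared secrets have already been produced by real X25519 and ML-KEM implementations; the function name `combine_shared_secrets`, the `info` label, and the concatenation order are illustrative choices, not the wire format of any specific TLS specification.

```python
import hashlib
import hmac


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF with SHA-256 (RFC 5869): extract a PRK, then expand it."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def combine_shared_secrets(classical_ss: bytes, pq_ss: bytes) -> bytes:
    """Derive one session key that depends on both input secrets.

    Both inputs are assumed to be fixed-length (32 bytes each), so plain
    concatenation is unambiguous. The info label is a made-up example.
    """
    return hkdf_sha256(classical_ss + pq_ss, salt=b"\x00" * 32, info=b"hybrid-kem-demo")
```

Because the derived key depends on both inputs, an attacker who recovers only the classical secret (for example with a future quantum computer) still cannot compute the session key without the ML-KEM secret, and vice versa.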

For AI platforms, securing model weights is crucial. Use a hybrid key wrapping approach: encrypt data with AES-256, then wrap the key using both classical cryptography (RSA/ECC) and ML-KEM algorithms. This layered strategy strengthens model security.

Roll out these changes gradually through phased deployments. Start with a small percentage of traffic (1–5%) to test for handshake success, latency, and CPU usage. Hybrid post-quantum TLS typically increases CPU usage by a small margin per handshake, so allocate an additional 10–20% CPU headroom during initial testing.
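One common way to run a percentage-based rollout is to hash a stable client identifier into a bucket, so the same client always gets the same decision and stays in the cohort as the percentage grows. The sketch below assumes such an identifier exists; the function names and the 40-core baseline in the usage note are hypothetical.

```python
import hashlib


def in_hybrid_cohort(client_id: str, rollout_percent: float) -> bool:
    """Deterministically assign a client to the hybrid-TLS cohort.

    Clients are hashed into 10,000 buckets, giving 0.01% granularity.
    """
    digest = hashlib.sha256(client_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < rollout_percent * 100


def cpu_budget(baseline_cores: float, headroom: float) -> float:
    """Cores to provision given a fractional headroom (0.2 means +20%)."""
    return baseline_cores * (1 + headroom)
```

With a hypothetical baseline of 40 cores at the TLS termination tier, `cpu_budget(40, 0.2)` provisions for about 48 cores during the test window. Raising `rollout_percent` from 1 to 5 only adds clients to the cohort; nobody who was already negotiating hybrid key exchange is moved back.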

To future-proof your platform, incorporate crypto-agility into your architecture. Avoid hardcoding cryptographic algorithms; instead, configure them dynamically. This approach makes it easier to switch algorithms or revert to classical encryption if needed, without rewriting code. For platforms like NanoGPT, which store data locally on user devices, this flexibility is particularly important to ensure seamless updates.
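As a rough illustration of crypto-agility, the sketch below keeps the key exchange preference in configuration rather than in code, so switching or rolling back is a config change. `X25519MLKEM768` and `X25519` are real TLS group names, but the config key, `KexPolicy`, `load_policy`, and `negotiate` are hypothetical names for this example and do not correspond to any particular TLS library's API.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

# Groups this (hypothetical) deployment knows how to negotiate.
SUPPORTED_KEX = {"X25519MLKEM768", "X25519"}


@dataclass(frozen=True)
class KexPolicy:
    preference: Tuple[str, ...]  # ordered, most preferred first


def load_policy(config: dict) -> KexPolicy:
    """Build a policy from config, rejecting algorithms we cannot serve."""
    groups = tuple(config["key_exchange_preference"])
    unknown = sorted(set(groups) - SUPPORTED_KEX)
    if unknown:
        raise ValueError(f"unsupported key exchange groups: {unknown}")
    return KexPolicy(groups)


def negotiate(policy: KexPolicy, client_groups: Sequence[str]) -> Optional[str]:
    """Pick the first server-preferred group that the client also offers."""
    offered = set(client_groups)
    for group in policy.preference:
        if group in offered:
            return group
    return None  # no common group: the handshake would fail


# Normal operation prefers hybrid; the rollback config is classical only.
hybrid_first = load_policy({"key_exchange_preference": ["X25519MLKEM768", "X25519"]})
classical_only = load_policy({"key_exchange_preference": ["X25519"]})
```

Older clients that only offer `X25519` still connect under `hybrid_first`, and reverting to `classical_only` requires no code change.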

"If you do nothing else in 2025, enable hybrid TLS. It's the cleanest, least disruptive step to inoculate your transport confidentiality against future quantum advances." - DebuggAI

For compatibility, retain classical ECDSA signatures on server certificates while upgrading only the key exchange to hybrid mode. This ensures existing clients remain functional while adding quantum resistance where it’s most critical. Additionally, consider dual-signing software artifacts and container images with both classical ECDSA and post-quantum ML-DSA. This protects your AI supply chain without disrupting current verification tools.
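Dual signing works because each verifier decides which signatures it requires. The sketch below shows only that acceptance policy. The verifiers are HMAC-based stand-ins so the example runs with the standard library; they are not ECDSA or ML-DSA, and in practice each would be replaced by a call into a real signature library. All names (`verify_artifact`, `demo_verifier`, the scheme labels) are hypothetical.

```python
import hashlib
import hmac
from typing import Callable, Dict, Set

Verifier = Callable[[bytes, bytes], bool]


def verify_artifact(artifact: bytes, signatures: Dict[str, bytes],
                    verifiers: Dict[str, Verifier], required: Set[str]) -> bool:
    """Accept the artifact only if every required scheme has a valid signature."""
    for scheme in required:
        sig = signatures.get(scheme)
        verifier = verifiers.get(scheme)
        if sig is None or verifier is None or not verifier(artifact, sig):
            return False
    return True


def demo_verifier(key: bytes) -> Verifier:
    """Stand-in verifier (HMAC-SHA256), NOT a real public-key signature check."""
    def verify(artifact: bytes, sig: bytes) -> bool:
        expected = hmac.new(key, artifact, hashlib.sha256).digest()
        return hmac.compare_digest(expected, sig)
    return verify


# Demo setup with placeholder keys and a placeholder artifact.
artifact = b"model-v1.safetensors contents"
keys = {"ecdsa": b"classical-demo-key", "ml-dsa": b"pq-demo-key"}
verifiers = {name: demo_verifier(key) for name, key in keys.items()}
signatures = {name: hmac.new(key, artifact, hashlib.sha256).digest()
              for name, key in keys.items()}

legacy_ok = verify_artifact(artifact, signatures, verifiers, {"ecdsa"})
strict_ok = verify_artifact(artifact, signatures, verifiers, {"ecdsa", "ml-dsa"})
```

Existing tooling keeps requiring only the classical signature, while upgraded deployment pipelines require both, so a forged classical signature alone is no longer enough to ship a model.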

Once hybrid cryptography is in place, AI can play a significant role in refining security and performance. For platforms handling sensitive data, AI-driven optimizations are essential to maintain robust protection.

Machine learning models can dynamically adjust encryption parameters based on real-time network conditions. For example, an AI-powered policy engine can decide whether to use ML-KEM or fall back to classical algorithms, depending on client capabilities, latency needs, and threat intelligence.

AI also enhances cryptographic agility management. Instead of manually configuring algorithms for different scenarios, AI systems can automatically switch between PQC algorithms or hybrid methods as threats evolve. If unusual patterns or errors suggest a potential cryptanalytic attack, the system can instantly adapt its cryptographic defenses.

Beyond agility, AI enables advanced threat detection tailored to quantum risks. Machine learning models can identify quantum-enabled attack patterns, such as the rapid credential trials associated with Grover's search algorithm. This early detection provides a critical window to respond before an attack succeeds.

Platforms managing large-scale cryptographic operations can leverage AI for side-channel auditing. AI models can detect vulnerabilities like timing attacks or power consumption leaks before they’re exploited. Neural networks have shown dramatic speed improvements - up to 1,000× faster than brute-force methods - for optimizing key rates in quantum protocols. This makes them invaluable for fine-tuning security in production environments.

In practice, deploy machine learning models to monitor network traffic for anomalies. Metrics like handshake failure rates, unexpected drops in hybrid negotiation ratios, or spikes in connection errors can signal interference or attacks. For platforms like NanoGPT, where even slight latency impacts user experience and costs, AI-driven optimization ensures quantum resistance without compromising performance.
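Before training a dedicated model, a simple statistical baseline on these metrics is often a reasonable starting point. The sketch below flags a sample that deviates strongly from recent history using a z-score. It is a deliberately simple stand-in for the machine learning monitoring described above, and the threshold and the sample values are made up.

```python
from statistics import mean, stdev


def is_anomalous(history, current, threshold=3.0):
    """Flag `current` if it is more than `threshold` standard deviations
    away from the mean of recent samples."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return current != mu
    return abs(current - mu) / sigma > threshold


# Hypothetical hourly share of handshakes that negotiated hybrid key exchange.
hybrid_ratio_history = [0.94, 0.95, 0.96, 0.95, 0.94, 0.96]

normal_hour = is_anomalous(hybrid_ratio_history, 0.95)
suspicious_hour = is_anomalous(hybrid_ratio_history, 0.60)
```

A sudden drop in the hybrid negotiation ratio, as in the second sample, could point to a middlebox stripping the larger key shares or to a downgrade attempt, and is worth an alert either way.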

Post-quantum cryptography brings some hefty challenges, especially when it comes to performance and key size. One major issue is that the keys and signatures in these systems are significantly larger than those used in classical cryptography. For example, RSA-2048 signatures are about 256 bytes, but post-quantum options like ML-DSA and SLH-DSA can balloon to 2 KB or more. Some hash-based variants even reach a staggering 17 KB in size. The Classic McEliece-8192128 algorithm is another example, requiring public keys around 1.36 MB.

These larger keys and signatures put extra pressure on storage and bandwidth. AI platforms that handle encrypted model weights or process thousands of API requests per second may see increased network traffic, which can lead to packet fragmentation. This might require adjustments to Maximum Transmission Unit (MTU) sizes and TLS record limits. On top of that, lattice-based algorithms like ML-KEM add their own computational work: a single key encapsulation involves roughly 10,000 modular multiplications over 256-coefficient polynomials. On server-class CPUs this is usually fast, often comparable to elliptic-curve key exchange, but the added computation and memory footprint can still create bottlenecks for resource-constrained systems, such as IoT devices or edge AI platforms.
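The bandwidth effect is easy to estimate with back-of-the-envelope arithmetic. The sketch below uses the published sizes for X25519, ML-KEM-768, ML-DSA-44, and the fast SLH-DSA-128 variant, and assumes a 1,500-byte MTU with 40 bytes of IPv4 and TCP headers (no options). It ignores TLS record and handshake framing, so real numbers will be somewhat higher.

```python
import math

# Published object sizes in bytes.
X25519_SHARE = 32
MLKEM768_ENCAP_KEY = 1184   # sent by the client
MLKEM768_CIPHERTEXT = 1088  # sent by the server

SIGNATURE_BYTES = {
    "RSA-2048": 256,
    "ML-DSA-44": 2420,
    "SLH-DSA-128f": 17088,
}

# Hybrid key shares carry both the classical and the post-quantum part.
client_share = X25519_SHARE + MLKEM768_ENCAP_KEY   # 1216 bytes
server_share = X25519_SHARE + MLKEM768_CIPHERTEXT  # 1120 bytes


def packets_needed(payload_bytes: int, mtu: int = 1500, header_overhead: int = 40) -> int:
    """Minimum packets to carry a payload, assuming IPv4 + TCP headers only."""
    return math.ceil(payload_bytes / (mtu - header_overhead))


for name, size in SIGNATURE_BYTES.items():
    print(f"{name}: {size} bytes, {packets_needed(size)} packet(s)")
```

Under these assumptions the hybrid client key share (1,216 bytes) still fits in one packet on its own, an ML-DSA-44 signature needs two, and a 17 KB hash-based signature needs twelve, which is where fragmentation and TLS record limits start to matter.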

For platforms like NanoGPT, where fast response times and cost efficiency are critical, even slight increases in latency or CPU usage can ripple into bigger issues. Addressing these performance hurdles is essential to keep AI platforms running smoothly and efficiently.

AI itself offers some creative ways to tackle these post-quantum cryptography (PQC) challenges. Machine learning models, for instance, can dynamically adjust cryptographic parameters in real time based on network conditions and emerging threats. This kind of optimization has already demonstrated noticeable improvements in the speed of cryptographic operations.

AI also plays a key role in identifying and preventing side-channel attacks. While PQC algorithms are designed to resist quantum threats mathematically, their implementations can still leak information through things like power usage or timing variations. Attackers can exploit these vulnerabilities, but neural networks can detect subtle patterns in these leaks before they become an issue. Additionally, AI-driven anomaly detection tools can flag unusual query patterns or error rates that might indicate quantum-based attacks, such as Grover-style rapid credential trials. A great example of AI’s potential here is the ADAPT-GQE framework, which uses a transformer-based approach to speed up training data generation for complex systems by 234×.

"We encourage system administrators to start integrating them into their systems immediately, because full integration will take time." - Dustin Moody, PQC Project Head, NIST

Hardware solutions like FPGAs and ASICs can also help by speeding up modular arithmetic and easing memory bandwidth constraints. Meanwhile, AI-powered orchestration layers can automate the deployment of PQC across complex environments. These tools ensure that platforms like NanoGPT remain quantum-resistant while still delivering a seamless experience to users.

The quantum threat is no longer a distant possibility. Adversaries are already stockpiling encrypted data, anticipating the arrival of quantum computers between 2030 and 2035. For AI platforms managing sensitive user data and intellectual property, the time to act is now.

Shifting to post-quantum cryptography is a complex, multi-year process that involves a detailed inventory, protocol overhauls, and significant infrastructure updates. With NIST having finalized the first three post-quantum cryptography (PQC) standards on August 13, 2024, and 87% of enterprises still unprepared as of early 2026, the urgency is clear. Platforms like NanoGPT, which prioritize user privacy through local data storage, must adopt quantum-resistant encryption to protect their AI models and user data for the next two decades. Acting now not only ensures security but also creates a foundation for future competitiveness.

To start, implement hybrid key encapsulation mechanisms (KEMs) for immediate protection without disrupting operations. Conduct a cryptographic inventory, focus on securing high-value assets like model weights and training data, and integrate hybrid TLS for API traffic. Additionally, the quantum-safe digital asset market is expected to hit $16 trillion by 2030, making this transition not just a security imperative but also a strategic opportunity for growth.

The 'harvest now, decrypt later' (HNDL) risk is a growing concern in cybersecurity. It describes a scenario where attackers steal encrypted data today, intending to decrypt it in the future when quantum computers become powerful enough to break current cryptographic methods. This could compromise sensitive information like proprietary AI models, customer data, and private communications, creating serious long-term security challenges in a quantum-enabled future.

Systems that deal with sensitive information should be the first to implement post-quantum cryptography. This includes platforms managing encrypted messages, API tokens, and model weights. By focusing on these areas, organizations can protect critical data from potential quantum computing threats.

When integrating hybrid post-quantum cryptography (PQC) into API or TLS connections, there might be a small increase in computational demands, which could slightly extend connection times. However, with proper implementation, it strengthens security while keeping performance impacts minimal.