Feb 1, 2026

Role-Based Access Control (RBAC) is a practical way to protect sensitive AI-generated image data by restricting access based on job roles. This approach ensures that only authorized individuals or systems can interact with specific data, reducing risks like insider threats, data leaks, and misuse.

Here’s why RBAC matters:

RBAC works by assigning permissions to roles (e.g., "Data Scientist") instead of individuals, simplifying management and ensuring granular control over who can access what. For example:

Compared to other access methods like Discretionary Access Control (DAC) or Mandatory Access Control (MAC), RBAC is scalable and effective for managing complex AI workflows. It also helps prevent lateral movement in case of breaches, limiting potential damage.

To implement RBAC, classify your data, define and assign roles, and configure permissions.

Maintaining RBAC involves regular audits, monitoring access logs, and updating policies as roles or team structures change. Tools like NanoGPT support RBAC with features like API-key authentication, role-based model access, and local data storage options to enhance control over sensitive AI-generated images.

RBAC is a straightforward yet effective way to secure AI image data, safeguarding your organization from costly breaches and misuse.

RBAC, or Role-Based Access Control, operates on a straightforward concept: access levels are determined by roles. Instead of assigning permissions directly to individuals, permissions are linked to roles (like "Data Scientist" or "ML Engineer"), which are then assigned to users. As the FINOS AI Governance Framework explains, "Role-Based Access Control (RBAC) is a policy-neutral access control mechanism defined around roles and privileges".

For any access request to be approved, three conditions must be met: the user must have an assigned role, the role must be authorized, and the role must include the necessary permissions. If any of these conditions aren't satisfied, access is denied. This systematic approach ensures users only interact with AI-generated images or data relevant to their responsibilities, creating a solid foundation for secure operations.
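The three conditions above can be sketched as a small deny-by-default check. This is a minimal illustration, not a production authorization engine; the `Role` class, the `is_allowed` function, and the permission strings are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Role:
    name: str
    authorized: bool = True  # whether the role is currently approved for use
    permissions: set[str] = field(default_factory=set)


def is_allowed(assignments: dict[str, list[Role]], user: str, permission: str) -> bool:
    """Return True only if all three conditions hold for at least one role."""
    for role in assignments.get(user, []):    # 1. the user has an assigned role
        if not role.authorized:               # 2. the role is authorized
            continue
        if permission in role.permissions:    # 3. the role includes the permission
            return True
    return False                              # anything else is denied


# Hypothetical sample data
tester = Role("Model Tester", permissions={"images:read"})
retired = Role("Legacy Admin", authorized=False, permissions={"images:delete"})
assignments = {"dana": [tester], "lee": [retired]}
```

Here `dana` can read images, while `lee` is denied even though the retired role still lists a permission, because the role itself is no longer authorized.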

RBAC revolves around three main components:

Permissions are grouped into roles, which are then assigned to subjects - this includes both human users and non-human entities like MLOps pipelines, service accounts, or even AI models. Assignments are often made at the project or resource level. For instance, a "Data Scientist" role might have access to training datasets within a "Product Design" project but not in a "Medical Imaging" project.
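A project-scoped assignment like the one just described might look roughly like this. The subject names, the `svc-training-pipeline` service account, and the permission strings are assumptions made for illustration.

```python
# (subject, project) -> role name; subjects can be people or service accounts
ASSIGNMENTS = {
    ("alice", "Product Design"): "Data Scientist",
    ("svc-training-pipeline", "Product Design"): "Data Scientist",
}

ROLE_PERMISSIONS = {
    "Data Scientist": {"datasets:read", "datasets:write"},
}


def can(subject: str, project: str, permission: str) -> bool:
    """Check a permission inside one project; no assignment means no access."""
    role = ASSIGNMENTS.get((subject, project))
    return role is not None and permission in ROLE_PERMISSIONS.get(role, set())
```

Because the lookup key includes the project, the same person holds the role in "Product Design" and has no access at all in "Medical Imaging".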

In AI systems, permissions can get incredibly detailed. They might limit access to specific actions, like using a DALL-E playground for image generation or reading only certain subsets of training data. This granularity helps safeguard the confidentiality and integrity of data throughout its lifecycle.

When comparing access control methods, RBAC stands out for its scalability, especially in complex AI workflows involving diverse teams. Here's how it compares to other approaches:

The standout benefit of RBAC is its ability to scale. IBM highlights that "by limiting access to sensitive data, RBAC helps prevent both accidental data loss and intentional data breaches. Specifically, RBAC helps curtail lateral movement". If an attacker gains access to a legitimate account, their reach is restricted to what that account's role allows, reducing the risk of widespread damage.

| Method | How Access Is Determined | Best Use Case |
|---|---|---|
| RBAC | Based on predefined roles and job functions | Large organizations needing structured, scalable management |
| DAC | At the discretion of resource owners | Small teams or highly flexible environments |
| MAC | Centrally defined policies based on clearance levels | Secure environments like government or military systems |

For AI image data, RBAC ensures that data accessed through natural language queries remains within authorized boundaries. This level of control not only governs initial access but also manages what data can be retrieved and shared later. By securing every stage - from training datasets to final image outputs - RBAC proves to be a reliable method for managing access in AI systems.

3-Step RBAC Implementation Process for AI Image Data Security

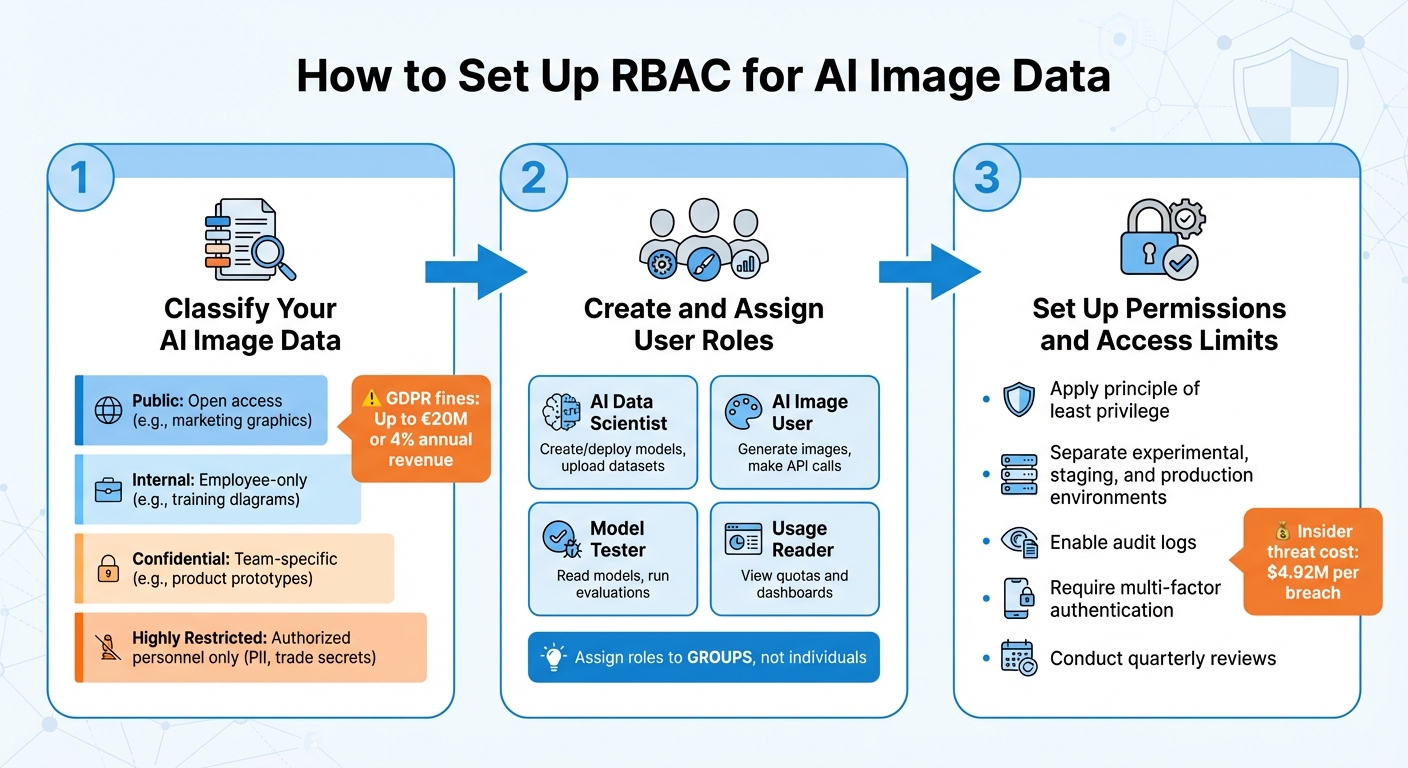

To implement Role-Based Access Control (RBAC) for AI image data, follow three key steps: classify your data, assign roles, and configure permissions carefully.

Start by categorizing your AI image data based on its sensitivity. Use the following four tiers:

Leverage AI tools to scan metadata and detect sensitive patterns, including personally identifiable information (PII) or proprietary data. Contextual clues also help - files stored in a "Legal" folder or created by Product R&D, for example, should be flagged as higher sensitivity.
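As a rough sketch of this kind of contextual flagging, the function below marks a file for a higher sensitivity tier based on its folder, its creating team, or an email-like string in its metadata. The `creator_team` metadata key and the single email regex are assumptions; a real scanner would cover far more PII patterns.

```python
import re

# Deliberately simple pattern: email addresses stand in for PII in general
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SENSITIVE_FOLDERS = {"legal"}
SENSITIVE_TEAMS = {"product r&d"}


def needs_higher_sensitivity(path: str, metadata: dict[str, str]) -> bool:
    """Flag a file if its location, creator, or metadata suggests sensitive content."""
    folders = {part.lower() for part in path.split("/")[:-1]}
    if folders & SENSITIVE_FOLDERS:
        return True
    if metadata.get("creator_team", "").lower() in SENSITIVE_TEAMS:
        return True
    return any(EMAIL_PATTERN.search(value) for value in metadata.values())
```

Flagged files would then be reviewed and placed in the appropriate tier rather than classified automatically.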

A practical example comes from Cybage Software Private Limited, which implemented RBAC for its "SmartPal" GenAI application in December 2024. Using SharePoint repositories, access was tailored: Sales and Management teams had full access to financial details, while others could only view masked data. PII masking ensured that unauthorized users couldn’t see client names or logos.

To cover all bases, maintain an inventory of AI-related assets, such as datasets, model files, and configurations. This ensures no data falls outside the RBAC framework. Failing to safeguard sensitive data could result in hefty GDPR fines - up to €20 million or 4% of total worldwide annual turnover, whichever is higher.

With your data categorized, the next step is defining user roles.

After classifying data, identify all entities needing access. This includes not only people - like Data Scientists or ML Engineers - but also non-human entities, such as AI models, pipelines, and service accounts.

Document each role’s specific access needs. For example, a "Model Tester" might need only read and evaluation permissions, while an "AI Data Scientist" requires broader access to create, deploy, and manage models. Use an access control matrix to map roles to data categories, clarifying which roles can access training datasets versus generated images.

| Role Name | Permissions/Capabilities | Typical User |
|---|---|---|
| AI Data Scientist | Create/deploy models, upload datasets, read/write files | ML Engineers, Data Scientists |
| AI Image User | Generate images, view deployments, make API calls | Business Analysts, End Users |
| Model Tester | Read models, request capabilities, run evaluations | QA Engineers, Auditors |
| Usage Reader | View quotas and usage dashboards | Finance, Project Managers |
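One way to keep such a matrix machine-readable is a plain mapping from role to permission set. The roles come from the table above; the `resource:action` permission strings are a hypothetical naming scheme, not one defined by any particular platform.

```python
ACCESS_MATRIX = {
    "AI Data Scientist": {
        "models:create", "models:deploy", "datasets:upload",
        "files:read", "files:write",
    },
    "AI Image User": {"images:generate", "deployments:view", "api:call"},
    "Model Tester": {"models:read", "capabilities:request", "evaluations:run"},
    "Usage Reader": {"quotas:view", "usage:view"},
}


def roles_with(permission: str) -> list[str]:
    """List every role that carries a given permission, for review purposes."""
    return sorted(role for role, perms in ACCESS_MATRIX.items() if permission in perms)
```

A helper like `roles_with` makes it easy to answer review questions such as "who can generate images?" directly from the matrix.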

To simplify management, assign roles to groups rather than individuals. For example, group users into "Research" or "Support" teams. Also, delegate admin roles to at least two people to avoid disruptions if one leaves the organization. Keep in mind that role changes may take up to 30 minutes to propagate across cloud platforms.

After assigning roles, configure permissions to follow the principle of least privilege. This means granting users only the access they need for their tasks, adding permissions only when absolutely necessary. As the FINOS AI Governance Framework puts it:

"Role-Based Access Control (RBAC) is a fundamental security mechanism designed to ensure that users, AI models, and other systems are granted access only to the specific data assets and functionalities necessary to perform their authorized tasks."

Implement safeguards like separating tasks so that the person requesting a change isn’t the one approving it. This reduces the risk of insider threats, which have an average breach cost of $4.92 million - higher than the overall average of $4.44 million.
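The requester/approver separation could be enforced with a check along these lines. The `ChangeRequest` class and `approve` function are illustrative names, and a real workflow would also verify that the approver holds an approving role.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChangeRequest:
    requester: str
    description: str
    approved_by: Optional[str] = None


def approve(request: ChangeRequest, approver: str) -> None:
    """Record an approval, refusing self-approval by the requester."""
    if approver == request.requester:
        raise PermissionError("requester cannot approve their own change")
    request.approved_by = approver
```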

Apply RBAC consistently across repositories, AI/ML platforms, APIs, and applications. For instance, in tools like NanoGPT, permissions should restrict who can generate images or access outputs from DALL-E or Stable Diffusion. Require multi-factor authentication for human users and managed credentials (like API keys) for non-human entities.

To minimize risks, separate experimental, staging, and production environments into distinct projects. Enable audit logs to track access attempts and conduct regular reviews - such as quarterly audits - to remove permissions for users who’ve changed roles or left the organization. Test access with non-owner accounts to confirm users only see what they need.

Setting up Role-Based Access Control (RBAC) is only the first step. Over time, permissions can drift - users may hold onto access they no longer need, outdated roles might linger, and unnoticed gaps can weaken security. Regular maintenance is critical to avoid these pitfalls and keep your RBAC policies effective. This involves consistently reviewing roles, monitoring access logs, and updating policies as your organization evolves.

Schedule quarterly reviews where data owners or managers verify all access rights. This helps ensure users still need their current permissions, catching issues like employees who’ve switched teams but retained old access, or service accounts that were never deactivated.
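To prepare for such a review, one option is to list accounts that have been idle for a long time, assuming your platform exposes a last-used timestamp per account. The 90-day threshold and the account names below are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def stale_accounts(last_used: dict[str, datetime], now: datetime,
                   max_idle_days: int = 90) -> list[str]:
    """Return accounts whose last recorded use is older than the idle threshold."""
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(name for name, used in last_used.items() if used < cutoff)


# Hypothetical sample data
review_date = datetime(2026, 2, 1, tzinfo=timezone.utc)
usage = {
    "svc-old-pipeline": datetime(2025, 9, 1, tzinfo=timezone.utc),
    "svc-image-api": datetime(2026, 1, 20, tzinfo=timezone.utc),
}
```

The output is a list of candidates for the reviewer to confirm, not an automatic revocation list.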

Stick to the principle of least privilege during these reviews. Users and AI models should only have the permissions necessary for their current tasks. Automate this process using your Identity Provider (IdP) with SCIM (System for Cross-domain Identity Management) to sync group memberships as personnel change. For example, if someone leaves the "Research" team, their access should update automatically without manual intervention.
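The core of such a sync is a reconciliation between the group membership the IdP reports and what the local system currently holds. The sketch below shows only that set logic; it does not implement the SCIM protocol itself, and the function name is hypothetical.

```python
def reconcile(idp_members: set[str], local_members: set[str]) -> tuple[set[str], set[str]]:
    """Compute who to add and who to remove so local membership matches the IdP."""
    to_add = idp_members - local_members
    to_remove = local_members - idp_members
    return to_add, to_remove
```

Treating the IdP as the source of truth means a departure from the "Research" group shows up in `to_remove` on the next sync without anyone editing permissions by hand.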

Keep your access control matrix up-to-date to reflect current roles and permissions. Be mindful of inherited permissions - for instance, a role granted at the organization level is typically inherited by every project beneath it, which can grant broader access than intended. Test these inheritance patterns periodically using a non-owner account to confirm they work as expected.

Act quickly to revoke unnecessary access when roles change or employees leave. Always ensure at least two people hold administrative roles - this avoids losing access to critical resources if a single admin departs unexpectedly. Also, be aware that role changes can take up to 30 minutes to propagate across systems, so plan accordingly.

Once roles are reviewed, the next step is to keep a close eye on access activity.

Tracking access logs in real-time is essential for spotting unusual activity. These logs should capture details like the identity of the actor (human or AI), the specific data accessed, the type of operation (read, write, delete), and a precise timestamp. The FINOS AI Governance Framework underscores the importance of this:

"All access attempts, successful or failed, should be logged to ensure auditability and support security monitoring."

Set up automated alerts for high-risk actions. For example, configure notifications whenever someone is assigned Organization Admin or Super Admin privileges. These roles carry significant authority, so any changes should trigger immediate scrutiny. As Google Cloud advises:

"Use Cloud Audit Logs to monitor for high-risk activity, such as accounts being granted high-risk roles like Organization Admin and Super Admin. Set up alerts for this type of activity."

Export your audit logs to external storage, like Cloud Storage, to maintain long-term records for compliance purposes. Use "last accessed" data to identify permissions or credentials that haven’t been used in months - these are candidates for removal. Pay special attention to service account keys and API credentials, auditing their usage to detect potential security breaches.
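The logging fields and the high-risk alert described above could be combined in a sketch like this. The record layout, the `grant_role` operation name, and the `granted_role` field are assumptions for illustration and do not mirror the Cloud Audit Logs schema.

```python
from datetime import datetime, timezone
from typing import Optional

HIGH_RISK_ROLES = {"Organization Admin", "Super Admin"}


def audit_record(actor: str, actor_type: str, resource: str, operation: str,
                 allowed: bool, granted_role: Optional[str] = None) -> dict:
    """Build one log entry; both allowed and denied attempts are recorded."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "actor_type": actor_type,      # "human" or "service"
        "resource": resource,
        "operation": operation,        # e.g. read, write, delete, grant_role
        "outcome": "allowed" if allowed else "denied",
        "granted_role": granted_role,
    }


def needs_alert(record: dict) -> bool:
    """Flag entries where a high-risk role was granted."""
    return (record["operation"] == "grant_role"
            and record["granted_role"] in HIGH_RISK_ROLES)
```

In practice the records would be shipped to your log pipeline and `needs_alert` would feed a notification channel.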

As your organization grows and evolves, so must your RBAC policies. Assign roles to groups rather than individuals and use SCIM with your IdP to automatically update group memberships. For instance, if your marketing team grows from five to fifteen members, you’ll only need to adjust the group membership, not individual permissions.
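A minimal sketch of group-based assignment, with hypothetical group, user, and role names: roles attach to the group, so onboarding someone only touches the membership set.

```python
GROUP_ROLES = {"marketing": {"AI Image User"}}
GROUP_MEMBERS = {"marketing": {"ana", "ben"}}


def roles_for(user: str) -> set[str]:
    """Collect the roles a user inherits from every group they belong to."""
    return {
        role
        for group, members in GROUP_MEMBERS.items()
        if user in members
        for role in GROUP_ROLES.get(group, set())
    }
```

Adding a new hire to `GROUP_MEMBERS["marketing"]` is enough for `roles_for` to return the team's roles; no per-user permission entry is created.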

When modifying policies through scripts, rely on unique role IDs rather than role names. This ensures your automation remains functional even if a role name changes - for example, if "Data Scientist" becomes "ML Researcher".
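The difference is easy to see in a small sketch where bindings reference a stable ID and the display name is just an attribute. The ID format `role-7f3a` and the data layout are made up for the example.

```python
ROLES = {
    "role-7f3a": {"name": "Data Scientist", "permissions": {"datasets:read"}},
}

# Bindings point at the stable ID, never at the display name
BINDINGS = {"research-team": "role-7f3a"}


def permissions_for(group: str) -> set[str]:
    """Resolve a group's permissions through the role ID."""
    return ROLES[BINDINGS[group]]["permissions"]


def rename_role(role_id: str, new_name: str) -> None:
    """Change only the display name; bindings are unaffected."""
    ROLES[role_id]["name"] = new_name
```

After `rename_role("role-7f3a", "ML Researcher")`, the binding for `research-team` still resolves, whereas a script keyed on the old name would have failed.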

Before rolling out any policy changes, test them with a Policy Simulator. This prevents accidental disruptions to necessary access. For temporary elevated permissions, consider tools like Privileged Identity Management, which provide Just-In-Time (JIT) access. These permissions automatically expire after the task is completed, eliminating the need for manual cleanup. Additionally, limit the number of subscription owners to three or fewer to reduce risks tied to compromised high-level accounts.
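The idea behind JIT access can be illustrated with grants that carry their own expiry. This sketch uses a fixed time window as a simplification; it is not how Privileged Identity Management is implemented, and the `JitGrants` class is a hypothetical name.

```python
from datetime import datetime, timedelta, timezone


class JitGrants:
    """Track temporary role grants that lapse on their own."""

    def __init__(self) -> None:
        self._expiry: dict[tuple[str, str], datetime] = {}

    def grant(self, user: str, role: str, now: datetime, minutes: int) -> None:
        # Store the moment the elevated access stops being valid
        self._expiry[(user, role)] = now + timedelta(minutes=minutes)

    def is_active(self, user: str, role: str, now: datetime) -> bool:
        expiry = self._expiry.get((user, role))
        return expiry is not None and now < expiry
```

Because every check compares against the stored expiry, no cleanup job is needed for the access to end.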

NanoGPT integrates Role-Based Access Control (RBAC) through API-key authentication and local data storage. By using `Authorization: Bearer <API_KEY>` headers, you can control access to image generation endpoints. This setup lets you assign separate API keys for different roles or projects, ensuring that access to your account balance and model capabilities is limited to authorized users.
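A per-role key setup might be wired up roughly as follows. The role names and environment variable names are hypothetical; only the `Authorization: Bearer` header format comes from the text above.

```python
import os
from collections.abc import Mapping

# Hypothetical mapping from internal role to the env var holding that role's key
ROLE_KEY_ENV = {
    "image-user": "NANOGPT_KEY_IMAGE_USER",
    "data-scientist": "NANOGPT_KEY_DATA_SCIENTIST",
}


def auth_headers(role: str, env: Mapping[str, str] = os.environ) -> dict[str, str]:
    """Build request headers using the API key provisioned for a role."""
    var = ROLE_KEY_ENV.get(role)
    if var is None or var not in env:
        raise PermissionError(f"no API key provisioned for role {role!r}")
    return {"Authorization": f"Bearer {env[var]}"}
```

Keeping keys in the environment rather than in source code also makes it simpler to rotate one role's key without touching the others.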

The `user` field in API requests acts as a unique identifier for end-users, helping you monitor and restrict usage at an individual level. Alongside this, data like `cost` and `remainingBalance` - included in every API response - enables you to audit activity and verify that it aligns with assigned roles and budgets. Considering that 23% of companies have reported incidents where AI agents were tricked into revealing credentials, such tracking is critical for identifying unusual behavior. Let’s now explore how to restrict access to specific AI models and image formats.

NanoGPT’s API-level controls allow you to assign model access based on user roles. For instance, you can map specific roles to certain model IDs in your RBAC system. Junior team members might be limited to basic models like `hidream`, while senior staff could access premium models such as `flux-kontext` or `gpt-4o-image`. This approach reduces unauthorized usage of advanced models and minimizes potential security risks.
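On your side of the API, the role-to-model mapping could be enforced before any request is sent. The `junior` and `senior` role names and the `prompt` field are assumptions; the model IDs and the `user` field are the ones mentioned in this article.

```python
ROLE_MODELS = {
    "junior": {"hidream"},
    "senior": {"hidream", "flux-kontext", "gpt-4o-image"},
}


def build_payload(role: str, model: str, prompt: str, end_user: str) -> dict[str, str]:
    """Return a request body only if the role is allowed to use the model."""
    if model not in ROLE_MODELS.get(role, set()):
        raise PermissionError(f"role {role!r} may not use model {model!r}")
    # "user" identifies the end-user so usage can be audited per person
    return {"model": model, "prompt": prompt, "user": end_user}
```

Rejecting the request locally means a disallowed model never reaches the API and never touches the account balance.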

When generating images, NanoGPT provides two output options: base64-encoded strings (`b64_json`) or time-limited signed URLs (`url`), which expire after about one hour. For sensitive data, `b64_json` is preferable since it avoids exposure via public URLs, even briefly. To enhance security further, regularly rotate Bearer tokens to reduce the risk of credential theft. This is especially important given projections that 75% of AI security incidents by 2025 will stem from unauthorized access. Additionally, NanoGPT offers a local storage option to maintain tighter control over sensitive image data.

NanoGPT’s local storage feature strengthens RBAC by keeping image data within your organization’s infrastructure. Instead of relying on the platform’s cloud storage, you can request images as base64 strings, ensuring they are delivered directly to your local environment without being persistently stored on NanoGPT’s servers.

For signed URLs, immediate download is crucial since these links expire within 60 minutes. Once downloaded, store the images in RBAC-protected directories on your infrastructure, where access can be managed based on user roles. NanoGPT’s pay-as-you-go model complements this setup by offering real-time cost tracking, allowing you to manage API keys and user identifiers effectively. This ensures that only authorized roles can perform actions that impact your account balance.
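For the base64 path, local storage can be as simple as decoding the string and writing it with owner-only file permissions. This is a sketch under assumptions: the `save_image` helper is hypothetical, it takes the base64 string directly without assuming any response layout, and directory-level role controls still have to come from your own infrastructure.

```python
import base64
import os
from pathlib import Path


def save_image(b64_data: str, directory: Path, filename: str) -> Path:
    """Decode a base64 image and write it to a restricted local file."""
    directory.mkdir(parents=True, exist_ok=True)
    target = directory / filename
    # 0o600 requests owner-only read/write on POSIX; Windows ignores most of these bits
    fd = os.open(target, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "wb") as handle:
        handle.write(base64.b64decode(b64_data))
    return target
```

Images fetched through a signed URL can be written with the same approach once downloaded, as long as that happens before the link expires.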

RBAC plays a key role in stopping unauthorized access to AI-generated images by assigning permissions based on specific job roles. When paired with Segregation of Duties, it ensures no single individual can oversee all aspects of image generation, storage, and distribution. This layered approach is a crucial defense against insider threats and potential misuse of data.

NanoGPT employs advanced RBAC methods designed for scalable, user-specific controls. These include Bearer token authentication, API-level restrictions, and base64-encoded image delivery for local storage. These measures safeguard workflows by limiting access to premium model features and account balances to authorized users only. Additionally, role-specific API key assignments ensure that only designated team members can generate images using premium models or view sensitive account information.

Audit logging adds another layer of protection by providing visibility into all access attempts - successful or otherwise. This tracking helps administrators spot unusual activity, such as potential credential theft or unauthorized access, allowing for swift action when needed. Together with comprehensive monitoring, these measures enhance overall security.

Role-based access control (RBAC) strengthens the security of AI-generated image data by assigning permissions based on clearly defined user roles. This approach ensures that individuals can only access the data and tools necessary for their specific responsibilities, significantly lowering the chances of unauthorized access or accidental misuse.

What sets RBAC apart from other access control methods, like discretionary or mandatory access control, is its structured and scalable design. By clearly outlining roles and restricting access accordingly, organizations can safeguard sensitive AI-generated images without sacrificing efficiency in their operations.

Role-based access control (RBAC) helps secure AI-generated image data by limiting access to only those who need it. The idea is simple: assign permissions based on specific job responsibilities. For instance, a data scientist might require access to analyze image datasets, while an administrator handles system configurations.

To make RBAC work effectively, start by defining clear roles and matching them with the appropriate permissions. Use the principle of least privilege - this means giving users only the access they need to do their job, nothing more. Over time, roles or security needs may change, so it’s important to review and update permissions regularly.

Another key step is tracking access logs. Monitoring these logs can help spot any unauthorized activity, ensuring your data stays protected and its integrity is maintained. Following these practices can significantly reduce the risk of misuse or unauthorized access to your AI-generated image data.

Keeping role-based access control (RBAC) policies up to date is crucial for maintaining security and managing access effectively. Over time, users can accumulate permissions they no longer need - a problem known as privilege creep. This can create security gaps, leaving your systems vulnerable to misuse or breaches.

Regularly reviewing and updating roles, permissions, and user assignments ensures that access stays aligned with current job responsibilities and security requirements.

To make this process more efficient, consider these strategies:

These practices help minimize risks, maintain compliance with security standards, and protect sensitive AI-generated image data from falling into the wrong hands.