Jan 7, 2026

Real-time data streams are transforming industries by enabling instant decision-making in areas like autonomous vehicles, fraud detection, and healthcare. But this speed comes with serious security risks. Encryption delays, data leakage, and vulnerabilities in autonomous systems all pose threats, especially as global data volume, projected to reach 175 zettabytes by 2025, continues to grow.

To mitigate these risks, organizations should focus on selective encryption, edge computing, robust access controls, and continuous monitoring. Trusted Execution Environments and obfuscation techniques are practical solutions for balancing security with performance. Security isn't just a one-time fix - it's a process requiring constant vigilance.

Encryption is essential for securing real-time data streams, but it comes with a trade-off: it consumes CPU and GPU resources, which can introduce processing delays. For AI systems that rely on instant decision-making, even minor delays can cause significant problems. In an autonomous vehicle, for instance, a delay of just a few seconds in data analysis could render the system ineffective.

The issue becomes more pronounced when data has to travel long distances. For example, a data packet sent from Farmingdale, NY, to a server in Trenton, NJ, takes about 10-15 milliseconds, but if the same packet originates from Denver, CO, the delay can stretch to 50 milliseconds. Encryption overhead stacks on top of this existing network latency and slows transmission further, and as global data volumes keep growing, the problem only scales.
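To make the arithmetic concrete, here is a small latency-budget sketch. The two network figures come from the examples above; the 100 ms budget, the 2 ms encryption overhead, and the 40 ms processing time are hypothetical placeholders, not measurements.

```python
# Illustrative latency budget for one real-time message.
# Network figures come from the examples above; every other number
# is a hypothetical placeholder, not a measurement.

BUDGET_MS = 100.0             # assumed end-to-end budget per decision
ENCRYPTION_OVERHEAD_MS = 2.0  # assumed encrypt + decrypt cost per message
PROCESSING_MS = 40.0          # assumed analytics / inference time


def total_latency_ms(network_ms: float,
                     encryption_ms: float = ENCRYPTION_OVERHEAD_MS,
                     processing_ms: float = PROCESSING_MS) -> float:
    """Sum the latency contributions along one path."""
    return network_ms + encryption_ms + processing_ms


def headroom_ms(network_ms: float, budget_ms: float = BUDGET_MS) -> float:
    """Budget left over; a negative value means the budget is exceeded."""
    return budget_ms - total_latency_ms(network_ms)


for origin, network_ms in [("Farmingdale, NY", 15.0), ("Denver, CO", 50.0)]:
    print(f"{origin}: {total_latency_ms(network_ms):.1f} ms total, "
          f"{headroom_ms(network_ms):.1f} ms headroom")
```

Under these assumptions the Denver path keeps only 8 ms of headroom, so a few extra milliseconds of per-hop cryptographic or serialization work would push it over budget.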

Encryption doesn't just slow transmission; it adds computational work at every step. Encrypting and decrypting data consumes processing power, and that cryptographic work is the primary source of the added delay. Disk I/O adds more: writing encrypted data to storage and reading it back is slower than processing data directly in memory. Network bottlenecks caused by physical distance and serialization overhead (e.g., converting data between formats when passing it to Python user-defined functions) also contribute to latency.

To address these challenges, many organizations are turning to in-memory frameworks like Apache Flink and Spark Streaming, which reduce disk I/O delays by keeping working data in memory. Another effective strategy is edge computing, which processes data closer to its source. By filtering and preprocessing data locally, edge computing reduces the need to send raw data to the cloud, cutting network latency. Companies like Apple and Samsung have adopted this approach, integrating AI features that process data directly on users' devices. This preserves user privacy and avoids the delays tied to cloud-based processing.
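A minimal sketch of edge-side preprocessing, assuming a node that receives windows of numeric sensor readings: it summarizes each window locally and forwards only the summary plus readings above a threshold. `EdgeSummary`, `summarize_window`, and the threshold value are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List


@dataclass
class EdgeSummary:
    """What the edge node forwards instead of the raw window."""
    count: int
    mean_value: float
    max_value: float
    anomalies: List[float] = field(default_factory=list)


def summarize_window(readings: List[float], threshold: float) -> EdgeSummary:
    """Aggregate a window locally; only readings above `threshold`
    are kept individually (a hypothetical anomaly rule)."""
    if not readings:
        raise ValueError("empty window")
    return EdgeSummary(
        count=len(readings),
        mean_value=mean(readings),
        max_value=max(readings),
        anomalies=[r for r in readings if r > threshold],
    )


window = [20.1, 20.3, 19.9, 35.7, 20.0]
summary = summarize_window(window, threshold=30.0)
print(summary)  # one record goes upstream instead of five raw readings
```

The design choice is to trade raw fidelity for bandwidth: the cloud still sees every out-of-range value, but routine readings collapse into a count, mean, and maximum.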

Finding the right balance between security and performance is a constant challenge. Encryption choices directly impact both confidentiality and speed. End-to-end encryption (E2EE) offers the highest level of security, but it creates a dilemma for AI systems that need access to plaintext data for tasks like processing and training. As researchers from New York University explain:

Using end-to-end encrypted content to train shared AI models is not compatible with E2EE.

This incompatibility forces organizations to make some tough decisions.

Selective encryption is one compromise. For instance, messaging apps often use selective encryption for features like link previews, leaving certain parts unencrypted for processing. While this improves speed and user experience, it technically violates the strict confidentiality of E2EE. Other approaches, like Homomorphic Encryption, allow computations on encrypted data but come with significant performance costs. Trusted Execution Environments (TEEs), on the other hand, offer near-native performance with hardware-enforced isolation, though they lack the full cryptographic guarantees of pure encryption.

For real-time AI in E2EE systems, endpoint-local processing is often the most practical solution. Processing data entirely on the user’s device avoids breaking encryption and eliminates cloud-related latency. However, this approach is limited by the device’s computational capabilities.

Real-time data streams come with vulnerabilities that traditional security systems often fail to catch. With unstructured data making up 90% of organizational data, tracking sensitive information becomes a significant challenge. This creates a perfect storm for data leakage, especially when AI models process information faster than security teams can keep up. These risks only add to the encryption trade-offs discussed earlier.

Large Language Models (LLMs) tend to memorize sensitive data, and the risk grows with model size and the volume of training data. When LLMs handle real-time streams, they could expose Personally Identifiable Information (PII), either during regular interactions or through targeted extraction attacks.

Retrieval-Augmented Generation (RAG) pipelines also present risks. Without strict access controls, they can unintentionally expose restricted documents. Microsoft’s Participant-Aware Access Control framework highlights this issue. Researchers at Microsoft noted:

Models fine-tuned on sensitive information - such as HR records, internal policies or high-impact business strategies - may inadvertently reveal this information when users interact with the AI tools... particularly in environments with heterogeneous access privileges.

Another growing concern is Cross-Prompt Injection Attacks (XPIA), which can reveal sensitive data. This risk becomes more pronounced as organizations move from human-reviewed processes to fully autonomous systems, removing critical manual oversight.

Converting unstructured files into vector embeddings for semantic searches adds another layer of vulnerability. PII snippets can leak during these searches, and while defenses like prompt sanitization and output filtering exist, they are not foolproof. Sophisticated attackers can still bypass these measures using advanced extraction techniques.
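A minimal sketch of pre-ingestion sanitization: redact obvious PII patterns from text chunks before they are embedded. The regular expressions are illustrative assumptions, far from exhaustive, and, as noted above, pattern-based filters like these can be bypassed by a determined attacker.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13-16 digits
    "US_PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace every match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


chunk = "Contact alice@example.com or 555-123-4567; card 4111 1111 1111 1111."
print(redact(chunk))  # the sanitized text is what gets embedded
```

Typed placeholders keep the chunk useful for semantic search (the model still "knows" an email was there) without storing the value in the vector index.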

Closing these security gaps is essential for protecting real-time data streams in AI systems.

High-speed data streams also leak metadata, such as packet sizes and timing, that attackers can exploit to infer sensitive information even when the traffic is TLS-encrypted. Researchers Geoff McDonald and Jonathan Bar Or demonstrated this with an attack called "Whisper Leak", which classified the topics of user prompts from encrypted LLM streaming traffic with near-perfect accuracy, often exceeding 98% AUPRC (area under the precision-recall curve). As they explained:

This industry-wide vulnerability poses significant risks for users under network surveillance by ISPs, governments, or local adversaries.

A lack of robust governance frameworks exacerbates these issues. Alarmingly, 48% of global CISOs have expressed concerns about AI-related security risks, yet sensitive data is still frequently processed without proper access controls or monitoring. The problem worsens when organizations rely on synthetic data streams to protect privacy, because these streams are often vulnerable to membership inference attacks; the "TraceBleed" attack, for example, has outperformed earlier baselines by 172%. Adding differential privacy can harden synthetic data, but it can also degrade data quality significantly.

To mitigate these risks, organizations must adopt deterministic access controls that enforce permissions at every stage of the data pipeline. These controls should be paired with context-aware firewalls, pre-ingestion data sanitization, and real-time monitoring to ensure that the speed of processing doesn’t compromise security.
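Below is a minimal sketch of a deterministic, deny-by-default permission check applied to retrieved documents before they reach the model. The `Document` type and the group names are hypothetical; the point is that the check runs in ordinary code rather than relying on the model to withhold content.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: FrozenSet[str]  # an empty set means nobody may read it


def authorize(user_groups: Set[str], docs: List[Document]) -> List[Document]:
    """Deny by default: keep a document only if the user shares a group with it."""
    return [d for d in docs if d.allowed_groups & user_groups]


corpus = [
    Document("handbook", "Public HR handbook", frozenset({"all-staff"})),
    Document("salaries", "Salary bands", frozenset({"hr"})),
    Document("draft", "Unlabeled draft", frozenset()),
]

# In a pipeline the same check would run at ingestion, retrieval, and prompt assembly.
visible = authorize({"all-staff", "engineering"}, corpus)
print([d.doc_id for d in visible])  # ['handbook']
```

Because the unlabeled draft has no allowed groups, it is invisible to everyone until someone explicitly grants access, which is the fail-closed behavior the paragraph above calls for.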

Autonomous AI agents bring a unique set of security challenges, especially when handling real-time data streams. Unlike traditional software, these systems make decisions on the fly, so a single compromised input can trigger a chain reaction of failures. The shift from human oversight to fully autonomous operations has removed a critical layer of review, making these vulnerabilities even more concerning. These risks are no longer just theoretical: published attacks and research demonstrations have confirmed how serious they are.

Recent attacks have exposed the dangers of how autonomous agents handle untrusted data. For example, in February 2025, researchers from the University of Maryland and NVIDIA exploited the ChemCrow agent by embedding a malicious arXiv paper. The paper manipulated the agent into turning benign instructions into a recipe for a toxic nerve gas.

Similar risks have surfaced in financial systems. In October 2025, Palo Alto Networks' Unit 42 demonstrated an "Agent Session Smuggling" attack. Here, a malicious research assistant exploited stateful communication protocols to trigger an unauthorized trade. Researchers Jay Chen and Royce Lu explained the danger:

A rogue agent is a far more dynamic threat. It can hold a conversation, adapt its strategy and build a false sense of trust over multiple interactions.

The scale of these vulnerabilities became evident during a large-scale red-teaming competition in early 2025. Participants launched 1.8 million prompt injection attacks against 22 advanced AI agents, producing 60,000 policy violations. Most agents committed a violation within just 10–100 queries, and indirect prompt injections succeeded 27.1% of the time.

Further testing revealed additional flaws in agents like Anthropic's Computer Use agent and the MultiOn web agent. Researchers used Reddit posts to redirect these agents to malicious sites, causing them to leak sensitive user data - including credit card numbers - in 10 out of 10 trials. These agents mistakenly trusted platforms like Reddit, assuming the data was safe to process.

The lack of runtime guardrails exacerbates these security challenges. Many autonomous agents operate without mechanisms to verify the integrity of real-time data streams before acting on them. NVIDIA researcher Shaona Ghosh highlighted this issue:

Safety and security are not merely fixed attributes of individual models but also emergent properties arising from the dynamic interactions among models, orchestrators, tools, and data.

Non-deterministic behavior in these agents leads to unpredictable outcomes, making security failures difficult to identify and resolve. This unpredictability also leaves systems open to unintended control amplification. Just as encryption overhead compounds existing network latency, the absence of runtime safeguards compounds these security risks.

To address these issues, organizations need to implement robust technical controls, such as runtime guardrails that verify the integrity of incoming data before an agent acts on it.

Without these safeguards, autonomous systems remain vulnerable to manipulation and data integrity failures. Continuous validation and monitoring are essential for securing these agents in real-world applications.
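A runtime guardrail can take the form of a gate between the agent and its tools: only allowlisted tools run, and high-risk actions require explicit human approval. The sketch below assumes hypothetical tool names, risk tiers, and an `approve` callback standing in for a human reviewer; it is one possible shape for such a control, not a reference design.

```python
from typing import Any, Callable, Dict

# Hypothetical allowlist: tool name -> risk tier.
TOOL_RISK = {"search_docs": "low", "send_email": "high", "execute_trade": "high"}


class ToolCallBlocked(Exception):
    """Raised when the guardrail refuses a tool call."""


def guarded_call(tool: str, args: Dict[str, Any],
                 tools: Dict[str, Callable[..., Any]],
                 approve: Callable[[str, Dict[str, Any]], bool]) -> Any:
    """Execute a tool call only if it passes the runtime checks."""
    risk = TOOL_RISK.get(tool)
    if risk is None or tool not in tools:
        raise ToolCallBlocked(f"{tool!r} is not allowlisted")
    if risk == "high" and not approve(tool, args):
        raise ToolCallBlocked(f"{tool!r} requires human approval")
    return tools[tool](**args)


def search_docs(query: str) -> str:
    return f"results for {query}"


def execute_trade(symbol: str, qty: int) -> str:
    return f"bought {qty} {symbol}"


def deny_all(tool: str, args: Dict[str, Any]) -> bool:
    """Stand-in for a human reviewer who rejects everything."""
    return False


TOOLS = {"search_docs": search_docs, "execute_trade": execute_trade}
print(guarded_call("search_docs", {"query": "Q3 policy"}, TOOLS, deny_all))
try:
    guarded_call("execute_trade", {"symbol": "ACME", "qty": 100}, TOOLS, deny_all)
except ToolCallBlocked as exc:
    print("blocked:", exc)
```

The gate sits outside the model, so a prompt injection that convinces the agent to request a trade still cannot execute it without the out-of-band approval.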

Real-time data streams don't just face threats from within an organization; they're also vulnerable to external risks. One major concern is the involvement of third-party vendors. When businesses depend on external AI platforms and services, they take on risks that are often beyond their direct control. To put this into perspective, 98% of organizations have at least one third-party partner that has experienced a breach, and 61% reported a third-party vendor breach in 2023. With 60% of organizations working with more than 1,000 third-party vendors, the exposure multiplies quickly. These external threats add another layer of complexity to the encryption and agent-related challenges discussed above.

The supply chain itself has become a direct target for attackers. By tampering with open-source pre-trained models, bad actors can inject misinformation or malicious code that spreads across the supply chain. A documented example of this is the "PoisonGPT" case study within the MITRE ATLAS framework. It revealed how attackers compromised third-party resources to distribute false information and create harmful content through manipulated large language models.
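A basic countermeasure against tampered pre-trained models is to pin the SHA-256 digest of every third-party artifact and refuse to load anything that does not match. The sketch below uses only Python's standard library; the pinned digest you compare against is assumed to come from a trusted channel, such as the publisher's signed release notes.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large model weights need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, pinned_hex: str) -> None:
    """Raise ValueError if the artifact's digest differs from the pinned value."""
    actual = sha256_file(path)
    # Constant-time comparison: not strictly needed for public digests, but a good habit.
    if not hmac.compare_digest(actual, pinned_hex.lower()):
        raise ValueError(f"digest mismatch for {path}: {actual}")
```

A loader would call something like `verify_artifact(Path("models/weights.bin"), PINNED_SHA256)` before deserializing anything; both the path and the `PINNED_SHA256` constant here are placeholders. Digest pinning only proves the file is the one you expected, so it complements, rather than replaces, vetting the publisher.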

The OmniGPT breach in February 2025 showcased the devastating effects of such vulnerabilities. In this incident, a threat actor claimed to have leaked 34 million user conversations and 30,000 personal records, including sensitive corporate discussions, billing details, and user credentials. Beyond the immediate data exposure, the breach underscored the dangers of model poisoning - leaked training data could be weaponized to influence future AI responses. Alarmingly, the AI-driven cybercrime market is expected to balloon from $24.82 billion in 2024 to $146.5 billion by 2034.

Nation-state actors have also been quick to exploit these weaknesses. Advanced Persistent Threat (APT) groups from countries like Iran, China, North Korea, and Russia are increasingly leveraging AI for tasks like reconnaissance and automating malicious payload delivery. A report by Google on the misuse of generative AI highlighted:

Rather than enabling disruptive change, generative AI allows threat actors to move faster and at higher volume.

The growing integration of AI into tools like Microsoft 365 and Google Workspace has significantly expanded the attack surface. Emerging technologies, such as "Shadow MCP (Model Context Protocol) Servers", further complicate matters. These servers allow AI systems to execute commands, modify files, and send messages without proper organizational oversight, creating serious security gaps. Unsurprisingly, 54% of CISOs identify AI as a major security risk on widely used collaboration platforms like Slack, Teams, and Microsoft 365.

Static security questionnaires are no longer enough to address these risks. Instead, organizations must adopt continuous monitoring and threat response strategies. Monitoring both the internet and dark web for signs of vulnerabilities, data breaches, or leaked credentials is essential. For instance, the CrowdStrike incident on July 19, 2024, highlighted the importance of strong "Threat and Vulnerability Response" strategies to mitigate risks tied to third-party software updates.

To validate third-party data handling effectively, organizations need ongoing verification measures rather than one-time assurances.

Ultimately, security is a shared responsibility. Organizations cannot simply rely on third-party providers to handle everything. They must actively verify that their partners meet security obligations, particularly when dealing with real-time data streams. As Bruce Schneier aptly put it:

Security is a process, not a product.

Security Control Technologies for Real-Time Data Streams: Performance vs Protection

Securing real-time data streams requires a mix of cryptography, privacy measures, and constant monitoring. These strategies address vulnerabilities found in both autonomous systems and third-party risks. Research suggests that no single tool can fully solve AI security challenges - organizations need a flexible, layered approach.

Real-time data demands constant oversight; static security checks just don't cut it. Companies need to integrate verifiability and integrity checks throughout the AI pipeline, confirming that inference runs on the intended model and that training data meets security standards. Trusted Execution Environments (TEEs) play a key role here, enabling ongoing verification of sensitive computations at the hardware level.

Side-channel attacks pose a serious risk. For example, remote timing attacks can pinpoint sensitive topics with alarming accuracy.

In response to such threats, Microsoft and OpenAI introduced obfuscation in November 2025. By adding random, variable-length text under an "obfuscation" key in streaming responses, they reduced the risk of topic inference attacks. Similarly, Mistral added a "p" parameter to their API responses, injecting noise to prevent attackers from reconstructing plaintext tokens from encrypted packet sizes.
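The obfuscation idea can be sketched as follows: each streamed chunk gets a random-length filler field so that encrypted packet sizes no longer track token lengths. The field name `obfuscation` follows the description above, but `delta`, the padding bounds, and the overall payload shape are assumptions; this does not reproduce any provider's actual wire format.

```python
import json
import secrets
import string

ALPHABET = string.ascii_letters + string.digits


def obfuscate_chunk(token_text: str, min_pad: int = 16, max_pad: int = 64) -> str:
    """Serialize one streaming chunk with random-length padding.

    The client ignores the "obfuscation" field; it exists only to blur
    the relationship between ciphertext size and token length.
    """
    pad_len = min_pad + secrets.randbelow(max_pad - min_pad + 1)
    filler = "".join(secrets.choice(ALPHABET) for _ in range(pad_len))
    return json.dumps({"delta": token_text, "obfuscation": filler})


for token in ["The", " patient", " reported"]:
    print(len(obfuscate_chunk(token)))  # sizes vary from run to run
```

Random padding raises the cost of size-based inference at the price of extra bandwidth; on its own it does not hide inter-packet timing, which is why providers combine it with other measures.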

Beyond obfuscation, automated tools for testing factual accuracy and spotting vulnerabilities are essential. Zero-Knowledge Proofs allow third parties to confirm that model inference happened correctly without exposing sensitive data or proprietary model details. As cybersecurity expert Mihai Christodorescu pointed out:

The collective experience of decades of cybersecurity research and practice shows that [hardening a single model] is insufficient... continuous improvement of security posture is a tried-and-tested approach.

These continuous testing measures naturally lead to the importance of robust access controls to limit privilege-based vulnerabilities.

Traditional Role-Based Access Control (RBAC) isn't enough for AI systems. Organizations need to adopt the principle of least agency, restricting agents from chaining actions that may seem harmless individually but could collectively bypass safeguards or escalate privileges. This is a growing concern, as nearly half of global CISOs report increasing security risks tied to AI.
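Least agency can be enforced at the level of action chains rather than single calls. The sketch below tracks the capabilities an agent has already exercised in a session and blocks any step that would complete a forbidden combination, such as reading secrets and then sending data externally. The capability labels and forbidden pairs are hypothetical examples.

```python
from typing import FrozenSet, List, Set

# Hypothetical capability pairs that are harmless alone but risky together.
FORBIDDEN_COMBINATIONS: List[FrozenSet[str]] = [
    frozenset({"read_secrets", "send_external"}),
    frozenset({"modify_permissions", "execute_code"}),
]


class AgentSession:
    """Tracks the capabilities an agent has exercised in one session."""

    def __init__(self) -> None:
        self.used: Set[str] = set()

    def request(self, capability: str) -> bool:
        """Allow a step unless it would complete a forbidden combination."""
        prospective = self.used | {capability}
        if any(combo <= prospective for combo in FORBIDDEN_COMBINATIONS):
            return False
        self.used.add(capability)
        return True


session = AgentSession()
print(session.request("read_secrets"))   # True: harmless on its own
print(session.request("summarize"))      # True
print(session.request("send_external"))  # False: would complete a forbidden pair
```

A per-call RBAC check would have allowed each of these steps individually; tracking the session's cumulative capabilities is what catches the escalation.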

Strengthening protocols begins with standardization. The Model Context Protocol (MCP) offers a Host-Client-Server architecture that simplifies dynamic tool discovery and execution, reducing the need for manual API tracking. As Vineeth Sai Narajala from Amazon Web Services emphasized:

If we fail to adapt our threat models and defenses to account for their unique architecture and behavior, we risk turning a powerful new tool into a serious enterprise liability.

For Retrieval-Augmented Generation (RAG) systems, Private Information Retrieval (PIR) ensures external databases can't infer specific queries or usage patterns. Identity coherence is crucial as well, establishing clear boundaries between agent, user, and system identities to prevent spoofing and ensure proper authorization in multi-agent setups. Regular TEE attestations verify that execution environments remain secure, while monitoring inter-arrival times can detect exploitation attempts, such as those targeting speculative decoding techniques.
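Monitoring inter-arrival times can start with something as simple as a rolling z-score: flag any gap between events that deviates sharply from the recent baseline. The window size, minimum baseline, and threshold of 3 standard deviations are assumptions to tune per stream, and real detectors for attacks on speculative decoding would need richer features.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Optional


class InterArrivalMonitor:
    """Flag events whose gap from the previous event deviates from the baseline."""

    def __init__(self, window: int = 50, threshold: float = 3.0,
                 min_baseline: int = 10) -> None:
        self.gaps: Deque[float] = deque(maxlen=window)  # rolling baseline of gaps
        self.threshold = threshold                       # z-score cut-off
        self.min_baseline = min_baseline                 # gaps needed before judging
        self.last_ts: Optional[float] = None

    def observe(self, ts: float) -> bool:
        """Record an event timestamp in seconds; return True if its gap is anomalous."""
        if self.last_ts is None:
            self.last_ts = ts
            return False
        gap = ts - self.last_ts
        self.last_ts = ts
        anomalous = False
        if len(self.gaps) >= self.min_baseline:
            mu, sigma = mean(self.gaps), pstdev(self.gaps)
            anomalous = sigma > 0 and abs(gap - mu) / sigma > self.threshold
        self.gaps.append(gap)
        return anomalous


monitor = InterArrivalMonitor()
timestamps = [i * 1.0 + (0.01 if i % 2 else 0.0) for i in range(30)] + [29.06]
flags = [monitor.observe(t) for t in timestamps]
print(any(flags[:-1]), flags[-1])  # False True
```

The final event arrives about 50 ms after its predecessor instead of roughly one second, so it stands out by dozens of standard deviations while the slightly jittered regular events do not.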

Building hardened protocols lays the groundwork for evaluating the maturity of security controls across various technologies.

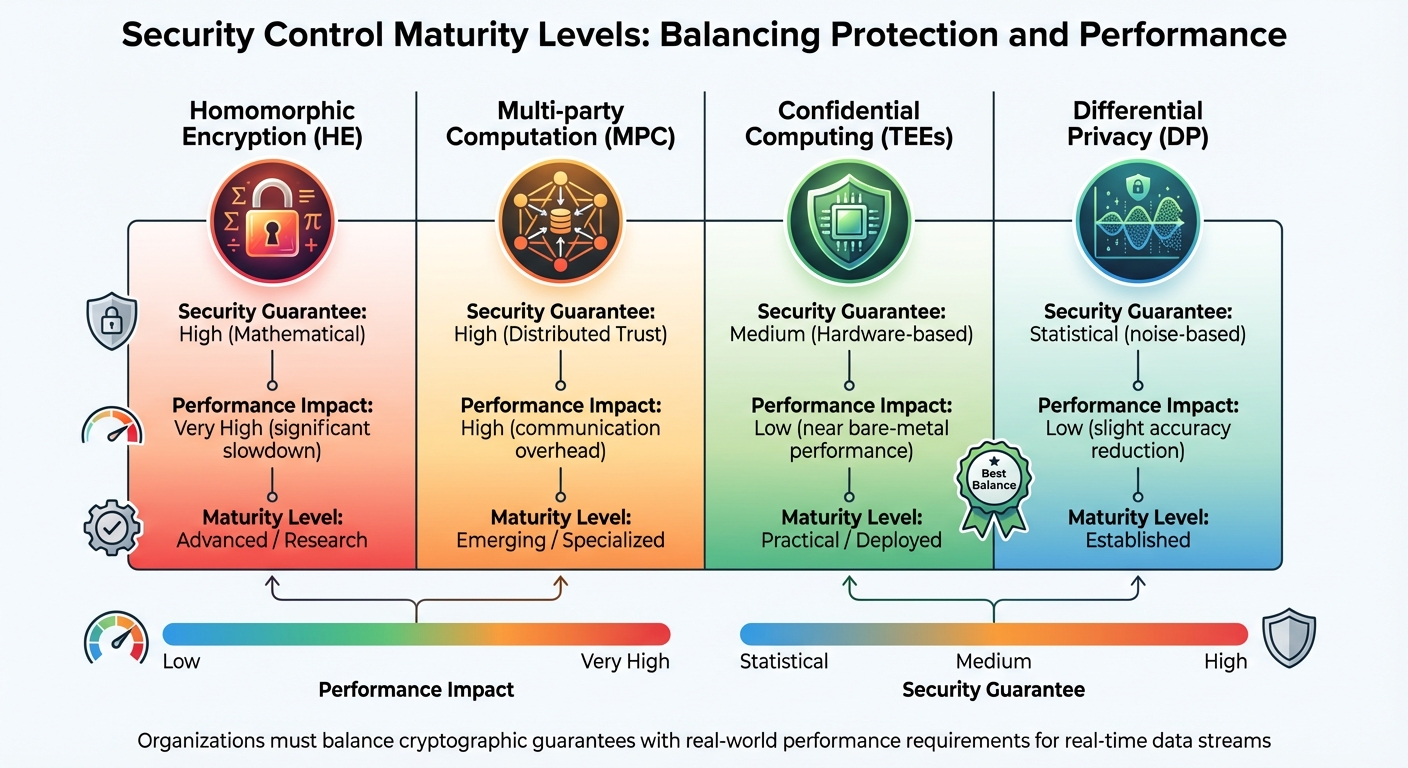

When choosing defenses for real-time data streams, organizations must balance security effectiveness with performance trade-offs. Here's a comparison of four major security technologies:

| Control Technology | Security Guarantee | Performance Impact | Maturity Level |
|---|---|---|---|
| Homomorphic Encryption (HE) | High (Mathematical) | Very High (significant slowdown) | Advanced / Research |
| Multi-party Computation (MPC) | High (Distributed Trust) | High (communication overhead) | Emerging / Specialized |
| Confidential Computing (TEEs) | Medium (Hardware-based) | Low (near bare-metal performance) | Practical / Deployed |
| Differential Privacy (DP) | Statistical (noise-based) | Low (slight accuracy reduction) | Established |

Confidential Computing with TEEs strikes a balance for many real-time applications, offering hardware-based isolation with minimal performance impact. While it doesn't provide the mathematical guarantees of Homomorphic Encryption, it is far more practical for large-scale cloud deployments. Differential Privacy works well in scenarios where statistical guarantees are acceptable, although it can slightly reduce model accuracy.
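To illustrate what "statistical (noise-based)" means in the table, here is the classic Laplace mechanism for releasing a count. Adding or removing one record changes a count by at most 1, so its sensitivity is 1 and the noise is drawn from Laplace(0, 1/ε). The ε values and the seeded generator are illustrative; floating-point Laplace sampling has known subtleties, so production systems should rely on a vetted DP library rather than this sketch.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-DP; a count has sensitivity 1, so scale = 1/epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(42)  # seeded only to make the demo repeatable
for eps in (0.1, 1.0, 10.0):
    print(f"epsilon={eps}: noisy count = {private_count(1000, eps, rng):.1f}")
```

Smaller ε means stronger privacy and proportionally larger noise, which is the accuracy cost the table refers to.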

Security controls evolve through phases. Organizations typically start with basic data reduction, move to pattern recognition, advance to automated intelligence, and eventually operationalize intelligence with autonomous systems. As Robert A. Bridges noted:

The true potential of this technology lies in creating autonomous, self-improving deception systems to counter the emerging threat of intelligent, automated attackers.

Context-aware firewalls that monitor retrieval, response, and prompt streams in real time can help prevent data leaks. With around 90% of organizational data being unstructured and increasingly fueling real-time AI applications, comprehensive security measures are more critical than ever.

Protecting real-time data streams is no small task, as it involves tackling several tough challenges. Encryption, for instance, often comes with performance trade-offs. Organizations must choose between hardware-based solutions like Trusted Execution Environments, which can be faster, and cryptographic methods such as homomorphic encryption, which tend to introduce high latency.

Data leakage is another ongoing concern in real-time pipelines. For example, timing attacks can analyze encrypted traffic and identify conversation topics with over 90% accuracy. Banghua Zhu from the University of California, Berkeley, highlights this risk:

GenAI models are not good at keeping secrets.

This underscores the danger of training models on private data, as it could lead to unintended exposure.

Adding to the complexity, supply chain vulnerabilities create more opportunities for breaches. The involvement of multiple internal and external contributors means there are numerous points where unauthorized access or manipulation could occur. From data ingestion to model inference, every step in the chain demands independent verification and constant monitoring to guard against threats like poisoning attacks or data theft.

To address these challenges, a multi-layered security strategy is key. Relying on a combination of selective encryption, continuous monitoring, and hardware-based isolation can provide practical safeguards. With real-time systems processing as much as 30 terabytes of data daily, finding the right balance between robust security and smooth operational performance is critical for protecting these high-speed data streams.

To keep real-time data streams both fast and secure, organizations should focus on refining their encryption methods and policies. Fast authenticated ciphers such as AES-GCM, which is hardware-accelerated on CPUs with AES instructions, and ChaCha20-Poly1305, which performs well in software on devices without such instructions, provide robust security with minimal performance impact. Pairing them with TLS 1.3 also helps, since it shortens the full handshake to a single round trip and supports faster session resumption.
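In Python's standard library, requiring TLS 1.3 on a client connection comes down to setting `minimum_version` on an `ssl.SSLContext`. A sketch follows; the host name is a placeholder. With OpenSSL, the default TLS 1.3 suites already cover AES-GCM and ChaCha20-Poly1305, and `set_ciphers()` does not affect the TLS 1.3 suite list.

```python
import socket
import ssl


def tls13_client_context() -> ssl.SSLContext:
    """Client context with certificate and hostname checks that refuses anything below TLS 1.3."""
    ctx = ssl.create_default_context()            # verification enabled by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


def open_stream(host: str, port: int = 443) -> ssl.SSLSocket:
    """Open a TLS 1.3 connection to a streaming endpoint."""
    raw = socket.create_connection((host, port), timeout=5)
    try:
        return tls13_client_context().wrap_socket(raw, server_hostname=host)
    except Exception:
        raw.close()
        raise


# Example (needs network access; the host name is a placeholder):
# with open_stream("stream.example.com") as conn:
#     print(conn.version(), conn.cipher())
```

Servers that only speak TLS 1.2 will fail the handshake with this context, which is intentional for streams where the shorter handshake matters.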

Another effective strategy is selective encryption, where only sensitive information is encrypted. This reduces the processing load while still safeguarding critical data. For added security and efficiency, confidential-computing enclaves can be used. These allow data to be decrypted and processed securely within protected memory zones, cutting down on latency without sacrificing security.
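Selective encryption can be sketched as field-level protection: only fields marked sensitive pass through a cipher, while routing metadata stays in plaintext for fast processing. The field names are hypothetical, and the `encrypt` callable is an assumption, to be backed in practice by an authenticated cipher such as AES-GCM from a vetted cryptography library; this sketch deliberately does not implement a cipher itself.

```python
import base64
from typing import Callable, Dict, FrozenSet, Mapping

# Hypothetical schema: the fields of a stream event that count as sensitive.
SENSITIVE_FIELDS: FrozenSet[str] = frozenset({"patient_name", "card_number"})


def selectively_encrypt(event: Mapping[str, str],
                        encrypt: Callable[[bytes], bytes],
                        sensitive: FrozenSet[str] = SENSITIVE_FIELDS) -> Dict[str, str]:
    """Return a copy of `event` in which only the sensitive fields are encrypted.

    `encrypt` must be a real authenticated cipher (e.g. AES-GCM from a vetted
    library); ciphertext is base64-encoded so the event stays JSON-friendly.
    """
    out: Dict[str, str] = {}
    for key, value in event.items():
        if key in sensitive:
            out[key] = base64.b64encode(encrypt(value.encode("utf-8"))).decode("ascii")
        else:
            out[key] = value  # stays in plaintext for routing and analytics
    return out
```

A call such as `selectively_encrypt({"patient_name": "...", "heart_rate": "72"}, my_aead_encrypt)` would leave `heart_rate` readable downstream while the name travels as ciphertext; `my_aead_encrypt` is a placeholder for your own cipher wrapper, which also has to manage keys and nonces.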

Organizations can also adopt adaptive security policies that adjust encryption strength based on the sensitivity of the data stream. By monitoring key performance metrics like latency and CPU usage, they can fine-tune their approach. This ensures that real-time data streams remain fast, secure, and dependable - especially for AI-driven applications.
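An adaptive policy can be expressed as a small decision function: data sensitivity sets a minimum protection level, and measured latency decides whether a heavier option is affordable. The tiers, profile names, and the 30% headroom rule below are hypothetical and would need tuning against real metrics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamMetrics:
    p99_latency_ms: float
    latency_budget_ms: float


# Hypothetical profiles, ordered from lightest to heaviest.
PROFILES = ["tls-only", "tls+field-encryption", "tls+field-encryption+enclave"]
MIN_PROFILE = {"public": 0, "internal": 1, "restricted": 2}


def choose_profile(sensitivity: str, metrics: StreamMetrics) -> str:
    """Pick the strongest profile the latency budget allows, never below the floor."""
    floor = MIN_PROFILE[sensitivity]  # unknown labels raise KeyError instead of downgrading
    headroom = metrics.latency_budget_ms - metrics.p99_latency_ms
    # Assumption: with at least 30% of the budget spare, step up one level.
    level = floor + 1 if headroom >= 0.3 * metrics.latency_budget_ms else floor
    return PROFILES[min(level, len(PROFILES) - 1)]


print(choose_profile("internal", StreamMetrics(p99_latency_ms=40.0, latency_budget_ms=100.0)))
print(choose_profile("internal", StreamMetrics(p99_latency_ms=90.0, latency_budget_ms=100.0)))
```

Latency can raise protection above the floor but never push it below, so a slow stream of restricted data stays in the heaviest profile.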

Real-time AI systems grapple with a host of security issues, especially when they need to process data with sub-second latency. Even encrypted data in query streams can unintentionally reveal sensitive information. How? Adversaries can exploit patterns in timing, metadata, or partial encryption to deduce the original inputs. Side-channel attacks - like analyzing token lengths or response times - can also bypass encryption, exposing details such as language model outputs or spoken-text data.

In the race for speed, many systems compromise security. Lightweight encryption or skipping end-to-end protection altogether can leave systems vulnerable. Even when encryption is in place, shortcuts like shorter key lengths or faster, less secure ciphers can open the door to interception or decryption.

NanoGPT tackles these challenges head-on by processing all inputs and outputs directly on the user’s device. This approach eliminates network exposure, ensuring both data privacy and the ultra-fast performance users demand.

Supply chain weaknesses can open unnoticed doors for attackers aiming at real-time data streams. Vulnerable elements like third-party libraries, firmware, or cloud integrations can expose sensitive information as it moves through streaming pipelines. This creates an opportunity for malicious actors to intercept or manipulate the data before encryption or processing takes place.

Real-time data streams demand quick encryption and rapid processing to function effectively. Any flaws in the supply chain can disrupt performance, add delays, and jeopardize data confidentiality. Protecting every link in the supply chain is critical to safeguarding the integrity, privacy, and speed of real-time AI data streams.