Apr 2, 2025

Semantic parsing helps large language models (LLMs) understand and process natural language more accurately. It converts human language into structured formats, such as logical forms or intent-and-slot representations. This improves context understanding and response accuracy.

Semantic parsing is evolving, but challenges like handling complex language and resource demands remain. Future improvements aim to enhance efficiency, context retention, and domain-specific adaptability.

Semantic parsing methods now combine older techniques with modern neural architectures to improve language understanding.

Traditional rule-based systems rely on predefined grammar and linguistic patterns. These systems are predictable but often struggle with language variations and shifts in context. Neural network approaches, on the other hand, learn directly from data, making them more flexible and adaptable to different scenarios.
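The sketch below is a minimal rule-based parser, shown only for illustration. It maps utterances to structured logical forms (plain dicts) using hand-written regex rules. The `RULES` table, the intent names, and the `parse` function are hypothetical and do not come from any particular system. The output is predictable for phrasings the rules cover. A small rewording falls through to `None`, which is the brittleness described above.

```python
import re
from typing import Optional

# Hypothetical grammar: each rule pairs a regex pattern with a builder that
# turns the captured groups into a structured logical form (a dict here).
RULES = [
    (re.compile(r"^what is the weather in (?P<city>[a-z ]+)\??$"),
     lambda m: {"intent": "get_weather", "city": m["city"].strip()}),
    (re.compile(r"^book a flight from (?P<src>[a-z ]+) to (?P<dst>[a-z ]+)$"),
     lambda m: {"intent": "book_flight", "from": m["src"].strip(), "to": m["dst"].strip()}),
]


def parse(utterance: str) -> Optional[dict]:
    """Return the logical form built by the first matching rule, or None."""
    text = utterance.lower().strip()
    for pattern, build in RULES:
        match = pattern.match(text)
        if match:
            return build(match)
    return None  # no rule covers this phrasing


print(parse("What is the weather in Paris?"))        # covered by a rule
print(parse("How's the weather looking in Paris?"))  # a paraphrase no rule anticipates
```

A neural parser would instead learn the utterance-to-structure mapping from annotated examples. Paraphrases like the second one are therefore less likely to fail outright.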

Here’s how they compare:

| Aspect | Rule-Based Systems | Neural Networks |
|---|---|---|
| Flexibility | Limited to predefined rules | Learns from data patterns |
| Maintenance | Requires manual updates | Improves with more data |
| Processing Speed | Generally faster | More computationally demanding |
| Context Awareness | Limited | Strong contextual understanding |
| Error Handling | Strict and rigid | Handles errors more gracefully |

Transformers have reshaped semantic parsing. Their self-attention mechanism lets a model process every token in a sequence in parallel, rather than one step at a time. This capability brings several benefits:

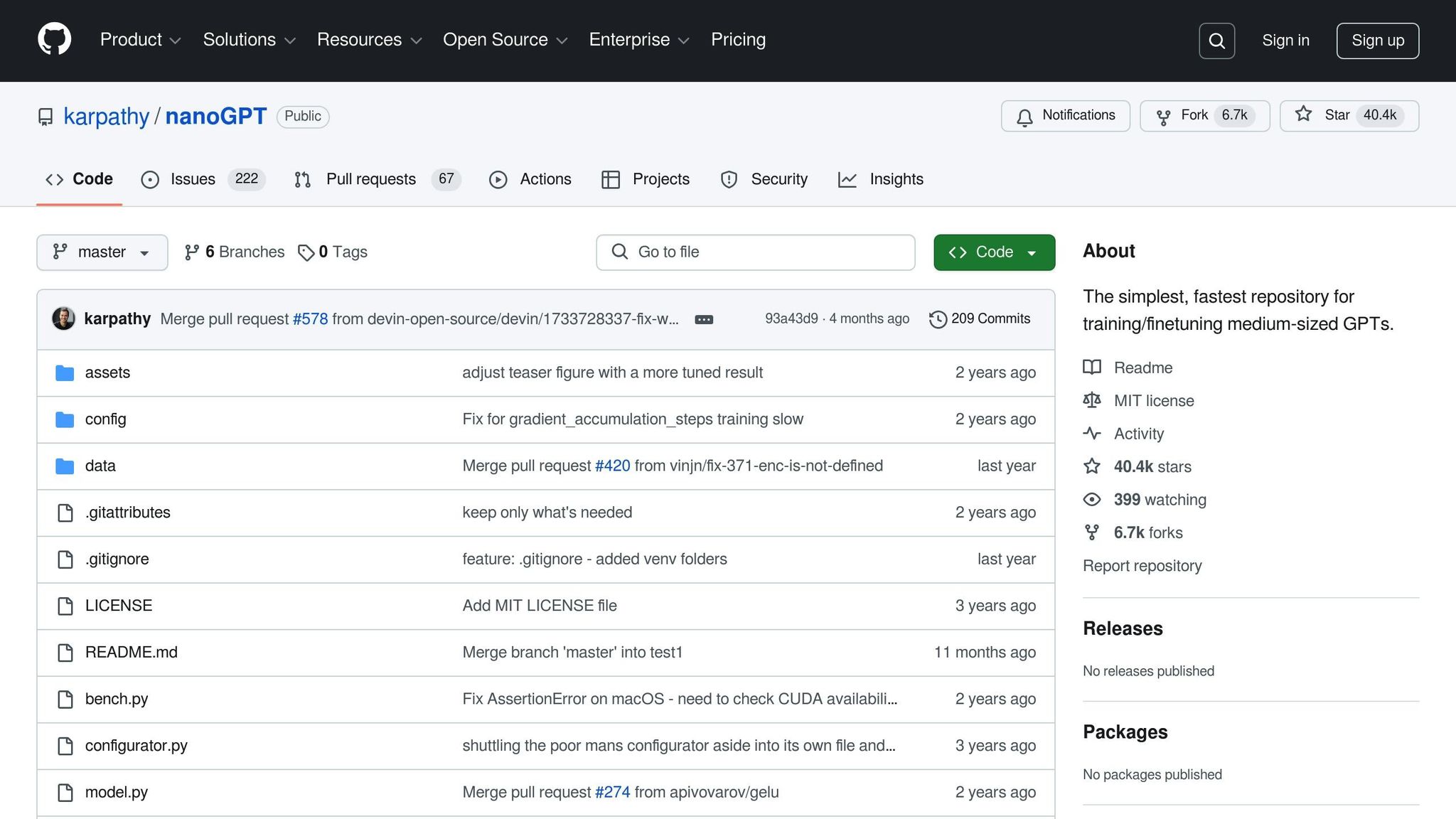

NanoGPT utilizes this architecture to maintain context effectively, even in complex queries.
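To make the parallelism concrete, here is a minimal sketch of scaled dot-product self-attention, the core transformer operation, written in plain Python. As a simplifying assumption, the queries, keys, and values are the raw toy embeddings. Real models compute them with learned projections, and those projections are omitted here. Each output row depends only on Q, K, and V, not on other rows' outputs. On parallel hardware all positions can therefore be computed at once. The loops here are only for readability.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    top = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d)) V.

    No row of the result depends on another row's output, so every token
    position can be processed simultaneously rather than sequentially.
    """
    d = len(q[0])
    scores = matmul(q, transpose(k))  # every token scored against every token
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v)  # each output is a weighted mix of all values


# Toy 3-token sequence with 2-dimensional embeddings (illustrative values only).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = self_attention(x, x, x)
print(out)
```

Each output row mixes information from the whole sequence. This is how a transformer keeps earlier context available when interpreting a later token.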

Prompt design strongly affects how accurately semantic parsing works. A well-structured prompt guides the model's interpretation of the input and constrains the form of its response.
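The sketch below illustrates a few widely used prompt-design practices for parsing tasks. It states the task explicitly, fixes the exact output format, and clearly delimits the user input. It also rejects any reply that does not match the requested structure. These practices are an assumption about what a well-structured prompt looks like. The key set, `build_prompt`, and `validate` are hypothetical names, and the model call itself is omitted.

```python
import json
from typing import Optional

# Hypothetical output schema for a toy flight-booking parser.
REQUIRED_KEYS = {"intent", "origin", "destination"}


def build_prompt(utterance: str) -> str:
    """Assemble a prompt that states the task, pins down the output format,
    and delimits the user input so it is not confused with instructions."""
    return (
        "Convert the user request into a JSON object.\n"
        f"Use exactly these keys: {', '.join(sorted(REQUIRED_KEYS))}.\n"
        "Use null for any value the request does not mention.\n"
        "Return only the JSON object, with no extra text.\n\n"
        f"Request: \"\"\"{utterance}\"\"\""
    )


def validate(model_output: str) -> Optional[dict]:
    """Accept the model's reply only if it is a JSON object with exactly the expected keys."""
    try:
        parsed = json.loads(model_output)
    except json.JSONDecodeError:
        return None
    if not isinstance(parsed, dict) or set(parsed) != REQUIRED_KEYS:
        return None
    return parsed


print(build_prompt("Fly me from Oslo to Rome"))
```

Validating the reply against the same schema the prompt requested catches malformed parses before they reach downstream code.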

These methods together form a strong basis for improving semantic parsing while addressing its current challenges.

Even with progress in semantic parsing for large language models (LLMs), several hurdles remain. While transformer models and better prompt engineering have improved performance, challenges related to data, language complexity, and system constraints still hold back their full potential.

Limited training data makes it harder for models to handle intricate language. The table below summarizes where complex language features cause problems:

| Language Feature | Challenge | Impact on Accuracy |
|---|---|---|
| Nested Clauses | Struggles with deeper nesting levels | Accuracy drops after 3–4 levels of nesting |
| Idiomatic Expressions | Fails to grasp figurative meaning | Misinterprets idioms literally |
| Ambiguous References | Poor entity tracking | Errors in resolving pronouns |
| Long-range Dependencies | Limited memory for long contexts | Loses track of earlier context in lengthy texts |

These linguistic hurdles make it difficult for LLMs to fully understand and process nuanced or complex inputs.
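Nesting-related failures can be measured on your own data by bucketing exact-match accuracy by the nesting depth of the gold parse. The sketch below assumes s-expression-style logical forms, where nesting depth equals parenthesis depth. That format is an assumption, and `nesting_depth` and `accuracy_by_depth` are hypothetical helpers. The sample pairs are made-up toy data, not results.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def nesting_depth(logical_form: str) -> int:
    """Maximum parenthesis depth of an s-expression-style logical form."""
    depth = best = 0
    for ch in logical_form:
        if ch == "(":
            depth += 1
            best = max(best, depth)
        elif ch == ")":
            depth -= 1
    return best


def accuracy_by_depth(pairs: List[Tuple[str, str]]) -> Dict[int, float]:
    """Exact-match accuracy of (gold, predicted) pairs, bucketed by gold nesting depth."""
    hits: Dict[int, int] = defaultdict(int)
    totals: Dict[int, int] = defaultdict(int)
    for gold, predicted in pairs:
        d = nesting_depth(gold)
        totals[d] += 1
        hits[d] += int(gold.strip() == predicted.strip())
    return {d: hits[d] / totals[d] for d in sorted(totals)}


# Toy (gold, predicted) pairs for illustration only.
pairs = [
    ("(count (river))", "(count (river))"),
    ("(count (river (in texas)))", "(count (river texas))"),
    ("(answer (count (river (in (state texas)))))", "(answer (count (river (in (state texas)))))"),
]
print(accuracy_by_depth(pairs))
```

With a real evaluation set, a per-depth breakdown shows whether accuracy falls off at a particular nesting level.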

In addition to language-specific issues, system limitations also play a role. Semantic parsing requires extensive computational power, and even optimized architectures need significant GPU memory and processing time. Attention mechanisms, which are central to understanding context, scale poorly with longer inputs. Because attention scores every token against every other token, memory usage grows quadratically with text length.
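The quadratic growth is easy to quantify with a back-of-the-envelope calculation. The sketch below assumes the full attention score matrix is stored for a single sequence in a single layer. The head count (32) and 2-byte values (fp16/bf16) are illustrative assumptions, not figures for any particular model. The helper name is hypothetical.

```python
def attention_score_bytes(seq_len: int, num_heads: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to hold one layer's attention scores if fully materialized:
    one seq_len x seq_len matrix per head (bytes_per_value=2 assumes fp16/bf16)."""
    return num_heads * seq_len * seq_len * bytes_per_value


for n in (1_024, 2_048, 4_096, 8_192):
    mib = attention_score_bytes(n, num_heads=32) / 2**20
    print(f"{n:>6} tokens -> {mib:,.0f} MiB per layer")
```

Under these assumptions, each doubling of the input length quadruples the memory: 64, 256, 1,024, and 4,096 MiB per layer for 1,024 through 8,192 tokens.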

This creates a balancing act between speed, accuracy, and resource demands. Tools like NanoGPT attempt to ease some of these constraints by focusing on local processing, but the trade-offs remain unavoidable.

Advancements in semantic parsing have significantly improved large language models (LLMs), enhancing their ability to process and understand natural language more effectively.

New techniques have strengthened how LLMs interpret context. Enhanced attention mechanisms and parsing methods now allow models to work with longer, more intricate text while staying coherent. These updates make it possible for models to perform well even when data is limited.

Recent developments have made it easier to perform semantic parsing with smaller datasets. Methods like transfer learning, few-shot learning, and self-supervised learning are particularly helpful for specialized tasks where large annotated datasets are unavailable. Tools such as NanoGPT are already putting these methods into action.
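Few-shot learning can be sketched as prompt construction. The model receives a handful of annotated examples before the new input. The version below picks the examples most similar to the query by word overlap (Jaccard similarity). That retrieval choice is one simple option and an assumption on our part. The example pool, its logical-form syntax, and the function names are hypothetical, and the model call is omitted.

```python
from typing import List, Tuple

# Hypothetical pool of annotated (utterance, logical form) pairs for a small domain.
EXAMPLES: List[Tuple[str, str]] = [
    ("how many rivers are in texas", "(count (river (in texas)))"),
    ("what is the capital of ohio", "(capital ohio)"),
    ("which rivers flow through ohio", "(river (through ohio))"),
    ("what is the population of texas", "(population texas)"),
]


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity between two utterances."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    return len(sa & sb) / len(sa | sb) if sa | sb else 0.0


def few_shot_prompt(query: str, k: int = 2) -> str:
    """Build a prompt from the k most similar annotated examples, followed by the query."""
    ranked = sorted(EXAMPLES, key=lambda ex: jaccard(query, ex[0]), reverse=True)
    shots = "\n\n".join(f"Input: {u}\nOutput: {lf}" for u, lf in ranked[:k])
    return f"{shots}\n\nInput: {query}\nOutput:"


print(few_shot_prompt("how many rivers flow through texas"))
```

Only a few labeled pairs are needed here. This is why few-shot prompting suits specialized domains where large annotated datasets are unavailable.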

NanoGPT shows how these advancements translate into practical solutions, applying them to deliver privacy-conscious and efficient language processing.

These advancements are shaping more capable language systems that can tackle increasingly complex tasks with ease.

Recent progress in semantic parsing has significantly boosted the ability of large language models (LLMs) to interpret natural language. Improved parsing methods now allow for better contextual understanding and more accurate responses. This is especially useful for tasks like question-answering systems and conversational tools. Additionally, NanoGPT's localized processing has effectively tackled privacy concerns while maintaining strong language comprehension.

Moving forward, the key areas to refine are efficiency, context retention, and domain-specific adaptability.

These efforts aim to create more advanced tools that rely on semantic parsing for handling complex language tasks, building on the progress outlined in this article.