Apr 10, 2025

Semantic coherence means that AI-generated text flows logically and its ideas connect clearly. Here are five key metrics for evaluating it:

| Metric | Best Used For | Strength | Limitation |
|---|---|---|---|
| BERTScore | Complex text evaluation | Contextual understanding | High computing power needed |
| PMI | Word relationship analysis | Statistical reliability | Limited to word pairs |
| Cosine Similarity | Document comparison | Quick computation | Lacks context sensitivity |
| SMS | Flow and readability | Sentence-level insights | Needs longer text for accuracy |
| Word Embeddings | Semantic relationships | Preserves meaning | Dependent on training data quality |

Each metric has strengths and trade-offs. Choose based on your goals, text type, and available resources. For deeper analysis, combine multiple metrics for better results.

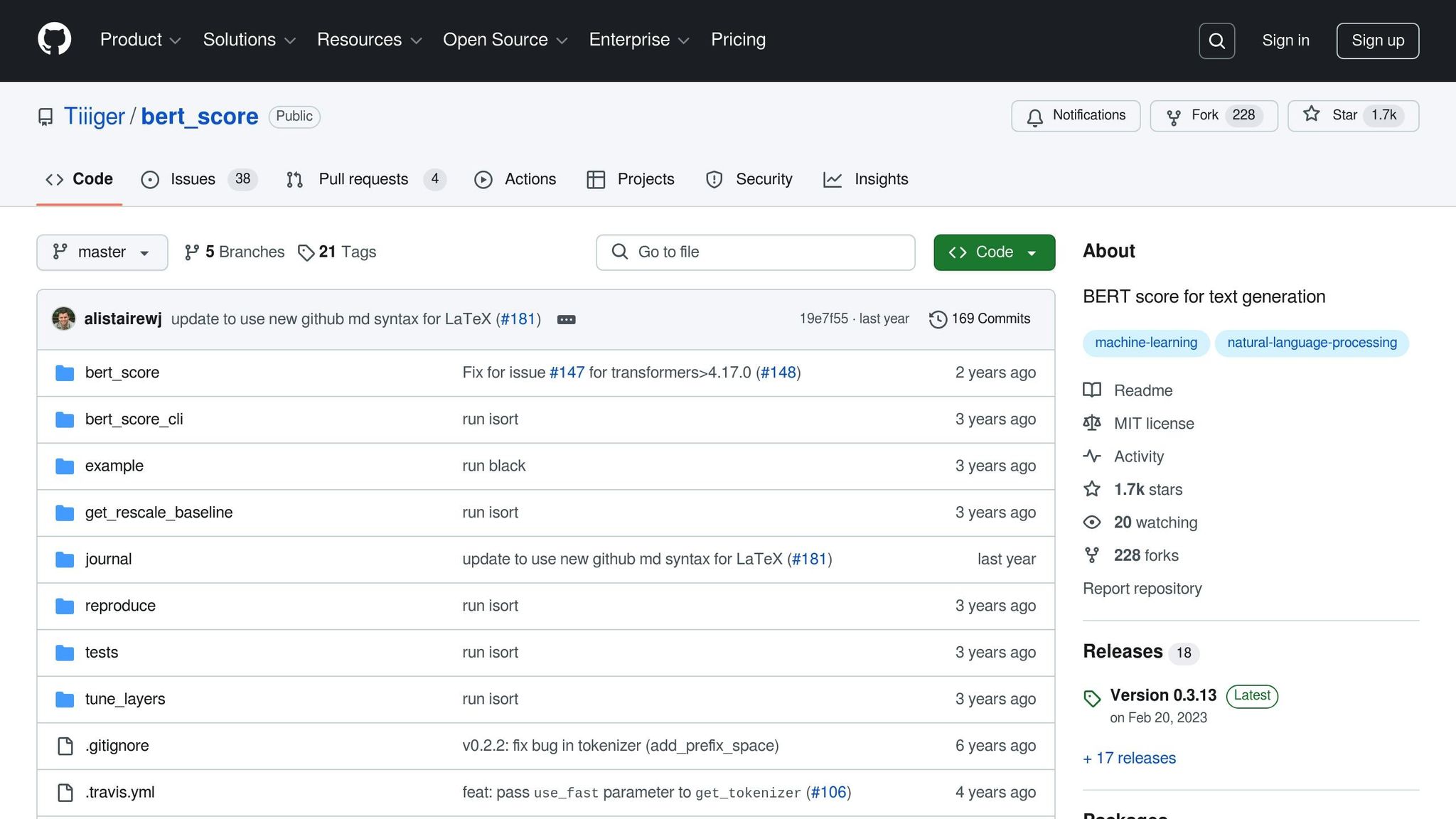

BERTScore evaluates the meaning of text by leveraging BERT embeddings, moving beyond traditional metrics that rely on exact word matches. Instead, it focuses on the context and relationships between words and phrases within their surrounding text.

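Here’s how it works: BERTScore matches each token in the candidate text to its most similar token in the reference text, and vice versa, using the cosine similarity of their contextual embeddings. It then reports three scores: precision, recall, and F1.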

This method excels at identifying semantic similarities that older metrics might miss. For example, when testing NanoGPT's AI-generated content, BERTScore helps ensure quality by catching nuanced matches, such as two sentences that express the same idea in different words. While traditional metrics might flag these as different, BERTScore recognizes their shared meaning.

For tasks like technical documentation or creative writing, BERTScore works best when combined with other metrics to capture nuanced semantic relationships that simpler methods might overlook.
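The matching step behind BERTScore can be sketched in a few lines. This is a minimal illustration, not the reference implementation: real BERTScore uses contextual embeddings from a BERT model, whereas the `TOY` vectors, the example sentences, and the function names below are all made up for the example.

```python
import math

def cosine(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def greedy_match_scores(cand_vecs, ref_vecs):
    """Greedy token matching in the style of BERTScore.

    Precision: each candidate token is paired with its most similar reference token.
    Recall: each reference token is paired with its most similar candidate token.
    """
    precision = sum(max(cosine(c, r) for r in ref_vecs) for c in cand_vecs) / len(cand_vecs)
    recall = sum(max(cosine(r, c) for c in cand_vecs) for r in ref_vecs) / len(ref_vecs)
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1

# Hypothetical static vectors standing in for contextual BERT embeddings.
TOY = {
    "the": [1.0, 0.0, 0.0],
    "cat": [0.0, 1.0, 0.1],
    "feline": [0.0, 0.9, 0.2],
    "sat": [0.0, 0.1, 1.0],
    "rested": [0.0, 0.2, 0.9],
}

candidate = ["the", "feline", "rested"]
reference = ["the", "cat", "sat"]
p, r, f1 = greedy_match_scores([TOY[t] for t in candidate], [TOY[t] for t in reference])
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

Only one of the three words matches exactly, yet all three scores come out close to 1 because "feline" and "rested" sit near "cat" and "sat" in the toy vector space. That is the behavior an exact-match metric cannot reproduce.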

Pointwise Mutual Information (PMI) measures how often words appear together compared to how often you'd expect them to by chance. It's a useful tool for checking how well AI-generated content holds together semantically.

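PMI uses a logarithmic scale to evaluate word relationships. It is the logarithm of the ratio between how often two words actually co-occur and how often they would co-occur if they were independent: log(P(x, y) / (P(x) · P(y))). A positive score means the words appear together more often than chance, a score near zero means they are roughly independent, and a negative score means they appear together less often than chance.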

For instance, PMI can highlight whether word combinations in generated text make sense. Take these examples:

| Word Pair | PMI Score | Interpretation |
|---|---|---|
| Coffee + Brew | +4.2 | Strong, logical association |
| Ocean + Salt | +3.8 | Makes sense, expected pairing |
| Pizza + Cloud | -2.1 | Odd and unlikely combination |

These scores help evaluate how natural or logical word pairings are in a given text.
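To show where such scores come from, here is a minimal sketch that computes PMI from sentence-level co-occurrence counts. The four-sentence corpus and the `pmi_table` helper are made up for illustration, and log base 2 is an assumption. A real evaluation would estimate the probabilities from a much larger corpus.

```python
import math
from collections import Counter
from itertools import combinations

def pmi_table(sentences):
    """PMI (log base 2) for every word pair that shares at least one sentence."""
    n = len(sentences)
    word_counts = Counter()
    pair_counts = Counter()
    for sentence in sentences:
        words = set(sentence.lower().split())  # count each word once per sentence
        word_counts.update(words)
        pair_counts.update(frozenset(p) for p in combinations(sorted(words), 2))

    scores = {}
    for pair, joint in pair_counts.items():
        x, y = tuple(pair)
        p_xy = joint / n
        p_x = word_counts[x] / n
        p_y = word_counts[y] / n
        scores[pair] = math.log2(p_xy / (p_x * p_y))
    return scores

# Hypothetical toy corpus.
corpus = [
    "brew fresh coffee",
    "coffee brew time",
    "ocean salt spray",
    "salt ocean air",
]

scores = pmi_table(corpus)
print(scores[frozenset({"coffee", "brew"})])  # 1.0: the pair co-occurs twice as often as chance
```

Pairs that never share a sentence, such as "coffee" and "ocean" here, do not appear in the table at all, because their PMI would be negative infinity. Practical implementations usually handle this with smoothing or by clipping negative values.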

PMI is great for spotting semantic issues that could affect how coherent a text feels:

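Despite its usefulness, PMI has some challenges: it only scores word pairs, so it misses longer-range structure, and its estimates are unreliable for rare words, which tend to receive inflated scores.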

To get the most out of PMI for evaluating text coherence:

PMI is a solid way to measure how well words are connected within a text. When combined with other tools like BERTScore, it offers an objective way to assess how natural and coherent content feels.

Cosine similarity measures how closely related two pieces of text are by comparing their embedding vectors. Each text is represented as a vector in a high-dimensional space, and the method calculates the cosine of the angle between the two vectors. A score close to 1 means the texts are closely related in meaning, while a score near 0 suggests little similarity. Because it is cheap to compute, this vector-based method works well alongside context-based approaches such as BERTScore.
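The calculation itself is short. The sketch below uses made-up example vectors, and it returns 0.0 for a zero vector, which is a convention chosen here rather than part of the definition.

```python
import math

def cosine_similarity(u, v):
    """Dot product of u and v divided by the product of their lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # assumption: treat an all-zero vector as unrelated to everything
    return dot / (norm_u * norm_v)

same_direction = cosine_similarity([1, 2, 3], [2, 4, 6])  # close to 1: same direction, different length
unrelated = cosine_similarity([1, 0], [0, 1])             # 0.0: vectors at right angles
opposite = cosine_similarity([1, 0], [-1, 0])             # close to -1: opposite directions
print(same_direction, unrelated, opposite)
```

Note that the score depends only on direction, not on vector length, which is why a long document and a short one on the same topic can still score close to 1.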

Sentence Mover's Similarity (SMS) measures how well sentences connect and flow by analyzing their semantic relationships. It builds on Word Mover's Distance but operates at the sentence level.

To do this, SMS generates sentence embeddings and calculates the minimum transformation cost between different text segments. It examines both local coherence (connections between adjacent sentences) and global coherence (the overall structure of the text). Scores range from 0 to 1, with higher scores showing smoother flow and better organization.

What makes SMS stand out is its ability to assess how well a text is organized. It can pinpoint logical progressions and detect abrupt shifts in ideas.
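The idea of local coherence and abrupt shifts can be illustrated with a much simpler stand-in. The sketch below is not SMS itself: instead of sentence embeddings and a transport cost, it compares bag-of-words counts of adjacent sentences with cosine similarity. The function names, the example text, and the `threshold` value of 0.1 are assumptions made for this example.

```python
import math
import re
from collections import Counter

def sentence_vector(sentence):
    """Bag-of-words counts, a crude substitute for a sentence embedding."""
    return Counter(re.findall(r"[a-z']+", sentence.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0

def local_coherence(sentences):
    """Similarity of each sentence to the one that follows it."""
    vectors = [sentence_vector(s) for s in sentences]
    return [cosine(vectors[i], vectors[i + 1]) for i in range(len(vectors) - 1)]

def abrupt_shifts(sentences, threshold=0.1):
    """Indices of sentences that share almost nothing with their predecessor."""
    return [i + 1 for i, score in enumerate(local_coherence(sentences)) if score < threshold]

text = [
    "The model generates a draft summary.",
    "The draft summary is then scored by the model.",
    "Bananas ripen faster in paper bags.",
]

print(local_coherence(text))
print(abrupt_shifts(text))  # [2]: the third sentence breaks from the topic
```

Swapping `sentence_vector` for real sentence embeddings would let the same loop recognize paraphrases and related vocabulary, which word overlap alone cannot do.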

Here are the three main components of SMS:

To use SMS, combine semantic similarity analysis with sentence structure evaluation to ensure a natural and logical flow of ideas.

This metric is incredibly useful for tasks like automated content evaluation and reviewing AI-generated text. It provides a detailed look at how well ideas connect and transition throughout a piece.

Word embeddings turn words into dense numerical vectors in high-dimensional space, grouping similar words together based on their meanings. These metrics are essential for analyzing how well text maintains semantic coherence during generation.

By converting text into mathematical representations, word embedding metrics evaluate how consistently the generated text reflects relationships between words and concepts.
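As a small illustration of how similar words end up grouped together, the sketch below looks up the nearest neighbors of a word in an embedding table. The three-dimensional `EMBEDDINGS` values are invented for the example. Real embeddings are learned from data and typically have hundreds of dimensions.

```python
import math

# Hypothetical toy embeddings; real ones come from a trained model.
EMBEDDINGS = {
    "doctor": [0.9, 0.1, 0.0],
    "nurse": [0.8, 0.2, 0.1],
    "hospital": [0.7, 0.3, 0.0],
    "banana": [0.0, 0.1, 0.9],
    "apple": [0.1, 0.0, 0.8],
}

def cosine(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def nearest_neighbors(word, table, k=2):
    """Return the k words whose vectors point most nearly the same way as `word`."""
    query = table[word]
    scored = [(other, cosine(query, vec)) for other, vec in table.items() if other != word]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

neighbors = nearest_neighbors("doctor", EMBEDDINGS)
print([w for w, _ in neighbors])  # ['nurse', 'hospital']
```

In a coherence check, a generated word whose nearest neighbors are all far from the rest of the passage's vocabulary is a candidate for an off-topic or inconsistent term.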

The evaluation process includes three main elements:

Several factors impact the effectiveness of word embeddings:

Unlike Sentence Mover's Similarity (SMS), which evaluates sentence-level flow, word embeddings focus on individual word relationships. Combining these metrics with SMS provides a more thorough assessment of text quality.

Word embedding metrics help ensure consistent terminology, logical word choices, clear progression, and accurate domain-specific language. They offer a quantitative way to measure semantic coherence in various contexts.

This section wraps up the metrics discussed earlier, highlighting their trade-offs to help you refine your approach. The goal is to select the metric that aligns best with your evaluation needs.

Here's a quick breakdown of the metrics and their pros and cons:

| Metric | Best Used For | Key Strength | Primary Limitation |
|---|---|---|---|
| BERTScore | Complex text evaluation | Contextual understanding | Requires significant computing power |
| PMI | Word relationship analysis | Statistical reliability | Limited to analyzing word pairs |
| Cosine Similarity | Document comparison | Quick computation | Lacks sensitivity to context |
| SMS | Flow and readability | Sentence-level insights | Needs longer text for accuracy |
| Word Embeddings | Semantic relationships | Preserves meaning | Relies on training data quality |

This table provides a clear overview of when to use each metric and what to watch out for.

For quick, real-time tasks, Cosine Similarity is a solid choice due to its speed. On the other hand, if you need deeper accuracy and contextual analysis, BERTScore is worth the extra processing effort.

Word embeddings are especially useful for domain-specific tasks, ensuring terminology and concepts stay consistent throughout the text.

To streamline your evaluation process:

As natural language processing continues to evolve, these metrics will become even more refined. The key is to choose the right tool for your specific requirements while keeping practical limitations in mind.